I have a workflow that sends a POST request to a web service. The web service responds with JSON-encoded data.

I have used JSON Path to extract the key values (job_id and data).

I need the job ID because it will be a constant value in every row I’m about to create, but it’s not in the data portion of the response. So I need to extract job_id as a workflow variable, or something similar, but I can’t seem to do that. Any ideas?

Also, the data is complex. There are multiple results, and each result has a collection of models I must iterate over (a loop inside a loop). I will need some data from the outer loop to create rows in the inner loop. This is a fairly common need when handling complex output, so I assume KNIME has a way to do it, but I can’t find a good example.
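To make the loop-inside-a-loop concrete, here is a minimal sketch in plain Python, outside KNIME. It assumes the nesting `outputs.job_id` and `outputs.outputs[*].models[*]` from the description; the sample values, the `dataset_id` field, and the `flatten` helper are hypothetical and only illustrate how outer-loop values are carried into each inner-loop row.

```python
import json

# Hypothetical, trimmed response; "dataset_id" is an invented outer-loop field.
response_text = """
{
  "outputs": {
    "job_id": "job-1",
    "outputs": [
      {"dataset_id": 0, "models": [{"name": "Weibull", "model_index": 7},
                                   {"name": "Logistic", "model_index": 8}]},
      {"dataset_id": 1, "models": [{"name": "Weibull", "model_index": 7}]}
    ]
  }
}
"""


def flatten(response):
    """Return one row per model, repeating job-level and result-level values."""
    job_id = response["outputs"]["job_id"]  # constant for every row
    rows = []
    for result in response["outputs"]["outputs"]:  # outer loop: results
        for model in result.get("models", []):     # inner loop: models
            rows.append({
                "job_id": job_id,
                "dataset_id": result.get("dataset_id"),
                "model_name": model.get("name"),
                "model_index": model.get("model_index"),
            })
    return rows


rows = flatten(json.loads(response_text))
for row in rows:
    print(row)
```

The same idea applies inside a workflow: read the constant value once, then attach it to every row produced by the inner iteration.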

At present, I also cannot figure out how to proceed with even the outer loop over the JSON data. Whenever I try, for example, the JSON to Table node, it hangs and never completes execution.

Any ideas?

Hi,

Would you provide a sample data set?

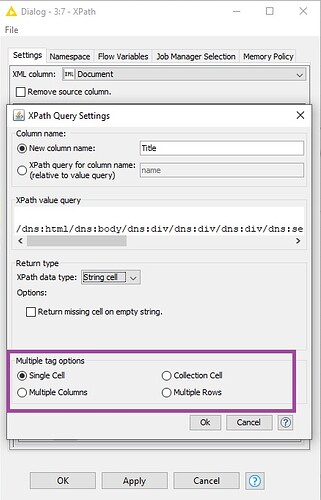

Meanwhile, have you tried using the “JSON To XML” node and then parsing the XML with an “XPath” node?

I suspect that part of your issue is getting the JSON data into multiple rows or columns, which is easy to do in the “XPath” node.
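As a rough illustration of why XPath helps here, the sketch below uses Python's standard `xml.etree.ElementTree` rather than KNIME. The XML is a hand-written, hypothetical conversion of the response; it is not the exact output of the “JSON To XML” node, and the element names and values are assumptions. The point is only that one path expression matching repeated elements yields one row per match.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML standing in for a converted response.
xml_text = """
<response>
  <outputs>
    <job_id>job-1</job_id>
    <outputs>
      <models><name>Weibull</name><model_index>7</model_index></models>
      <models><name>Logistic</name><model_index>8</model_index></models>
    </outputs>
  </outputs>
</response>
""".strip()

root = ET.fromstring(xml_text)

# Single-valued path: read the job ID once.
job_id = root.findtext("./outputs/job_id")

# Multi-valued path: every matching <models> element becomes one row.
model_rows = [
    {
        "job_id": job_id,
        "name": m.findtext("name"),
        "model_index": int(m.findtext("model_index")),
    }
    for m in root.findall("./outputs/outputs/models")
]

for row in model_rows:
    print(row)
```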

Best,

Armin

Hello @armingrudd:

Thanks for your reply. Here’s a sample of the JSON I’m working with (I’ve removed some data so you can see the structure; each array has multiple values):

{
  "id" : "9319cfc8-e0c9-4164-905f-fc8a0303459e",
  "errors" : "",
  "outputs" : {
    "job_id" : "337f1b25-4687-4adf-97de-7e487b9cfb83",
    "outputs" : [ {
      "models" : [ {
        "name" : "Weibull",
        "dfile" : "Weibull\nBMDS_Model_Run\n/temp/bmd/datafile.dax\n/temp/bmd/output.out\n3\n500 1e-08 1e-08 0 1 1 0 0\n0.1 0 0.95\n-9999 -9999 -9999\n0\n-9999 -9999 -9999\nDose Incidence NEGATIVE_RESPONSE\n0.000000 1 59\n5.000000 2 58\n10.000000 0 58",
        "output" : {
          "df" : -999,
          "AIC" : -999,
          "BMD" : -999,
          "CSF" : -999,
          "BMDL" : -999,
          "BMDU" : -999,
          "Chi2" : -999,
          "fit_dose" : ,
          "fit_size" : ,
          "p_value4" : -999,
          "warnings" : ,
          "model_date" : -999,
          "model_name" : "Weibull",
          "fit_est_prob" : ,
          "fit_observed" : ,
          "fit_estimated" : ,
          "fit_residuals" : ,
          "model_version" : -999,
          "execution_duration" : 0.125,
          "execution_end_time" : "2019-02-06T13:33:06.122333",
          "execution_start_time" : "2019-02-06T13:33:05.997333",
          "residual_of_interest" : -999
        },
        "stderr" : "",
        "stdout" : "",
        "outfile" : "",
        "logic_bin" : 2,
        "has_output" : true,
        "model_name" : "Weibull",
        "logic_notes" : {
          "0" : ,
          "1" : [ "Residual of Interest does not exist" ],
          "2" : [ "BMD does not exist", "BMDL does not exist", "AIC does not exist" ]
        },
        "model_index" : 7,
        "recommended" : false,
        "model_version" : 2.17,
        "execution_halted" : false,
        "recommended_variable" : null
      } ]
  },
  "is_finished" : true,
  "has_errors" : false,
  "created" : "2019-02-06T13:33:06.258552-05:00",
  "started" : "2019-02-06T13:33:06.348990-05:00",
  "ended" : "2019-02-06T13:33:07.533578-05:00"
}

Thanks!

Could you provide a JSON file that can actually be read (that is, one that parses as valid JSON)?

And did you try to convert it to XML?

@armingrudd I’m not sure about the entire file – let me get back to you on that.

Re: converting it to XML. I have been able to do that, but I’m left in the same situation: I can’t get the array of data objects I want to iterate through. Unless I’m missing something?

Thank you for your reply!

Which items do you want? Did you try to collect the repeated items into multiple rows?

Can you show me the path you are applying? (I will be able to help more if I have a readable sample of your JSON file.)

As Armin indicated, having a readable sample would help here.

I’m parsing a complex JSON data structure using a hierarchical approach: split the structure into the next level of JSON data using the JSON Path node, process each of these separate chunks in parallel (repeating the split as required), and join it all up at the end.
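The split-then-join idea can be sketched in plain Python as follows. This is not a KNIME workflow; the sample values, the `result_idx` join key, and the table names are all assumptions made for illustration. Each level is split off into its own small table that keeps a key, and the tables are joined on that key at the end.

```python
# Hypothetical, trimmed response with the nesting described in this thread.
response = {
    "outputs": {
        "job_id": "job-1",
        "outputs": [
            {"models": [{"name": "Weibull", "output": {"BMD": -999}},
                        {"name": "Logistic", "output": {"BMD": 1.5}}]},
            {"models": [{"name": "Weibull", "output": {"BMD": 2.0}}]},
        ],
    }
}

# Level 1: split job-level fields from the array of results.
job_table = {"job_id": response["outputs"]["job_id"]}
result_chunks = response["outputs"]["outputs"]

# Level 2: one row per result, keyed by its position in the array.
result_table = [
    {"result_idx": i, "n_models": len(r["models"])}
    for i, r in enumerate(result_chunks)
]

# Level 3: one row per model, keeping the parent key for the join.
model_table = [
    {"result_idx": i, "model_name": m["name"], "bmd": m["output"]["BMD"]}
    for i, r in enumerate(result_chunks)
    for m in r["models"]
]

# Join: attach result-level and job-level columns to every model row.
results_by_idx = {r["result_idx"]: r for r in result_table}
joined = [
    {**job_table, **results_by_idx[m["result_idx"]], **m}
    for m in model_table
]

for row in joined:
    print(row)
```

Keeping an explicit key at each split is what makes the final join straightforward, whatever tool performs it.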

A small sample would make it possible to construct a workflow for you.

cheers,

Andrew
