Is there any sample workflow available, which performs the operation of inserting the data in IBM DB2 table from the table present in hadoop database.I am first time user of this KNIME platform so exploring some options to leverage KNIME for DB2 related operations.

@ankur welcome to the KNIME forum.

I think in your case you could bring data from hapoop to KNIME and the load it into DB2. For database operations KNIME has the DB extensions that would allow you to access and manipulate various databases.

If there is no direct connection between the DBs you would have to transfer the data thru KNIME which may need some performance. If we are talking about large datasets also the speed of the network connection might be an issue.

Maybe you could tell us more about your setup and what you want to do.

KNIME Database Extension Guide

https://docs.knime.com/2019-06/db_extension_guide/index.html

Links to different sample databases

Basically i have an use case where we need to export the data from hadoop to IBM DB2 linux database.This data transfer from hadoop to DB2 will be ongoing.On ongoing basis we need to export just the changed data ,may be here we can use DB Merge Extension ,but before that I was just trying to explore DB extensions like DB INSERT and DB delete since tis is my first KNIME workflow.

For this I have downloaded the JDBC driver from IBM website ,and had successfully made the connection with DB2.Now while I was going thru one of the property of DB Insert it says

“All selected column names need to exactly match the column names within the database table.”

Does the above statement means ,that do we need to consider Case sensitivity of the column names as well .

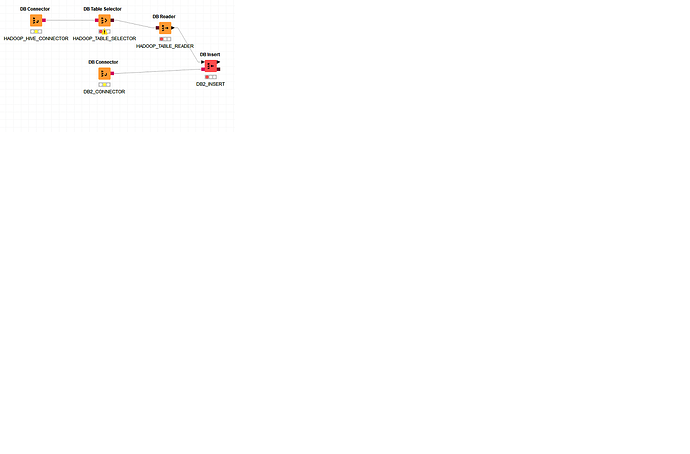

I have just created a sample workflow to pull data from one of the Hive table and insert that in one of the DB2 database table.Can someone please have a look at it ,and let me know that am I going in correct direction to cater my use case.As of now I am just trying to use DBINSERT extension and getting error,for the column which already exists.Only diffrence which i see is hadoop have column names with smaller case where as DB2 table have column names with upper case.

@ankur, KNIME in general is a case sensitive. You can also look at

if you need to update values in future transactions.

If KNIME in general is case sensitive, so could you please let me know ,that how can i transform the column names to UPPER case on the fly?

you could use one of the rename nodes. In general for your scenario you would need some planning. How to know which data has already been transferred. You might need an intermediate table to store such information or check from the target table the IDs or dates that have been transferred.

See recommendation here

How to convert string value to date .The string column is having value in format YYYY-MM-DD HH:mm:ss

node will do it.

is there any way that let me make the DB connection dynamically to diffrenet databases without me specifying by going in the configuring setion of DBCONNECTION.Basically the ask is to cater the use case, where I need to create a workflow, which is capable of exporting data from hadoop to various flavours of database like oracle,DB2,SQL server etc and perform operations like Insert,Update and Delete on them.The connection and credential should be passed on the fly, and connection can be made to various databases based on the configuration passed and accordingly operations can be performed.

I have used String to Date&Time (legacy) instead of String Date&Time as I was getting error.While I was using String TO Date&Time(legacy) I found at a time I can just select one column that means if I have more than one column where I need to convert String to Date I had to use multiple nodes to cater this.

Hi there @ankur,

welcome to KNIME Community!

See you have lot of questions so let me try to give you some answers, pointers and ideas.

- your first workflow - two connectors, reading from one DB and inserting into another - seems fine

- you should use String to Date&Time (not legacy) node to convert to Date&Time format. Sometimes new node is harder to configure (depending on format) but can convert multiple columns at once and offers more possibilities - there is a lot of topics so check Forum search

- your use case (transferring data from one source to another) is common and believe KNIME is well suited for it

- regarding dynamic connections/data transfer - this can be done using Configuration and Switch nodes together with Components but think it requires a new topic

Feel free to explore database workflow examples on KNIME Hub and happy KNIMEing!

Br,

Ivan

Thanks Ivan for your response  .Will create New topic for Dynamic connection to start the discussion as I continue to explore KNIME for this use case.

.Will create New topic for Dynamic connection to start the discussion as I continue to explore KNIME for this use case.

For String to Date&Time I did explored the available options , but could not find much so at last used the String Date&Time(legacy) to at least move ahead with the the issue which I was facing.Will definitely try to see some more topics around String to Date&Time in the Forum.

This topic was automatically closed 7 days after the last reply. New replies are no longer allowed.