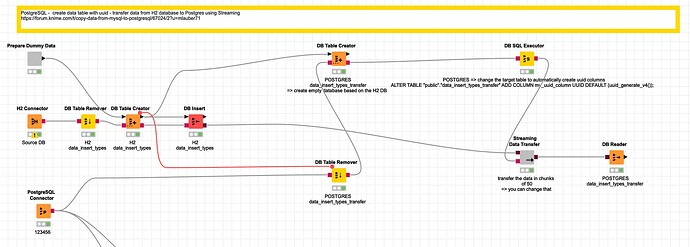

@mgiammarco here is an example. You would have to change the DB nodes. Your initial database is in the H2 DB with some data in: “data_insert_types”.

Based on this table an empty table “data_insert_types_transfer” is being created in the Postgres DB. Then this empty table gets adapted so that it will automatically produce an additional column called “my_uuid_column” with this command:

ALTER TABLE "public"."data_insert_types_transfer" ADD COLUMN my_uuid_column UUID DEFAULT (uuid_generate_v4());

This column now automatically will have uuids (you will not have to fill it yourself). Please note you might have to activate/create this function once in your Postgres DB:

CREATE EXTENSION IF NOT EXISTS "uuid-ossp";

Then you can just write the data from H2 thru KNIME into the Postgres DB.

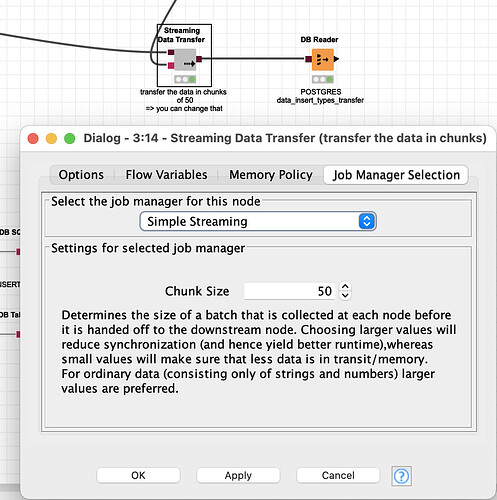

In the component Streaming will be used in order to speed up the process (you can experiment with the chunk size and also do additional manipulations):

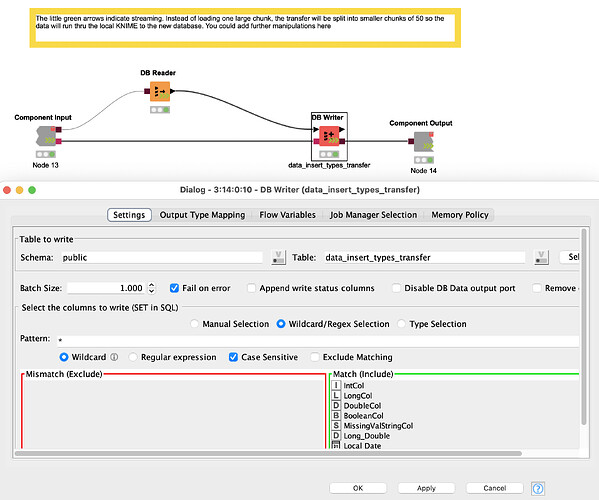

The data will run thru the (local) KNIME in small chunks and so speed up the whole process:

Since the databases will be dynamically created you could work this example into a loop just substituting the table names.

kn_forum_67024_db_postgres_transfer_data_uuid.knwf (85.1 KB)