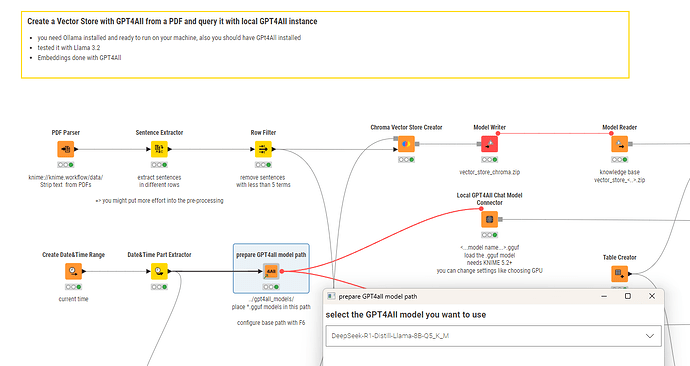

@laval yes, you should be able to use that, for example with GPT4All and suitable .gguf models:

Using a vector store is optional. It should also work with just a suitable prompt:

(Just make sure the folder structure is right where you place the .gguf files. Maybe check the whole workflow group.)
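If you want to double-check the folder before pointing the workflow at it, a small sketch like this lists the .gguf files it contains. The function name `find_gguf_models` and the folder you pass in are hypothetical, not something taken from the workflow itself:

```python
from pathlib import Path


def find_gguf_models(model_dir):
    """Return all .gguf files found below model_dir, sorted by path."""
    root = Path(model_dir)
    if not root.is_dir():
        # Fail early with a readable message if the folder is missing
        raise FileNotFoundError(f"Model folder not found: {root}")
    # rglob also searches sub-folders, in case models are grouped there
    return sorted(p for p in root.rglob("*.gguf") if p.is_file())
```

An empty result would suggest the models are sitting in a different folder than the one the workflow expects.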

Claude.ai says about these .gguf models:

- Q2/Q3 versions are best for limited memory but may have some quality loss

- Q4/Q5 offer a good balance of size and quality

- Q6/Q8 versions maintain better quality but use more memory

- F16 is the highest quality but largest size
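To get a rough feeling for what that means in memory, here is a back-of-the-envelope sketch. It assumes the nominal bit width per weight (Q4 = 4 bits and so on) and an 8-billion-parameter model. Real .gguf files come out somewhat larger because they also store scaling data, and running the model needs extra memory for the context, so treat the numbers only as a lower bound:

```python
# Nominal bits per weight for each quantization level (an assumption;
# actual GGUF quantization types use slightly more bits per weight).
NOMINAL_BITS = {"Q2": 2, "Q3": 3, "Q4": 4, "Q5": 5, "Q6": 6, "Q8": 8, "F16": 16}


def approx_size_gb(n_params, quant):
    """Rough size of the weights in GB: parameters * bits / 8 bytes."""
    return n_params * NOMINAL_BITS[quant] / 8 / 1e9


# Example: an 8B-parameter model at every level from the list above
for quant in NOMINAL_BITS:
    print(f"{quant:>3}: ~{approx_size_gb(8e9, quant):.1f} GB")
```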

Ollama should also be able to handle such models. You can download them first and then use them in KNIME.

`ollama run deepseek-r1:8b`
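Once the model has been pulled, you can also test it outside KNIME through Ollama's local REST API. This is only a minimal sketch: it assumes Ollama is running on its default port 11434, and the helper names `build_request` and `ask_ollama` are made up for illustration:

```python
import json
import urllib.request

# Default local endpoint of a running Ollama server (assumed unchanged)
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt, url=OLLAMA_URL):
    """Build a non-streaming generate request for the given model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(model, prompt, timeout=300):
    """Send the prompt to the local server and return the generated text."""
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Calling `ask_ollama("deepseek-r1:8b", "Say hello")` should then return the model's answer as a string, provided the server is up and the model is available locally.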

Whether you like the results or trust the models with your data is up to you, although everything should run on your local machine …