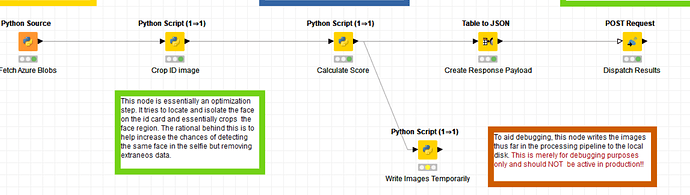

I have created a workflow that takes images from an Azure blob, runs them through two Python scripts, and then sends the results off as JSON (see attached).

I am running it on an AWS t2.large EC2 instance. It says it's a KNIME Server 4.8.2 (ubuntu-18.04__KNIME_Server_small_4.8.2-7c82ba77563d8a29d66283b686df-5deef161-954a-4b84-9285-e76ab409e2e7-ami-076b35bcbcd9036da.4 (ami-08d0461ef2ab9d563)).

My version of KNIME Analytics Platform (AP) is 4.0.0.

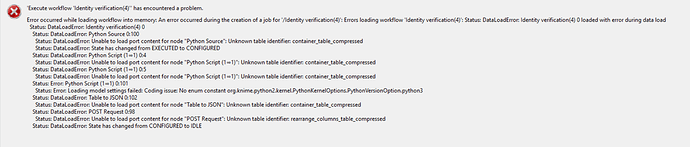

When I try to run the workflow on the server, I get the attached errors. Any ideas on how to fix this?

It looks like the Python script is trying to read a table called container_table_compressed, which doesn't seem to exist in the input. You may need to check the table name used in the Python script.

One other thing: the Azure Blob Storage nodes are not installed on the AWS instances by default. If you install them, you can use the Azure Blob Storage nodes to access the files, which is sometimes easier than using Python.

https://hub.knime.com/knime/space/Examples/01_Data_Access/06_ZIP_and_Remote_Files/02_Azure_Blob_Store_Remote_File_Example

Best,

Jon

Hi,

Could it be that the workflow you've uploaded is (partially) executed?

The reason for the error is that with KNIME Analytics Platform 4.0 we introduced a new way of storing the tables available at the output ports. This brought a significant speedup; however, the new table storage format is not backwards compatible. Because you execute the workflow on KNIME Server 4.8.2, it runs on an executor of version 3.7.2, which cannot handle the new format.
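The compatibility rule described above can be expressed as a simple version check. This is a minimal sketch, assuming that a workflow can only be loaded safely by an executor whose version is the same as or newer than the KNIME Analytics Platform version the workflow was saved with. `parse_version` and `executor_can_load` are hypothetical helpers for illustration, not part of any KNIME API.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Turn a version string like '4.0' or '3.7.2' into (major, minor, patch)."""
    parts = [int(p) for p in version.split(".")]
    parts += [0] * (3 - len(parts))  # pad '4.0' to (4, 0, 0)
    return tuple(parts[:3])


def executor_can_load(saved_with: str, executor: str) -> bool:
    """Hypothetical rule (assumption): an executor can only read workflows
    saved by the same or an older KNIME Analytics Platform version."""
    return parse_version(executor) >= parse_version(saved_with)


# Workflow saved with AP 4.0.0, executed on the 3.7.2 executor of Server 4.8.2
print(executor_can_load("4.0.0", "3.7.2"))  # False
# Workflow recreated in AP 3.7.2
print(executor_can_load("3.7.2", "3.7.2"))  # True
```

Tuples compare element by element, so (3, 7, 2) is ordered before (4, 0, 0), which matches the direction of the incompatibility described in this thread.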

Cheers,

Moritz

Thanks for the speedy responses,

One other thing worth noting: the whole workflow runs fine when I run it locally, so I don't think naming is the issue. Cheers Jon, I will take a look at those nodes.

Moritz, can I update the executor to a version that can handle the new format?

Thanks

Hi,

A guide to updating your KNIME Server can be found here:

https://docs.knime.com/2019-06/server_update_guide/index.html#update

Before doing that, however, you can try resetting the workflow completely. If you need it to be partially executed, execute it in KNIME Analytics Platform 3.7.2 and then upload it to your server. This should also ensure that your workflow behaves the same on the server as it does locally, since the executor and KNIME Analytics Platform would then have the same version.

Cheers,

Moritz

Hi Moritz,

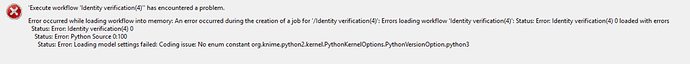

Great, I reset the workflow and re-uploaded it as you said. A lot of the errors went away, but I am still left with the ones attached. Do you think I need to update the server to fix the remaining ones?

Thanks a lot,

Ryan

Hi @ryanb123,

We made some changes to the Python integration. As a result, Python nodes created with KNIME AP 4.0 cannot be loaded correctly by earlier versions of KNIME Analytics Platform.

Best

Benjamin

Hi @bwilhelm,

So downgrading KNIME AP to 3.7.2, recreating the workflow, and re-uploading it should work?

Thanks,

Ryan

Hi,

Yes, exactly, that should work. Sorry for the inconvenience.

Cheers,

Moritz

Thanks @moritz.heine, will do that now.