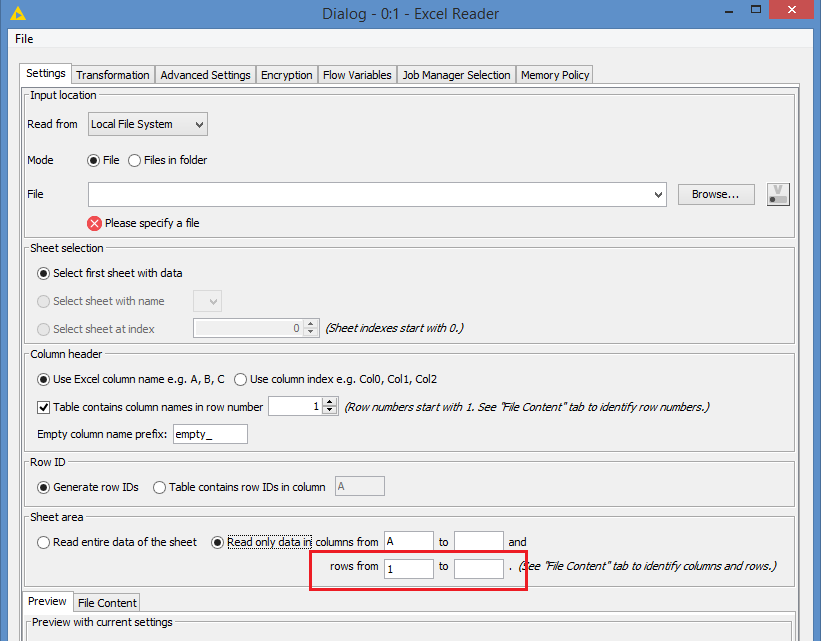

Hi @Fovas, you can try reading the file in batches. The Excel Reader lets you read a specific range of rows:

So you could read, let's say, 40k rows at a time, process them, and write the result to your new file, then repeat the process for the next 40k rows and append them to the same file.
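To make the read/process/append pattern concrete, here is a minimal plain-Python sketch of the same idea outside KNIME. Everything in it is hypothetical: `SOURCE_ROWS` stands in for the spreadsheet, `read_rows` plays the role of an Excel Reader limited to a row range, and `process` is a placeholder for your own processing. The point it illustrates is that the header is written once and every later batch is appended to the same output.

```python
import csv
import io

# Hypothetical stand-in for the spreadsheet: 100,000 data rows plus a header.
HEADER = ["id", "value"]
SOURCE_ROWS = [[i, i * 2] for i in range(1, 100_001)]

BATCH_SIZE = 40_000  # "let's say 40k rows at a time"


def read_rows(start, end):
    """Hypothetical reader: return data rows start..end (1-based, inclusive)."""
    return SOURCE_ROWS[start - 1:end]


def process(rows):
    """Placeholder for your own processing step (here: add 1 to 'value')."""
    return [[row_id, value + 1] for row_id, value in rows]


def run_batches(out):
    """Read, process and append one batch at a time until no rows are left."""
    writer = csv.writer(out)
    start = 1
    first_batch = True
    while True:
        batch = read_rows(start, start + BATCH_SIZE - 1)
        if not batch:
            break  # past the last row: stop looping
        if first_batch:
            writer.writerow(HEADER)  # write the header only once
            first_batch = False
        writer.writerows(process(batch))  # append this batch to the output
        start += BATCH_SIZE


out = io.StringIO()  # in a real workflow this would be the output file
run_batches(out)
```

Here the loop stops as soon as a read returns no rows, so you don't need to know the total row count up front. Only one batch is held in memory at a time.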

You can use a Loop to do this.

You can check this thread I replied to earlier, which shows how to read a file partially and how to implement the loop:

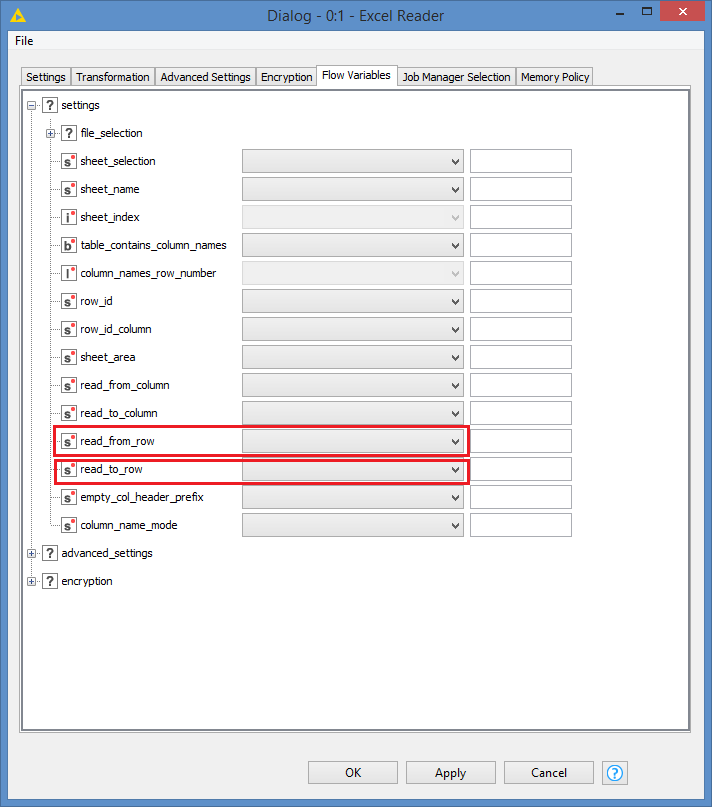

EDIT: Just to add, you would calculate the row range dynamically within the loop and assign the start and end rows as flow variables to these two settings in the Excel Reader:
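The range calculation itself is simple arithmetic on the loop's iteration counter. Below is a small Python sketch of it, assuming a 0-based iteration counter and 1-based, inclusive row numbers. The function names `row_range` and `iteration_count` are hypothetical, and in a workflow you would express the same formula in whatever node computes your flow variables.

```python
import math


def row_range(iteration, batch_size, first_row=1, last_row=None):
    """Return (start, end) rows, 1-based and inclusive, for a 0-based iteration."""
    start = first_row + iteration * batch_size
    end = start + batch_size - 1
    if last_row is not None:
        end = min(end, last_row)  # the final batch may be shorter than batch_size
    return start, end


def iteration_count(total_rows, batch_size):
    """Number of loop iterations needed to cover total_rows."""
    return math.ceil(total_rows / batch_size)


total_rows = 100_000
batch_size = 40_000
for i in range(iteration_count(total_rows, batch_size)):
    print(i, row_range(i, batch_size, last_row=total_rows))
```

With 100,000 rows and 40k per batch this gives three iterations: rows 1 to 40000, 40001 to 80000, and 80001 to 100000. If row 1 of the sheet holds the column headers, pass `first_row=2` (and shift `last_row` accordingly) so the data ranges start below it.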