And inside the SQL Executor node, you need to load the data:

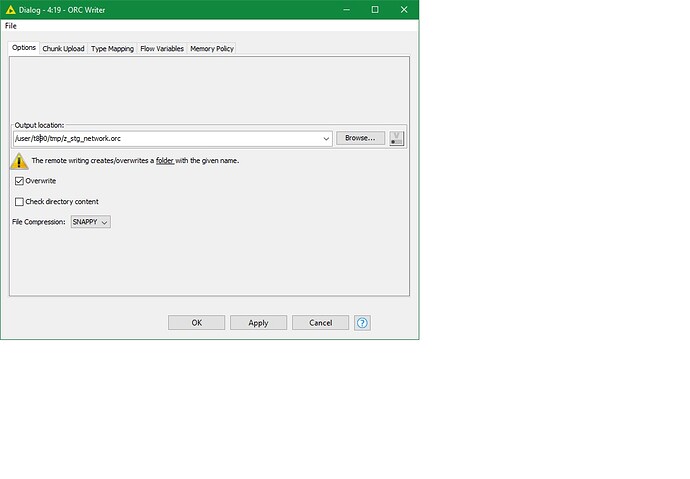

`LOAD DATA INPATH '/user/t897420/tmp/z_stg_network.orc' INTO TABLE schema.table;`

Notice that the INPATH is the same path your ORC Writer node writes to.
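One thing that can bite you: Hive's `LOAD DATA` does not convert anything. It just moves the file into the table's storage location, so the target table has to be declared as ORC already. Here's a rough sketch of the HiveQL, assuming the table doesn't exist yet. The column names and types are made up; swap in whatever your ORC Writer actually writes. `schema.table` is just the placeholder from above, so use your real database and table names.

```sql
-- Hypothetical columns: replace with the columns/types your ORC Writer node writes.
-- LOAD DATA only moves the file, so the table must be stored as ORC to read it.
CREATE TABLE IF NOT EXISTS schema.table (
  id   BIGINT,
  name STRING
)
STORED AS ORC;

-- INPATH without LOCAL points at HDFS. Hive MOVES the file into the table,
-- so it will be gone from /user/t897420/tmp/ after a successful load.
LOAD DATA INPATH '/user/t897420/tmp/z_stg_network.orc' INTO TABLE schema.table;
```

If you want to replace the table's contents instead of appending, Hive also accepts `LOAD DATA INPATH '...' OVERWRITE INTO TABLE ...`.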

I already knew this much from running it through PuTTY, but how to build it out in KNIME... NOOOOOOOO CLUE. The documentation is literally either (a) nonexistent or (b) just runs people around in circles.

I hope this shaves HOURS off someone's research time.

cheers