@hmfa I tried an approach with the help of ChatGPT and Python:

The workflow first detects color-saturated regions on a stamp album page to isolate each stamp together with its caption, and saves these blocks as “outer” crops.
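A minimal pure-NumPy sketch of this first stage might look like the code below. It assumes the page is already loaded as an RGB array (e.g. via Pillow). It labels 4-connected regions whose HSV-style saturation exceeds a threshold, then pads each bounding box downwards so the caption ends up inside the “outer” crop. The names `saturated_boxes` and `outer_crops`, and the values of `sat_thresh`, `min_area` and `caption_pad`, are hypothetical and would need tuning on real scans. This is not the script ChatGPT produced.

```python
from collections import deque

import numpy as np


def saturation(rgb: np.ndarray) -> np.ndarray:
    """HSV-style saturation in [0, 1] for an RGB image."""
    rgb = rgb.astype(np.float32)
    mx = rgb.max(axis=2)
    mn = rgb.min(axis=2)
    # Guard against division by zero on pure black pixels
    return np.where(mx > 0, (mx - mn) / np.maximum(mx, 1e-6), 0.0)


def saturated_boxes(rgb, sat_thresh=0.25, min_area=400):
    """Bounding boxes (x0, y0, x1, y1), end-exclusive, of 4-connected saturated regions."""
    mask = saturation(rgb) > sat_thresh
    h, w = mask.shape
    seen = np.zeros_like(mask)
    boxes = []
    for sy, sx in np.argwhere(mask):
        if seen[sy, sx]:
            continue
        # Breadth-first flood fill from this seed pixel
        seen[sy, sx] = True
        queue = deque([(sy, sx)])
        y0, y1, x0, x1, count = sy, sy, sx, sx, 0
        while queue:
            y, x = queue.popleft()
            count += 1
            y0, y1 = min(y0, y), max(y1, y)
            x0, x1 = min(x0, x), max(x1, x)
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        # Drop small specks of colour (dust, scan noise)
        if count >= min_area:
            boxes.append((int(x0), int(y0), int(x1) + 1, int(y1) + 1))
    return boxes


def outer_crops(rgb, caption_pad=40, **kwargs):
    """Cut each saturated region plus `caption_pad` extra rows below it (hypothetical caption height)."""
    h = rgb.shape[0]
    return [rgb[y0:min(h, y1 + caption_pad), x0:x1]
            for (x0, y0, x1, y1) in saturated_boxes(rgb, **kwargs)]
```

On full-resolution scans the Python flood fill will be slow; OpenCV's connected-component or contour functions would do the same labelling much faster.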

Then, for each crop, it analyzes the caption text below the stamp to estimate the correct horizontal boundaries, and runs edge-based rectangle detection within that region to extract the precise inner stamp (image plus perforation).
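The second stage could be approximated as shown below, working on a grayscale version of one outer crop. As an assumption, the caption occupies the bottom `caption_rows` rows of the crop, and the horizontal extent of its dark pixels (plus a margin) becomes the search window. Instead of contour-based rectangle detection, this sketch swaps in a simpler technique: it projects horizontal and vertical gradient magnitudes onto the axes and takes the outermost strong edges as the stamp rectangle. The function names and the thresholds `ink_thresh`, `margin` and `frac` are hypothetical.

```python
import numpy as np


def caption_x_range(gray, caption_rows, ink_thresh=100, margin=10):
    """Estimate the horizontal search window from dark caption pixels in the bottom band."""
    band = gray[gray.shape[0] - caption_rows:, :]
    ink_cols = np.flatnonzero((band < ink_thresh).any(axis=0))
    if ink_cols.size == 0:
        return 0, gray.shape[1]  # no caption found: search the full width
    return (max(0, int(ink_cols[0]) - margin),
            min(gray.shape[1], int(ink_cols[-1]) + 1 + margin))


def inner_box(gray, x0, x1, caption_rows, frac=0.5):
    """Locate the stamp rectangle above the caption from edge-strength projections."""
    region = gray[:gray.shape[0] - caption_rows, x0:x1].astype(np.float32)
    gx = np.abs(np.diff(region, axis=1))  # gx[:, i]: edge between columns i and i+1
    gy = np.abs(np.diff(region, axis=0))  # gy[i, :]: edge between rows i and i+1
    col_strength = gx.sum(axis=0)
    row_strength = gy.sum(axis=1)
    if col_strength.max() == 0 or row_strength.max() == 0:
        return x0, 0, x1, region.shape[0]  # flat region: no edges to snap to
    # Keep only edges at least `frac` as strong as the strongest one
    cols = np.flatnonzero(col_strength >= frac * col_strength.max())
    rows = np.flatnonzero(row_strength >= frac * row_strength.max())
    # Outermost strong edges form the rectangle; +1 turns an edge index into a pixel index
    return (x0 + int(cols[0]) + 1, int(rows[0]) + 1,
            x0 + int(cols[-1]) + 1, int(rows[-1]) + 1)
```

Restricting the search to the caption's width is what keeps a neighbouring stamp's edges out of the projections.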

This two-stage, caption-guided method keeps each stamp tightly framed while avoiding overlaps with neighboring stamps.

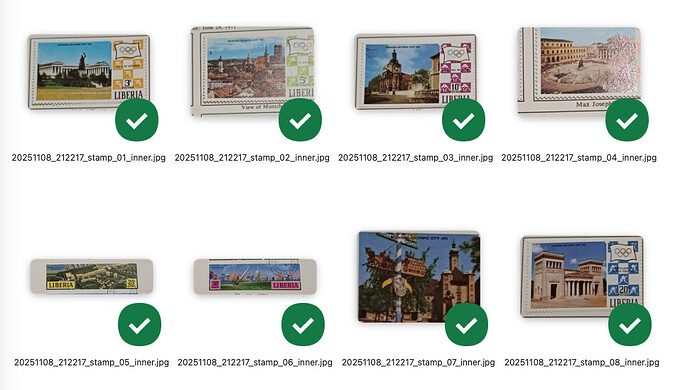

I would say the results of the “inner” processing look promising, considering the relatively poor scan. This approach might also serve as a future idea for separating shares in images. Another option would be to run the images through an additional step that only tries to remove the borders.
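Such an extra border-removal pass could start as simply as trimming uniform margins. This sketch assumes the border colour is the median of the outermost pixels and that anything within `tol` grey levels of it counts as background; `trim_border` and `tol` are hypothetical. It would not remove perforation teeth or uneven shading.

```python
import numpy as np


def trim_border(gray, tol=12):
    """Crop away outer rows/columns whose pixels all lie within `tol` of the border colour."""
    # Estimate the background from the four outermost edges of the image
    edge = np.concatenate([gray[0, :], gray[-1, :], gray[:, 0], gray[:, -1]])
    bg = np.median(edge)
    content = np.abs(gray.astype(np.int16) - bg) > tol
    rows = np.flatnonzero(content.any(axis=1))
    cols = np.flatnonzero(content.any(axis=0))
    if rows.size == 0:
        return gray  # nothing differs from the border: leave the image unchanged
    return gray[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
```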

One advantage: this process is relatively fast.

Another idea is to use a local LLM with multimodal capabilities, such as Mistral. One could test whether this works with the KNIME nodes or whether a Python approach accessing Ollama is better suited.
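For the Python route, Ollama exposes a local REST endpoint (`/api/generate`) that accepts base64-encoded images for vision-capable models. Below is a minimal standard-library sketch, assuming Ollama runs on its default port and a multimodal model has been pulled. The model tag `mistral-small3.1` is an assumption, as are the helper names `build_payload` and `ask_ollama`.

```python
import base64
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama endpoint


def build_payload(image_bytes: bytes, prompt: str,
                  model: str = "mistral-small3.1") -> dict:  # assumed vision-capable model tag
    """Request body for /api/generate with one image attached."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,  # ask for a single JSON reply instead of a token stream
    }


def ask_ollama(image_bytes: bytes, prompt: str, model: str = "mistral-small3.1",
               url: str = OLLAMA_URL, timeout: float = 300) -> str:
    """Send one image plus prompt to a local Ollama server and return the model's text."""
    data = json.dumps(build_payload(image_bytes, prompt, model)).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

For example, `ask_ollama(open("crop_01.png", "rb").read(), "Give the pixel bounding box of the stamp in this image.")` would send one crop; the file name and prompt are illustrative. Whether the model returns usable coordinates would have to be tested.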