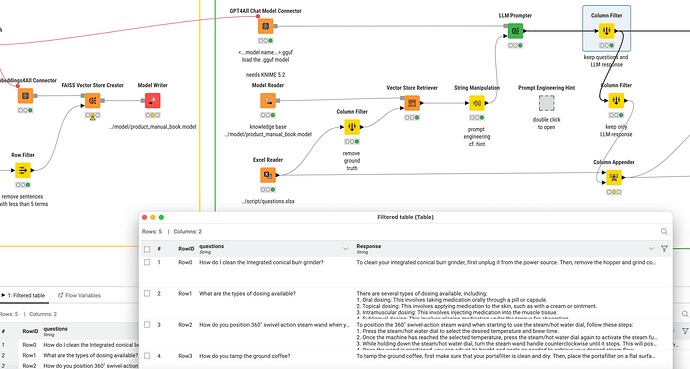

@MoLa_Data I created a workflow based on an example from the “KNIME AI Learnathon” that uses local GPT4All models. Starting from that example, I was able to create a (local) Vector Store from the coffee machine PDF manual and ask it questions with the help of GPT4All (you might have to load the whole workflow group):

The chatbot does not work (yet?). The workflow uses KNIME 5.2 (nightly build).

The first results look promising when querying a 24-page coffee machine manual with a local LLM (so no data is sent to OpenAI …).
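For anyone wondering what the Vector Store step does conceptually, here is a minimal sketch in plain Python. It is not what the KNIME nodes or GPT4All do internally: the `embed` function is a toy word-count stand-in for a real embedding model, `ToyVectorStore` is a hypothetical name, and the manual chunks are made up for illustration. The idea is only that the document is split into chunks, each chunk becomes a vector, and the question is matched against those vectors to find the most relevant passage to hand to the LLM.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps each chunk next to its vector."""

    def __init__(self, chunks):
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, question, k=1):
        """Return the k chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


# Made-up chunks, not taken from the actual coffee machine manual.
chunks = [
    "Descale the machine every three months using the descaling program.",
    "Fill the water tank with fresh cold water before brewing.",
    "Empty the drip tray when the red indicator appears.",
]

store = ToyVectorStore(chunks)
top = store.search("How often should I descale the machine?", k=1)
print(top)
```

In the real workflow the retrieved chunks would then be passed to the local model together with the question, so the model only has to answer from the supplied text.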

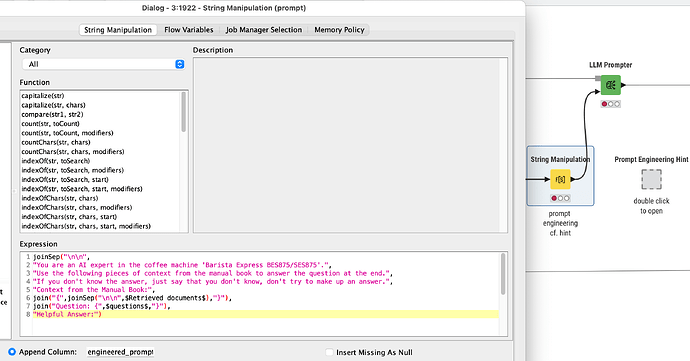

Edit: please note the suggested prompts (from the original workflow). Depending on the model, they might have to be adapted, and they might also be crucial to the results.
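To illustrate why the prompt matters, here is a rough sketch of how a prompt for this kind of setup is typically assembled from the retrieved passages and the question. The template text, `PROMPT_TEMPLATE` and `build_prompt` are my own assumptions for illustration, not the prompt from the original workflow. Keeping the template as a separate parameter makes it easy to swap in a different wording for a different model.

```python
# Hypothetical template; the wording in the original workflow may differ.
PROMPT_TEMPLATE = (
    "Use only the context below to answer the question. "
    "If the answer is not in the context, say you do not know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(context_chunks, question, template=PROMPT_TEMPLATE):
    """Join the retrieved chunks and fill them into the prompt template."""
    context = "\n---\n".join(context_chunks)
    return template.format(context=context, question=question)


prompt = build_prompt(
    ["Chunk A from the manual.", "Chunk B from the manual."],
    "How do I descale the machine?",
)
print(prompt)
```

Some local models expect their own instruction format, so a template that works with one model may need different wording or markers for another.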