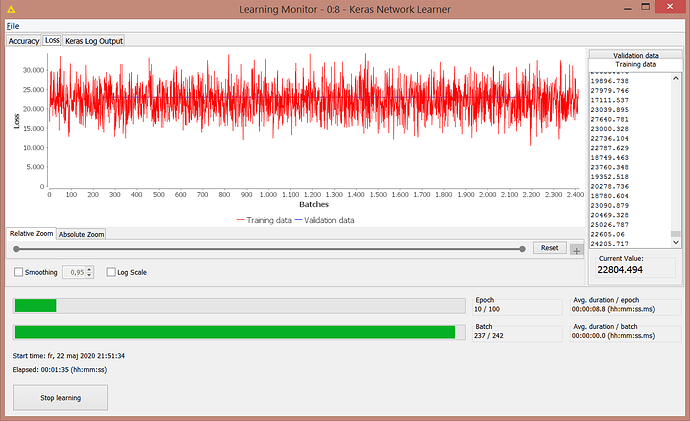

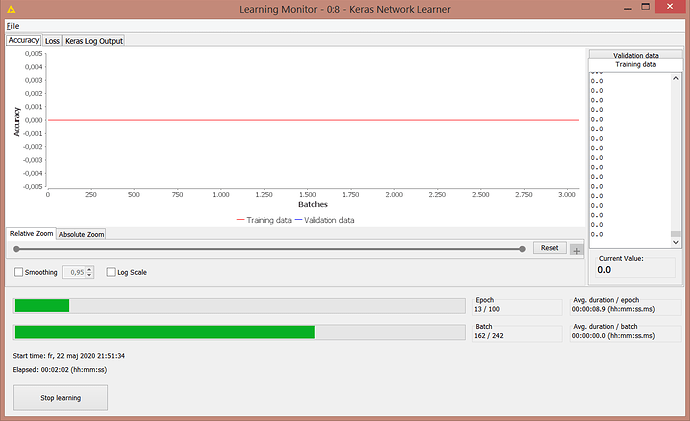

Since the volume might be hurting the model's loss and accuracy, I removed it from the model entirely for testing purposes. The result: exactly the same as before.

The loss still doesn't decrease towards 0, and the accuracy stays constant at 0.
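One possible explanation for the constant 0 accuracy, assuming the target is a continuous value (for example a price) rather than a class label: an accuracy metric counts exact matches, and a regression forecast practically never hits a real-valued target exactly. The NumPy sketch below illustrates this with hypothetical, randomly generated data. An error metric such as the mean absolute error says much more about how close the forecasts actually are.

```python
import numpy as np

def exact_match_accuracy(y_true, y_pred):
    """Fraction of predictions that are exactly equal to the target."""
    return float(np.mean(y_true == y_pred))

def mean_absolute_error(y_true, y_pred):
    """Average absolute distance between prediction and target."""
    return float(np.mean(np.abs(y_true - y_pred)))

rng = np.random.default_rng(0)
# Hypothetical continuous target, e.g. a price around 100
y_true = rng.normal(loc=100.0, scale=5.0, size=1000)
# Hypothetical forecast that is close to the target but never exactly equal
y_pred = y_true + rng.normal(scale=0.5, size=1000)

print(exact_match_accuracy(y_true, y_pred))  # 0.0, even though the forecast is good
print(mean_absolute_error(y_true, y_pred))   # small error, roughly 0.4
```

If the accuracy is computed on continuous targets, it will stay at (or near) 0 regardless of how well the network learns, so the loss or an error metric is the number to watch.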

I have also tried normalizing the data, but without any success. I suspect the problem lies in using lagged values to predict the current value, but how else would you do forecasting with an LSTM network in KNIME?
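Using a window of lagged values as the input sequence is a common way to frame forecasting for an LSTM. For comparison with the workflow, here is a minimal NumPy sketch of that framing, assuming a single univariate series and a Keras-style LSTM that takes input shaped (samples, timesteps, features). The series, the split point and `n_lags` are hypothetical. The min-max scaling is fitted on the training portion only, so values from the test period do not leak into the scaling.

```python
import numpy as np

def make_lagged_windows(series, n_lags):
    """Turn a 1-D series into (X, y): each row of X holds n_lags past values,
    and the matching y is the value that directly follows them."""
    series = np.asarray(series, dtype=float)
    X = np.stack([series[i:i + n_lags] for i in range(len(series) - n_lags)])
    y = series[n_lags:]
    # Add a feature axis: (samples, timesteps, features) with one feature
    return X[..., np.newaxis], y

def min_max_scale(values, lo, hi):
    """Scale values to [0, 1] using bounds taken from the training data."""
    return (np.asarray(values, dtype=float) - lo) / (hi - lo)

series = np.arange(1.0, 21.0)  # hypothetical series: 1, 2, ..., 20
split = 15                      # hypothetical: first 15 points are training data

# Fit the scaling bounds on the training part only
lo, hi = float(series[:split].min()), float(series[:split].max())
scaled = min_max_scale(series, lo, hi)

X, y = make_lagged_windows(scaled, n_lags=3)
print(X.shape, y.shape)  # (17, 3, 1) (17,)
```

Each row of `X` is one input sequence of three past values, and the corresponding entry of `y` is the next value. Predictions made on the scaled target have to be mapped back with `value * (hi - lo) + lo` before comparing them with the original series.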

If any kind soul could take a look at the workflow, maybe there is a KNIME newbie mistake in there?