I'm seeing the very same issue with gpt4all-falcon-newbpe-q4_0.gguf ("ERROR LLM Prompter 3:868:104 Execute failed: Error while sending a command") when trying to execute the workflow JKISeason2-20 Ángel Molina – KNIME Community Hub.

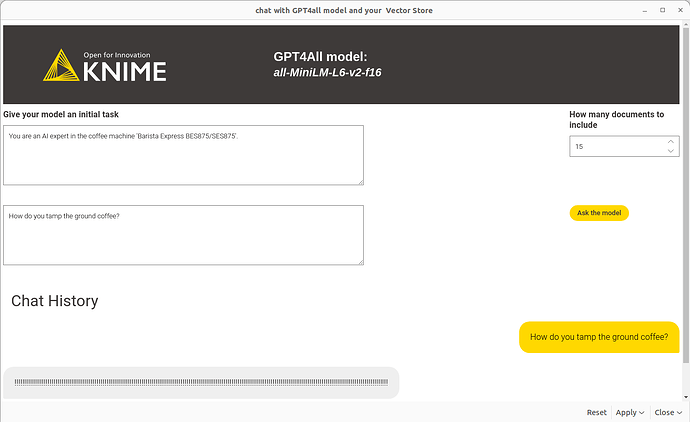

I did manage to run the workflow Creating a Local LLM Vector Store from PDFs with KNIME and GPT4All using all-MiniLM-L6-v2-f16.gguf (gpt4all-falcon-newbpe-q4_0.gguf fails here too) and the 24-page coffee machine manual, although with the following result:

Any hints, anyone?

Cheers,

-Stef