Hi @vlad28, welcome to the KNIME community!

Here you go:

Reading local models with the Spacy Model Selector node:

- Download the model you prefer to your local machine (under “Assets”, pick the file with the “.tar.gz” extension). You can find the model files in the spaCy GitHub repository: Romanian · Releases · explosion/spacy-models · GitHub (I’ve already filtered by “Romanian”). You should be able to read model versions up to 3.5.0 smoothly (but feel free to try higher versions as well).

- Make sure you have 7-Zip installed on your PC to unzip the files:

a) Download and install 7-Zip if you don’t have it installed already.

b) Right-click on the .tar.gz file you want to extract and select “7-Zip” > “Extract Here.”

c) The contents of the archive will be extracted to the same directory as the archive. The now-uncompressed .tar file should be visible in the directory listing.

d) Locate the .tar file, right-click it and select “7-Zip” > “Extract Here.”

e) The contents of the .tar file will be extracted to the same directory.

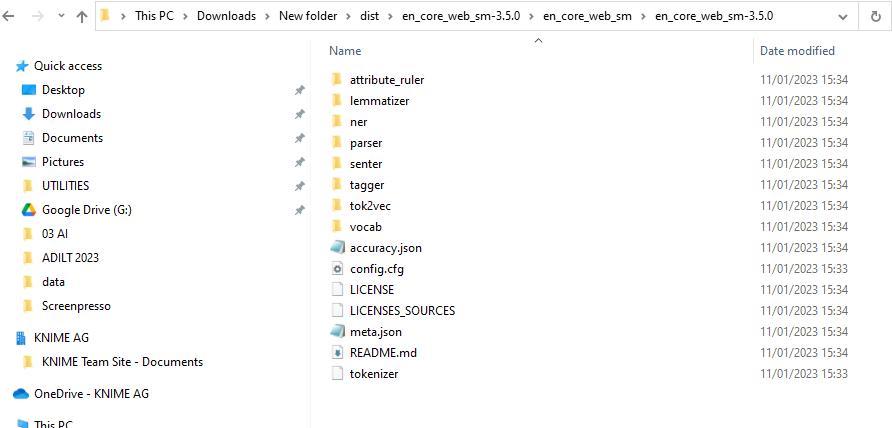

- Point the Spacy Model Selector node to the innermost model folder. In my case, for example: C:\Users\roberto.cadili\Downloads\New folder\dist\en_core_web_sm-3.5.0\en_core_web_sm\en_core_web_sm-3.5.0
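If you prefer not to use 7-Zip, the two extraction steps and the search for the right folder can be sketched in Python with the standard-library `tarfile` module, which reads a .tar.gz in a single pass. This is a minimal sketch, not part of KNIME: it assumes the folder to select is the one that contains spaCy’s `config.cfg` file (as in spaCy v3 model packages, to my knowledge), and the function name `prepare_spacy_model` and any paths are hypothetical.

```python
import tarfile
from pathlib import Path


def prepare_spacy_model(archive_path, dest_dir):
    """Extract a spaCy model .tar.gz and return the innermost model folder.

    Assumption: the folder to select is the one that contains config.cfg.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    # "r:gz" removes the gzip layer and reads the tar archive in one go,
    # replacing the two "Extract Here" steps done with 7-Zip.
    with tarfile.open(archive_path, "r:gz") as archive:
        archive.extractall(dest)
    # Search the extracted tree for config.cfg; its parent is the model folder.
    candidates = sorted(p.parent for p in dest.rglob("config.cfg"))
    if not candidates:
        raise FileNotFoundError(f"No config.cfg found under {dest}")
    return candidates[0]
```

For example, `prepare_spacy_model(r"C:\Users\me\Downloads\ro_core_news_sm-3.5.0.tar.gz", r"C:\Users\me\Downloads\spacy_models")` would return the folder to paste into the node (both paths are placeholders, and `ro_core_news_sm` is just one of the Romanian packages). On recent Python versions you may also want to pass `filter="data"` to `extractall` for safer extraction.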

Unfortunately, as you correctly pointed out, both the StanfordNLP Relation Extractor and the (even more powerful) StanfordNLP Open Information Extractor only support English.

In general, keep in mind that extracting (meaningful) relations from text is quite a hard task, and training your own extractor can be quite challenging. I’m not aware of an option for Romanian. My suggestion is to research the literature on this topic specifically for Romanian, as researchers may have developed resources that can help. Or why not try LLMs?

Using local LLMs for relation extraction in Romanian:

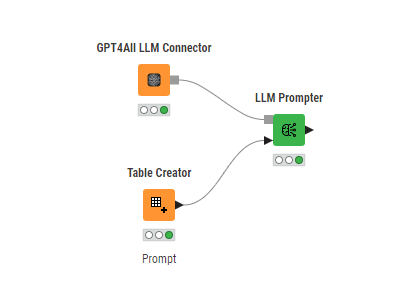

a) That’s quite simple: download your model of choice from GPT4All. To start off, I’d suggest Falcon, as it’s relatively light compared to other models (though it may perform less well).

b) Next, point the GPT4All LLM Connector node to the model file, in my case: C:\Users\roberto.cadili\AppData\Local\nomic.ai\GPT4All\gpt4all-falcon-q4_0.gguf

c) Write your prompt, for example in a Table Creator node, and pass it to the LLM Prompter node. You will have to experiment a bit to find the prompt and/or model that suits you best.
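As a starting point for the prompt, here is a minimal sketch in Python of one possible approach: ask the model to return one (subject; relation; object) triple per line, then parse the answer back into structured data. The prompt wording, the triple format and the function names `build_prompt` and `parse_triples` are my own assumptions, not something the LLM Prompter node requires; the prompt text is what you would put in the Table Creator, and the parsing could run on the response column afterwards.

```python
import re

# Hypothetical prompt template: asks for one triple per line in a fixed format,
# which makes the answer easy to parse afterwards.
PROMPT_TEMPLATE = (
    "Extract all relations from the following Romanian text.\n"
    "Return one relation per line in the format (subject; relation; object).\n"
    "Return nothing else.\n\n"
    "Text: {text}"
)

# Matches fragments such as "(Ion; locuiește la; Paris)", trimming inner spaces.
TRIPLE_PATTERN = re.compile(r"\(\s*([^;()]+?)\s*;\s*([^;()]+?)\s*;\s*([^;()]+?)\s*\)")


def build_prompt(text):
    """Fill the template with the sentence to analyse."""
    return PROMPT_TEMPLATE.format(text=text.strip())


def parse_triples(response):
    """Turn the model's answer into a list of (subject, relation, object) tuples.

    Text that does not match the requested format is ignored, since smaller
    local models do not always follow the instructions exactly.
    """
    return [match.groups() for match in TRIPLE_PATTERN.finditer(response)]
```

For example, `build_prompt("Ion locuiește la Paris și lucrează la Google.")` produces a prompt for a Romanian version of the test sentence; whether a given model actually sticks to the requested format is something you would need to check per model.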

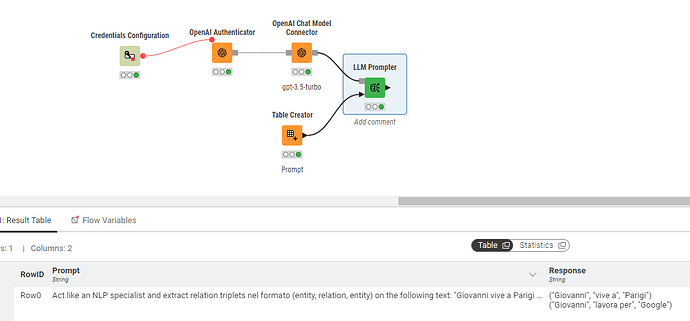

I did a couple of quick tests in Italian and the Falcon model was not great (or maybe my prompts were not good enough, which is totally possible!). I tried the same prompt with ChatGPT and it worked very well (the test sentence to extract relations from was: “John lives in Paris and works for Google”).
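To compare models and prompts a bit more systematically than by eye, one option is to score the triples each model returns against the triples you expect for a test sentence. This is a minimal sketch in Python using exact matching with precision, recall and F1; the expected triples for “John lives in Paris and works for Google” are my own hand annotation, and exact string matching (after lower-casing and collapsing whitespace) is a simplifying assumption, since real answers may phrase relations differently.

```python
def normalize(triple):
    """Lower-case each part and collapse whitespace so trivial differences don't count."""
    return tuple(" ".join(part.lower().split()) for part in triple)


def score(predicted, expected):
    """Return (precision, recall, f1) for exact triple matches."""
    pred = {normalize(t) for t in predicted}
    gold = {normalize(t) for t in expected}
    hits = len(pred & gold)
    precision = hits / len(pred) if pred else 0.0
    recall = hits / len(gold) if gold else 0.0
    # If hits > 0, both precision and recall are positive, so no division by zero.
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


# Hand-annotated expected relations for the test sentence (my assumption).
EXPECTED = [("John", "lives in", "Paris"), ("John", "works for", "Google")]
```

Feeding in the triples extracted from each model’s answer gives you numbers to compare instead of a quick visual check.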


Hope it helps!

Happy KNIMEing,

Roberto