@kowisoft in the end you will have to judge how you want to solve your problems, and if you are happy with it we are all happy. I would like to add these remarks:

- As far as I can see, your loop would not iterate over the lines one at a time. Instead it would add more lines at each step, so the number of lines processed would keep growing. If you only have small amounts of data (and if this is what you want), this might not be an issue.

- Then, as far as I understood your request, you wanted to process the chunks within a loop ‘as they are’ and be able to store more general information from within the loop. That way you could process groups of data, or each line on its own, instead of piling up lines until you reach the limit.
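
To illustrate the difference between a loop that keeps growing and one that processes chunks, here is a minimal Python sketch. It is outside of KNIME and only counts how many rows each approach touches. The ten values, the chunk size of 3 and the helper names `growing_windows` and `chunks` are made-up assumptions; `chunks` is only meant to roughly mirror what a Chunk Loop Start node does.

```python
from typing import Iterator, List


def growing_windows(rows: List[int]) -> Iterator[List[int]]:
    """Hypothetical 'filling up' loop: step k sees the first k rows."""
    for end in range(1, len(rows) + 1):
        yield rows[:end]


def chunks(rows: List[int], size: int) -> Iterator[List[int]]:
    """Hypothetical chunk loop: each row lands in exactly one chunk."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


rows = list(range(1, 11))  # ten made-up values: 1..10

# Count how many rows each approach processes in total.
grow_total = sum(len(window) for window in growing_windows(rows))
chunk_total = sum(len(chunk) for chunk in chunks(rows, 3))

# Per-chunk summary: the kind of "more general information" you could store per iteration.
chunk_sums = [sum(chunk) for chunk in chunks(rows, 3)]

print(grow_total, chunk_total)  # 55 10
print(chunk_sums)               # [6, 15, 24, 10]
```

With 10 rows the growing loop touches 55 rows in total (1 + 2 + ... + 10), while the chunked loop touches each row exactly once. The gap grows quadratically with the number of rows.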

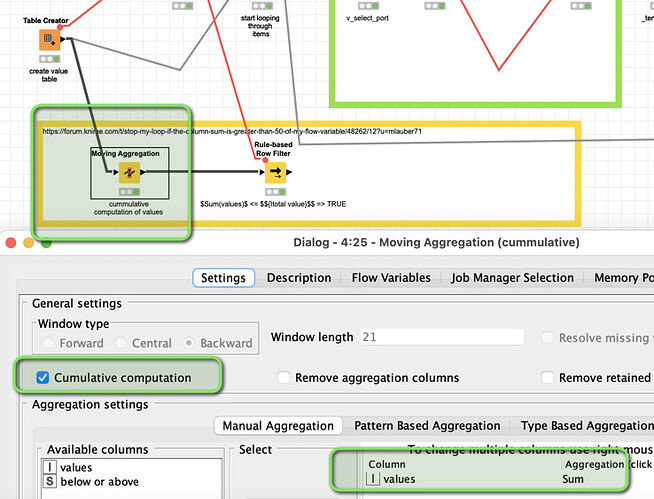

- The question is whether you could achieve the same result with a cumulative sum (Creating a column with the cumulative sum of values in another column. Possible without scripting? - #2 by ipazin) and then just use a filter …

- I am not quite sure about the role of the temp file. In any case, I would advise against using an unconnected file writer within any loop and just hoping that it does the right thing at the right time. I would suggest always wiring such tasks explicitly via Flow Variables; it might save you from trouble later.
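
The cumulative sum plus filter idea can be sketched in a few lines of Python. This is not KNIME code; the column values and the limit of 60 are hypothetical. The running total is computed once, then only the rows whose running total stays within the limit are kept, so no loop over a growing table is needed.

```python
from itertools import accumulate

values = [12, 30, 8, 25, 40, 5]  # hypothetical column values
limit = 60                        # hypothetical threshold

# Running total, i.e. the "cumulative sum" column.
cum_sum = list(accumulate(values))

# Row filter: keep rows whose running total has not yet exceeded the limit.
kept = [v for v, c in zip(values, cum_sum) if c <= limit]

print(cum_sum)  # [12, 42, 50, 75, 115, 120]
print(kept)     # [12, 30, 8]
```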

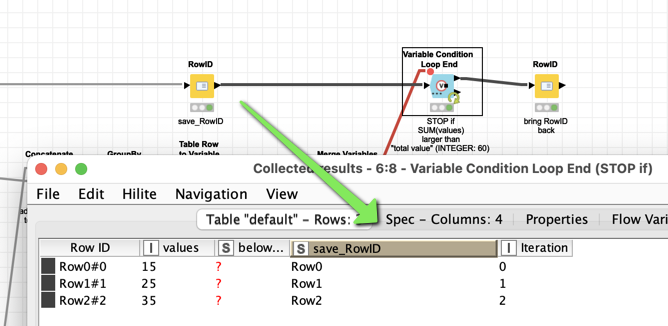

And you could also save the RowID within the loop if you want (Stop my loop if the column sum is greater than 50% of my flow variable – KNIME Hub) …
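
A minimal Python sketch of the "stop when the running sum passes 50% of a flow variable and remember the RowID" logic. The row data, the `budget` value standing in for the flow variable, and the `stop_row` helper are all hypothetical. In a KNIME workflow the same check would sit in the loop's stop condition rather than in a function.

```python
from typing import List, Optional, Tuple


def stop_row(rows: List[Tuple[str, float]], budget: float, share: float = 0.5) -> Optional[str]:
    """Return the RowID at which the running sum first exceeds share * budget."""
    running = 0.0
    for row_id, value in rows:
        running += value
        if running > share * budget:
            return row_id  # the loop would stop here; keep this RowID
    return None  # threshold never reached


# Hypothetical rows as (RowID, value) pairs.
rows = [("Row0", 10.0), ("Row1", 25.0), ("Row2", 20.0), ("Row3", 30.0)]
print(stop_row(rows, budget=100.0))  # Row2
```

Here the running sum is 10, 35, 55, so it first exceeds 50 (50% of 100) at `Row2`.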