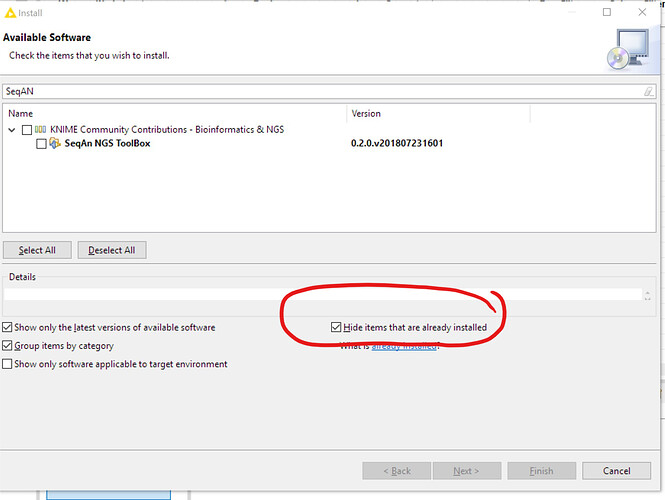

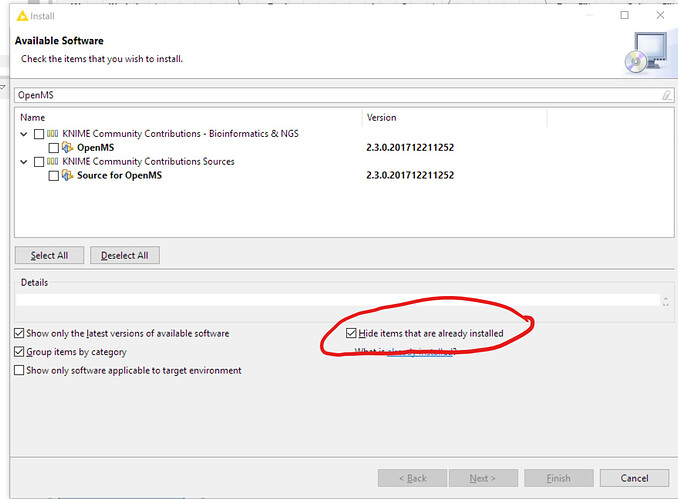

Do you have all community extensions installed, especially OpenMS or SeqAN? These files look like they stem from those extensions. Can you uninstall them and check again?

Since I am running out of hard disk space again:

KNIME currently takes 22.6 GB (!!!) of hard disk space to load images that total less than 200 MB. This also explains the long loading times. Each image consists of only a few kilobytes of data.

Something is going completely nuts.

Stack Trace:

2018-10-30 17:10:31,600 : DEBUG : KNIME-Worker-8 : ImgReader2NodeModel : Image Reader : 0:10:0:1 : Encountered exception while reading image:
java.io.IOException: Es steht nicht genug Speicherplatz auf dem Datenträger zur Verfügung [English: There is not enough space on the disk]
    at java.io.RandomAccessFile.writeBytes(Native Method)
    at java.io.RandomAccessFile.write(RandomAccessFile.java:525)
    at javax.imageio.stream.FileCacheImageInputStream.readUntil(FileCacheImageInputStream.java:148)
    at javax.imageio.stream.FileCacheImageInputStream.read(FileCacheImageInputStream.java:187)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.readInputData(JPEGImageReader.java:312)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.readImageHeader(Native Method)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.readNativeHeader(JPEGImageReader.java:620)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.checkTablesOnly(JPEGImageReader.java:347)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.gotoImage(JPEGImageReader.java:492)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.readHeader(JPEGImageReader.java:613)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.readInternal(JPEGImageReader.java:1070)
    at com.sun.imageio.plugins.jpeg.JPEGImageReader.read(JPEGImageReader.java:1050)
    at javax.imageio.ImageIO.read(ImageIO.java:1448)
    at javax.imageio.ImageIO.read(ImageIO.java:1352)
    at io.scif.formats.ImageIOFormat$Parser.typedParse(ImageIOFormat.java:131)
    at io.scif.formats.JPEGFormat$Parser.typedParse(JPEGFormat.java:161)
    at io.scif.formats.JPEGFormat$Parser.typedParse(JPEGFormat.java:149)
    at io.scif.AbstractParser.parse(AbstractParser.java:252)
    at io.scif.AbstractParser.parse(AbstractParser.java:219)
    at io.scif.AbstractParser.parse(AbstractParser.java:316)
    at io.scif.AbstractParser.parse(AbstractParser.java:51)
    at org.knime.knip.io.ScifioImgSource.getReader(ScifioImgSource.java:488)
    at org.knime.knip.io.ScifioImgSource.getSeriesCount(ScifioImgSource.java:219)
    at org.knime.knip.io.nodes.imgreader2.readfromdialog.ReadImg2Function.apply(ReadImg2Function.java:62)
    at org.knime.knip.io.nodes.imgreader2.readfromdialog.ImgReader2NodeModel.execute(ImgReader2NodeModel.java:166)
    at org.knime.core.node.NodeModel.execute(NodeModel.java:733)
    at org.knime.core.node.NodeModel.executeModel(NodeModel.java:567)
    at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1177)
    at org.knime.core.node.Node.execute(Node.java:964)
    at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:561)
    at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:95)
    at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:179)
    at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:110)
    at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:328)
    at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:204)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)
    at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)
2018-10-30 17:10:31,612 : WARN : KNIME-Worker-8 : ImgReader2NodeModel : Image Reader : 0:10:0:1 : Encountered exception while reading image: file:/C:/Users/Richard/Dropbox/dev/deep_learning_course/Deep%20Learning%20A-Z/Volume%201%20-%20Supervised%20Deep%20Learning/Part%202%20-%20Convolutional%20Neural%20Networks%20(CNN)/Section%208%20-%20Building%20a%20CNN/dataset/training_set/dogs/dog.3401.jpg! view log for more info.

Independently of your image loading problems: it looks like you are working with the dogs and cats dataset from Kaggle. We have a series of example workflows using that dataset on our example server:

https://nodepit.com/server/public-server.knime.com:80/04_Analytics/14_Deep_Learning/02_Keras/04_Cats_and_Dogs

best,

Gabriel

Looks like we are talking about the same data. I got it from a Udemy course. I actually saw some of these nodes already (I copied part of the VGG 16 manipulation code from there :-))

Could this be happening after the Python node that does the data augmentation, or does it happen immediately after reading in the images?

Regarding your screenshots:

(a) The one with the “c” files puzzles me. Can you figure out which node causes the creation of those files? I am not aware of any node of ours that does that.

(b) The other one is expected behaviour. However, the created files should be removed again after the data has been shipped to or from Python. @MarcelW can you comment?

Best,

Christian

Regarding (b): In KNIME Analytics Platform 3.6.1 and earlier, only parts of the data are deleted right after the data transfer. Most of it is deleted when KNIME closes properly. This behavior was fixed in the meantime and will be part of the next release of KNIME AP. @mereep, please feel free to try it out using our nightly build (please see the disclaimer there). We’d highly appreciate any feedback on whether that mitigates the problem.

Hi and thanks for your responses,

I will try to dig into this as soon as I can. But the underlying problem, in my eyes, is that 200 MB of data gets blown up to 22 GB. We are talking about an increase in size by a factor of over 100. This makes save times exceed 10 minutes, and load times are similar. And this is actually very little data. Shouldn’t the fix be found in the image handling itself? What happens if someone wants to work with real data? That is absolutely not possible at this rate.

Maybe the caching strategy is a problem too: the data could easily be held in RAM. I have 16 GB in this machine, so 200 MB of image data would be a snap.

I know that most of this is probably easier said than done. But rest assured that I am not doing anything special right now. Other users will notice this as well and may consequently stay away from the platform. I think that would be a waste.

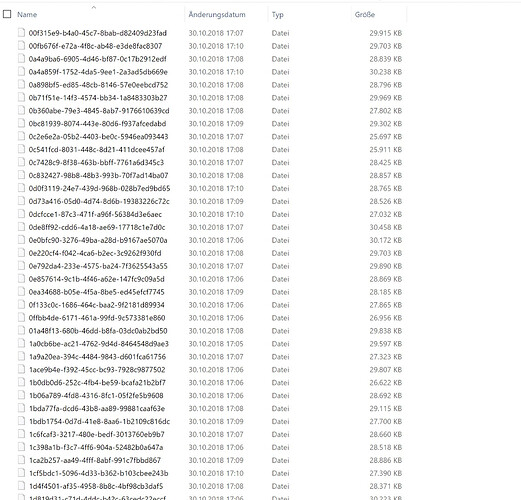

Ok, I think I am at least a step further here: these files don’t seem to be workflow related. They are created just by opening KNIME. For the sake of completeness, I closed all workflows and reopened KNIME → the files are created.

This happens after the splash screen (some seconds after rendering the interface I would assume).

It seems that those files are created by the deep learning backends. During startup we check whether a certain deep learning backend is installed or not (e.g. Keras, Theano etc.). Those files seem to relate to that. Are they deleted after closing KNIME?

No, they stay and are recreated, so they basically add up each time you start the application.

Do you happen to have Theano installed in your Python environment? If yes, could you temporarily uninstall it and see if those c files are still being created?

Also, what serialization library do you use for data transfer between KNIME and Python? You can find that on the Python preference page.
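For the Theano question, a quick way to check whether the package is present is to ask Python where the module would be loaded from, without actually importing it. This is only a minimal sketch: it has to be run with the same Python interpreter that is configured on the KNIME Python preference page, and the module names in the list are just the backends mentioned in this thread.

```python
import importlib.util


def is_installed(module_name):
    """Return True if the top-level module can be located, without importing it."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # Backends mentioned in this thread; extend the tuple as needed.
    for name in ("theano", "keras"):
        status = "installed" if is_installed(name) else "not found"
        print(f"{name}: {status}")
```

Because nothing is imported, the check itself should not trigger whatever the package does at import time.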

Flatbuffers Column Serialization

Yes

I did

Fixes this problem. No .exe files and no .c files.

Alright, thanks for digging into it. I assume those c/exe files were not created in a knime_… subfolder of the temp directory, right? In this case we can’t really control their deletion.

Regarding the Python serialization: The Flatbuffers serializer uses a socket connection to transfer data between KNIME and Python, so we can rule out a leak there. You can ignore my post above.

Are those files:

https://forum.knime.com/uploads/default/original/2X/6/64acdd6fcb4287ec7eb8ff55eeaade0467996a2a.jpeg

all created by a single node (e.g., the image reader) or do they pile up over time/are distributed across the execution of several nodes?

Unfortunately they are in the base folder of the local temp directory. They will stay there forever, since even the Windows temp cleanup doesn’t seem to touch them.
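To see which entries in the temp directory actually take up the space, something like the following could be used. It is a rough sketch that only reads sizes and deletes nothing; `entry_size` and `largest_entries` are names made up for this example.

```python
import os
import tempfile
from pathlib import Path


def entry_size(path):
    """Size in bytes of a file, or of all files below a directory."""
    try:
        if path.is_file():
            return path.stat().st_size
    except OSError:
        return 0  # not readable; count it as empty
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            try:
                total += os.path.getsize(os.path.join(root, name))
            except OSError:
                pass  # file vanished or is not readable; skip it
    return total


def largest_entries(directory, top=10):
    """Return the `top` largest direct children of `directory` as (bytes, name)."""
    sizes = [(entry_size(child), child.name) for child in directory.iterdir()]
    return sorted(sizes, reverse=True)[:top]


if __name__ == "__main__":
    temp_dir = Path(tempfile.gettempdir())
    for size, name in largest_entries(temp_dir):
        print(f"{size / 1e6:10.1f} MB  {name}")
```

Running it once before and once after executing a node should show which folders a given node adds.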

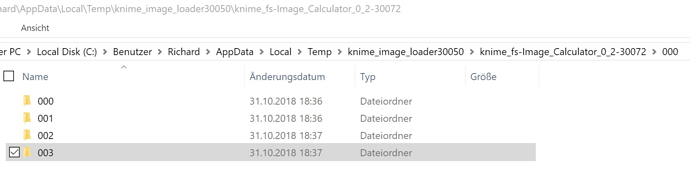

This happens in a single node execution. Furthermore, upstream nodes will create their own files once a downstream node creates some. Take for example Image Reader → Image Calculator: you can execute the Image Reader and only some empty(!) folders are created. Then you execute the Image Calculator, and both the Image Reader and the Image Calculator emit a large number of huge files.

I made another, probably important, observation: the huge files only get created when loading a certain amount of data. If I load fewer images, only folders with no content are created. If I load a certain number of images, all files are created together in one run. Also note that this behaviour seems to add up the amount of data across nodes: if a downstream node exceeds a limit that the upstream node did not, both still start to party on the hard disk.

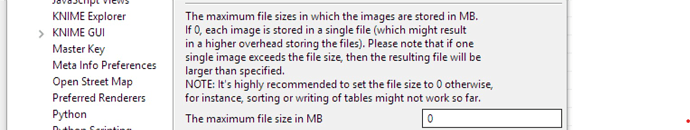

Also note that this setting influences the file size and the number of files. If set to zero, a file between 1 and 5 MB in size is created for every(!) image. 1000 per folder in

If set to a higher value (30 in my example), the files just get fewer and bigger. The total amount of data is about the same: 15 GB for 4000 images.

Also note that the files created for the Image Reader are all < 1 MB if the setting is set to 0. The ones for the Image Calculator are between 1 and 5 MB.
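As a back-of-the-envelope check of the numbers in this thread: a decoded image needs roughly width × height × channels × bytes per sample, no matter how small the JPEG file is. The sketch below uses the totals reported above; the 500×375 RGB image is a purely hypothetical size chosen for illustration, not a measured property of the dataset.

```python
def decoded_size_bytes(width, height, channels=3, bytes_per_sample=1):
    """Approximate size of an uncompressed raster image in bytes."""
    return width * height * channels * bytes_per_sample


# Figures reported in this thread (decimal units).
temp_bytes = 15e9        # about 15 GB of temp files ...
image_count = 4000       # ... for 4000 images
per_image = temp_bytes / image_count

total_disk_bytes = 22.6e9   # disk usage reported earlier
source_bytes = 200e6        # upper bound for the original images
blow_up = total_disk_bytes / source_bytes

# Hypothetical example image: 500x375 pixels, RGB, 8 bits per channel.
raw_example = decoded_size_bytes(500, 375)

print(f"temp data per image: {per_image / 1e6:.2f} MB")
print(f"blow-up factor: at least {blow_up:.0f}x")
print(f"hypothetical 500x375 RGB image, uncompressed: {raw_example / 1e6:.2f} MB")
```

Since the source images are smaller than 200 MB in total, the blow-up factor is a lower bound. Under the assumed image size, decompression alone would account for well under 1 MB per image, so it would not explain several MB per image by itself.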

Regarding the C files: there is not much we can actually do about this, as Theano is creating these files. Maybe you can uninstall Theano?

Regarding the other temporary files: In the current implementation of KNIME Image Processing, if you read or process an image, the data is duplicated for each image. The duplicated images are only written to disk in the temp folder if the used main memory exceeds a certain percentage of the heap space available to the JVM. That’s why you see the temporary files only after a certain number of images have been read. However, 200 MB of data (even uncompressed) shouldn’t result in > 10 GB of temporary data. This is something we will investigate.

Having all of that said, we’re working on a new version of KNIME Image Processing which actually doesn’t duplicate all the data all the time. Especially for very large images this is a problem which we will address. I can’t give you an estimate when it will happen, though.
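To illustrate the threshold behaviour described above (nothing on disk for small inputs, then all files at once), here is a toy sketch of a cache that spills to disk once a memory budget is exceeded. It is not the KNIME Image Processing implementation; the class name `SpillingCache`, the fixed byte budget and the file naming are assumptions made up for the example.

```python
import tempfile
from pathlib import Path


class SpillingCache:
    """Toy cache: keeps blobs in memory until a byte budget is exceeded,
    then writes everything held so far to disk in one go."""

    def __init__(self, budget_bytes, spill_dir):
        self.budget_bytes = budget_bytes
        self.spill_dir = Path(spill_dir)
        self.in_memory = {}   # key -> bytes
        self.on_disk = {}     # key -> Path of the spilled file
        self.used_bytes = 0

    def put(self, key, blob):
        # Keys are assumed to be unique; a real cache would handle overwrites.
        self.in_memory[key] = blob
        self.used_bytes += len(blob)
        if self.used_bytes > self.budget_bytes:
            self._spill_all()

    def _spill_all(self):
        for key, blob in self.in_memory.items():
            path = self.spill_dir / f"{key}.bin"
            path.write_bytes(blob)
            self.on_disk[key] = path
        self.in_memory.clear()
        self.used_bytes = 0

    def get(self, key):
        if key in self.in_memory:
            return self.in_memory[key]
        return self.on_disk[key].read_bytes()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cache = SpillingCache(budget_bytes=1000, spill_dir=tmp)
        for i in range(3):
            cache.put(f"img{i}", bytes(300))   # 900 bytes, still under budget
        print("files after 3 images:", len(list(Path(tmp).iterdir())))
        cache.put("img3", bytes(300))          # 1200 bytes, budget exceeded
        print("files after 4 images:", len(list(Path(tmp).iterdir())))
```

With a budget like this, three small entries leave the directory empty and the fourth one causes all four to be written, which matches the pattern reported in the thread.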

Sounds good. I cannot explain that either. As a matter of fact, even very small images seem to be blown up considerably: an image of a few KB takes up multiple MB of disk space.

I also wanted to look into that a bit, but for some reason I cannot get KNIP to work within my Eclipse.

If I can help in some way, let me know!

Thanks! Confirmed: close KNIME and make sure it is no longer running, then the temp files can be moved to a different disk or deleted. I am using an Optane drive and had accrued 95 GB of temp directory files!