Hello,

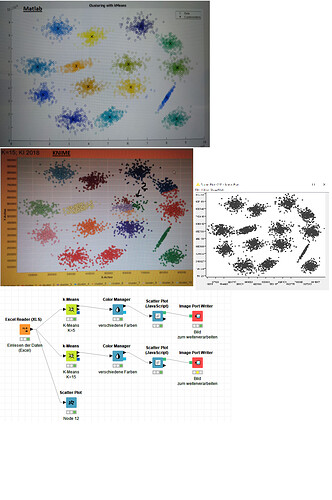

i’m trying to find clusters for an easy dataset. By plotting the data it easyly can be seen that there are 15 clusters.

But the accuracy of the k-means Node in KNIME is realy bad or even wrong by comparing it to the results of matlab. (see the attachment).

Is there a way to get better results ? Setting the iterations to a higher number does not have influence on this problem. I think the hole algorithm has to perform a few times and those resluts has to be compared.

Thank you very much in advance.

Hello Simon1795,

I believe this is an issue of initialization for the cluster centroids.

The k-means implemented in Matlab is also referred to as k-means++ and the ++ part is a smart initialization heuristic (https://www.mathworks.com/help/stats/kmeans.html#bueftl4-1).

In KNIME we use the first rows as initialization which can be an issue if multiple of those rows belong to the same cluster.

Our KNIME Distance Matrix extension also contains a k-Medoids node that performs a similar task to the k-Means node but allows you to do more configurations including the used distances. It also uses a random initialization which I found to be better than the initialization used in k-Means.

Thank you for bringing this to our attention.

Best,

nemad

Please see if this is a related issue.

K=2, I am trying out with a tiny data set. The results are inconsistent.

If the dataset is X = {1,2,8,10} and Y = {1,1,10,8} the cluster centers are identified fine. {1.5,1} and {9,9}. This matches results from Python sklearn also.

If I switch to X = {1,2,8,10} and Y = {2,1,10,8} the cluster centers are not. gives as {4.5,6} and {6,4.5} when it should be {1.5,1.5} and {9,9}

Thanks in advance.