Hello KNIME community,

It seems there is a serious lack of documentation for the Keras extension (no offense). Therefore I am asking whether someone more knowledgeable than I am knows how to configure the hidden layers in a Keras LSTM. It seems nearly impossible to find with Google, and no prior workflows (that I have seen) have hidden layers for their LSTM.

Hi again @Repletion

Apologies that some of the documentation is a bit vague. It’s worth noting here that the Keras integration uses the Keras Python library under the hood, so in some cases going straight to the Keras documentation may be helpful:

https://keras.io/api/layers/recurrent_layers/lstm/

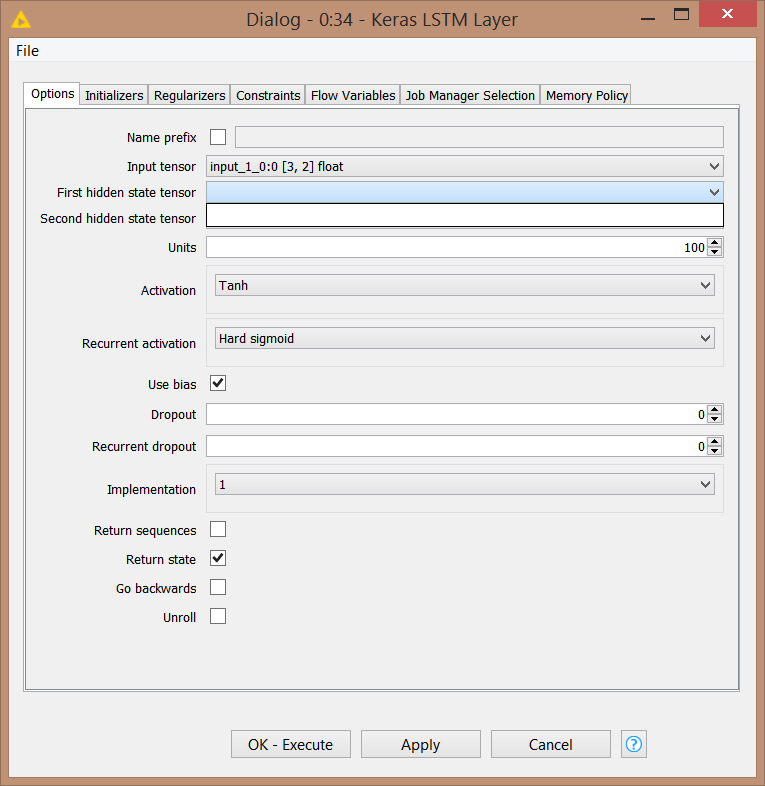

The short of it is that the hidden state vector of the LSTM unit in the KNIME node is a tensor of shape 256. You can customize the layer by selecting the recurrent activation function (the activation used internally for the LSTM unit’s input, forget, and output gates) and, optionally, by setting the initial values of the hidden states through the two optional input ports and the corresponding drop-downs in the dialog. You could use that if you had specific values you wanted to embed into the hidden state from the beginning. In your use case I don’t think you’d want to.
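For reference, here is roughly how those settings look when written directly against the Keras Python library. This is only a minimal sketch, not what the KNIME node generates: the sequence length, feature count, batch size, and input names are made up for illustration, and it assumes the 256 above corresponds to the `units` argument of `keras.layers.LSTM`.

```python
import numpy as np
import keras
from keras import layers

UNITS = 256      # size of the hidden state vector (assumed to match the node's setting)
TIMESTEPS = 10   # hypothetical sequence length
FEATURES = 8     # hypothetical number of features per time step
BATCH = 4        # hypothetical batch size

# One input for the sequence, plus two optional inputs for the initial states.
seq_in = keras.Input(shape=(TIMESTEPS, FEATURES), name="sequence")
h0_in = keras.Input(shape=(UNITS,), name="initial_hidden_state")
c0_in = keras.Input(shape=(UNITS,), name="initial_cell_state")

lstm = layers.LSTM(
    UNITS,
    activation="tanh",               # activation for the candidate values and the output
    recurrent_activation="sigmoid",  # activation for the input, forget, and output gates
    return_state=True,               # also return the final hidden and cell states
)
output, h_final, c_final = lstm(seq_in, initial_state=[h0_in, c0_in])

model = keras.Model([seq_in, h0_in, c0_in], [output, h_final, c_final])

# Random input data; zero initial states behave like not setting them at all.
x = np.random.rand(BATCH, TIMESTEPS, FEATURES).astype("float32")
h0 = np.zeros((BATCH, UNITS), dtype="float32")
c0 = np.zeros((BATCH, UNITS), dtype="float32")

out, h, c = model.predict([x, h0, c0], verbose=0)
print(out.shape, h.shape, c.shape)
```

Each of the three outputs has one row per sample and `UNITS` columns. Because the layer does not return the full sequence here, `out` is simply the final hidden state `h`.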

Here’s an example where the initial hidden states are set in an encoder / decoder network for text generation:
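The same idea can be sketched directly in Keras. To be clear, this is not the workflow referred to above, just a minimal illustration under my own assumptions: the vocabulary size, sequence lengths, and one-hot style inputs are hypothetical. The encoder's final hidden and cell states are passed to the decoder LSTM as its initial state.

```python
import numpy as np
import keras
from keras import layers

UNITS = 256   # hidden state size, shared by encoder and decoder
VOCAB = 50    # hypothetical vocabulary size (one-hot encoded tokens)
SRC_LEN = 12  # hypothetical input sequence length
TGT_LEN = 9   # hypothetical output sequence length

# Encoder: discard the per-step output, keep only the final states.
enc_in = keras.Input(shape=(SRC_LEN, VOCAB), name="encoder_input")
_, enc_h, enc_c = layers.LSTM(UNITS, return_state=True)(enc_in)

# Decoder: starts from the encoder's final states instead of zeros.
dec_in = keras.Input(shape=(TGT_LEN, VOCAB), name="decoder_input")
dec_seq = layers.LSTM(UNITS, return_sequences=True)(
    dec_in, initial_state=[enc_h, enc_c]
)
probs = layers.Dense(VOCAB, activation="softmax")(dec_seq)

model = keras.Model([enc_in, dec_in], probs)

# Untrained model on random data, just to show the shapes involved.
x_src = np.random.rand(2, SRC_LEN, VOCAB).astype("float32")
x_tgt = np.random.rand(2, TGT_LEN, VOCAB).astype("float32")
pred = model.predict([x_src, x_tgt], verbose=0)
print(pred.shape)
```

The prediction holds one probability distribution over the vocabulary per sample and per decoder time step.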

If you have other questions about the configuration let us know.
