Hi there,

I’ve been tasked with finding (unlabelled) anomalies in log files and figured Doc2Vec followed by DBSCAN might be a good choice. To verify that this strategy works, I came up with an extremely simplified example. The example itself wouldn’t really require language processing, but the real messages are more complex: a message typically consists of multiple words, some messages are similar and differ in only a few words, and others are completely different. Despite the simplification, I can’t tell whether the approach makes sense, and the examples I found here seem to be either different or a bit too complex to get started with. So maybe someone has time to take a look at this and share their thoughts?

Logs typically contain messages for the different operations a machine carries out while processing a product. In my example there are only three messages (a, b and c), and they always occur in this sequence. However, some products are more difficult and some tasks need multiple attempts, but b should always come after n repetitions of a, and c after n repetitions of b (where n is greater than or equal to 1). I limited my example to 6 scenarios:

22 normal products (a-b-c)

22 difficult products (a-a-b-b-c-c)

22 very difficult products (a-a-a-b-b-b-c-c-c)

3 anomalies (a-a-a, a-b-c-a-b-c and c-c-c-a-b-a-a-b-b).
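For anyone who wants to reproduce the mock data without the spreadsheet, the scenarios above could be generated roughly as follows. This is only a sketch: the names `SCENARIOS`, `ANOMALIES`, `build_dataset` and `follows_rule` are made up for illustration, the window numbering is an assumption, and the regular expression is one reading of the ordering rule (one or more a, then one or more b, then one or more c). It does not enforce equal repetition counts, and it only works here because each message is a single character.

```python
import re

# Scenario name -> (message sequence, number of products), as listed above.
SCENARIOS = {
    "normal": ("abc", 22),
    "difficult": ("aabbcc", 22),
    "very difficult": ("aaabbbccc", 22),
}
# The three anomalous sequences from the list above.
ANOMALIES = ["aaa", "abcabc", "cccabaabb"]

# Assumed encoding of the ordering rule: a's, then b's, then c's (each at least once).
VALID = re.compile(r"a+b+c+")


def build_dataset():
    """Return rows of (window id, scenario label, list of messages)."""
    rows = []
    window = 1  # hypothetical numbering; the real file may number windows differently
    for label, (seq, count) in SCENARIOS.items():
        for _ in range(count):
            rows.append((window, label, list(seq)))
            window += 1
    for seq in ANOMALIES:
        rows.append((window, "anomaly", list(seq)))
        window += 1
    return rows


def follows_rule(messages):
    """True if the whole message sequence matches the assumed ordering rule."""
    return VALID.fullmatch("".join(messages)) is not None


dataset = build_dataset()
violations = [window for window, _, msgs in dataset if not follows_rule(msgs)]
print(len(dataset), "products,", len(violations), "rule violations")
```

A rule check like this gives a ground truth to compare any clustering result against, since the data itself is unlabelled.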

Doc2Vec_MockUp_DataSet.xlsx (15.9 KB)

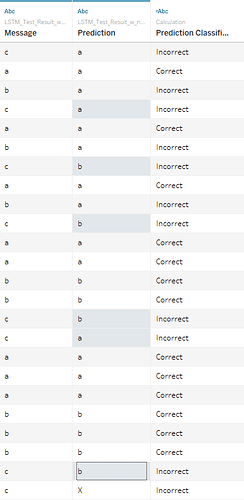

My goal is to find three product clusters and 3 anomalies, either as separate clusters or as noise. I figured I could use the product ID (called “Window” in the attached file) as the document title and the message (a, b or c) as the text, then train a Doc2Vec model on the data, apply some distance metric and use DBSCAN to find the clusters. I’m not getting anything close to what I expect, no matter which distance metric or DBSCAN settings I use, so I’m wondering whether my understanding and usage of Doc2Vec is correct in the first place. Any thoughts on this are highly appreciated!

Doc2Vec_Test.knwf (111.5 KB)

Thanks in advance,

Mark