Hi,

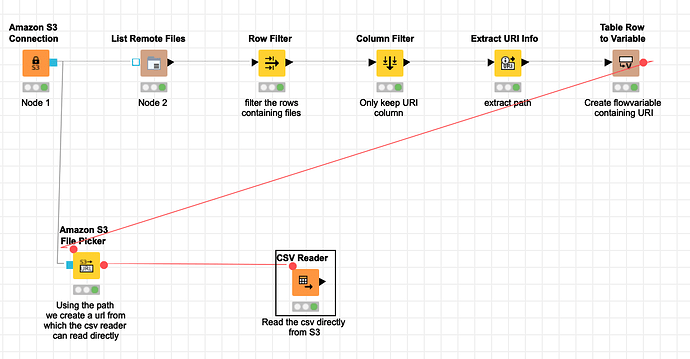

I just started using KNIME and really like it. I'm having a problem reading a number of CSV files from S3 by URL. My flow is as follows:

- Connect to S3 (works)

- List files (works)

- Convert list to flow variables (works → converts to https URL, which is what I use)

- File Picker to get the file (works)

- CSV Reader to read the CSV (fails here, see below)

The URL created for the file has the following form:

https://**my.bucket.name**.s3.us-east-1.amazonaws.com/myfilename.csv?<security signature/creds/etc.>

This URL is provided as a flow variable, and when the CSV Reader tries to read it, the read fails. The logs show the following errors:

java.security.cert.CertificateException: No subject alternative DNS name matching my.bucket.name.s3.us-east-1.amazonaws.com found

Caused by: javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateException: No subject alternative DNS name matching

I used a browser and openssl to look at the security certificate, and I see that the error message is literally correct: there is no name for my bucket in the SSL certificate. That's to be expected, since AWS can't know how I'll name my buckets. So they use a wildcard name in the certificate:

*.s3.us-east-1.amazonaws.com

When I go to my browser and put in the full URL that was created by the flow variable, I am able to easily download the file.
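
One thing that may be worth checking here, offered as a sketch and not as a diagnosis: under the usual hostname-matching rule (RFC 6125, section 6.4.3), a `*` in a certificate name stands for exactly one leftmost DNS label. If the real bucket name contains dots, as the placeholder `my.bucket.name` does, the wildcard would not cover it. The class `WildcardMatch` and the host `mybucketname.s3.us-east-1.amazonaws.com` below are hypothetical, and this is a simplified illustration of the rule, not the code that KNIME or the JDK actually runs.

```java
import java.util.Locale;

// Illustrative only: simplified left-most-label wildcard matching.
// Class and method names are made up for this example.
final class WildcardMatch {

    /** True if host matches pattern, where a leading "*" stands for exactly one DNS label. */
    static boolean matches(String pattern, String host) {
        String p = pattern.toLowerCase(Locale.ROOT);
        String h = host.toLowerCase(Locale.ROOT);
        if (!p.startsWith("*.")) {
            return p.equals(h); // no wildcard: exact match only
        }
        String suffix = p.substring(1); // e.g. ".s3.us-east-1.amazonaws.com"
        if (!h.endsWith(suffix)) {
            return false;
        }
        String first = h.substring(0, h.length() - suffix.length());
        // The wildcard covers one non-empty label, so it may not contain a dot.
        return !first.isEmpty() && first.indexOf('.') < 0;
    }

    public static void main(String[] args) {
        String certName = "*.s3.us-east-1.amazonaws.com";
        // Hypothetical bucket name without dots: one label, so it matches.
        System.out.println(matches(certName, "mybucketname.s3.us-east-1.amazonaws.com"));
        // Placeholder name from the URL above: three extra labels, so it does not match.
        System.out.println(matches(certName, "my.bucket.name.s3.us-east-1.amazonaws.com"));
    }
}
```

If that is what is happening, the failure is a hostname check and not a trust-chain problem, so adding certificates to a keystore would not be expected to change the outcome.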

I searched these forums for a solution, and it looks like the SSLHandshake error has come up before. The fix seems to be to import the certificate into the local keystore. However, when I import the certificate chain into my KNIME keystore (this is a Windows install), located at \Program Files\KNIME\plugins\org.knime.binary.jre.win32.x86_64_1.8.0.202-b08\jre\lib\security\cacerts , I still get the error. I've verified that the cert chain I imported is present by listing the certs in the keystore and looking for the fingerprint: it's there. I'll note, however, that I gave the cert an alias ("aws_test"), so I'm not sure if that changes anything.
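
For anyone who wants to repeat the keystore check, here is a minimal sketch that lists every alias in a keystore file together with the SHA-256 fingerprint of its certificate. It assumes the keystore path is passed as the first argument and that the password is the JDK default for `cacerts`, `changeit`, unless a second argument is given. The class name `ListCacerts` is made up for the example, and the code sticks to Java 8 APIs to match the bundled JRE.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.security.KeyStore;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.cert.Certificate;
import java.util.Collections;

// Illustrative helper, not part of KNIME: prints "alias  fingerprint" per entry.
final class ListCacerts {

    /** Formats bytes as colon-separated upper-case hex, e.g. "0A:FF". */
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < bytes.length; i++) {
            if (i > 0) {
                sb.append(':');
            }
            sb.append(String.format("%02X", bytes[i] & 0xFF));
        }
        return sb.toString();
    }

    /** SHA-256 digest of the given bytes, formatted with hex(). */
    static String sha256(byte[] data) {
        try {
            return hex(MessageDigest.getInstance("SHA-256").digest(data));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e); // SHA-256 is required on every JRE
        }
    }

    public static void main(String[] args) throws Exception {
        String path = args[0]; // path to the cacerts file
        // Assumption: default cacerts password unless one is supplied.
        char[] password = (args.length > 1 ? args[1] : "changeit").toCharArray();

        KeyStore ks = KeyStore.getInstance(KeyStore.getDefaultType());
        try (InputStream in = new FileInputStream(path)) {
            ks.load(in, password);
        }
        for (String alias : Collections.list(ks.aliases())) {
            Certificate cert = ks.getCertificate(alias);
            if (cert != null) {
                System.out.println(alias + "  " + sha256(cert.getEncoded()));
            }
        }
    }
}
```

Comparing the fingerprint printed next to `aws_test` with the one shown by the browser or openssl would confirm that the intended certificate is the one stored under that alias.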

Does anyone have any thoughts on how I might fix this? What have I done wrong? Clearly, the browser honors the wildcard name on the cert, while KNIME seems to have a problem with it. Could I address this with an LMHOSTS entry?

Thanks (in advance).

_Ravi