I think with a component (similar to a metanode but with much more options) you could tell it to write to disk, that might save some RAM. Writing to disk of course comes with a price tag. You would need time to process it from memory to disk and maybe back, but it could result in a more stable workflow.

As long as a workflow is open KNIME would keep the data ‘ready’ that could either be in memory or on disk. So if you still have issues it might be an option to split the workflow into sub-workflows (which obviously increases the handling costs thru calls and planning). As a side note: with the KNIME server you could orchestrate a cascade of workflows easily

A Cache node also can either be held in memory (faster) or could be told to write its content to disk. The cache per se would not save memory it would collect all the previous transactions into one place which sometimes could make a workflow more stable and might be placed before you export data to an outside file or you write it into a database.

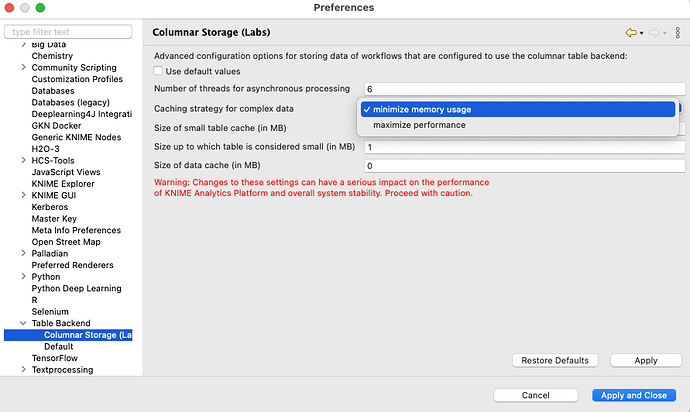

The columnar storage has some further options to tweak (you might want to be careful playing with that). But a word of advise: I have seen problems with the underlying parquet format and KNIME.