Hi,

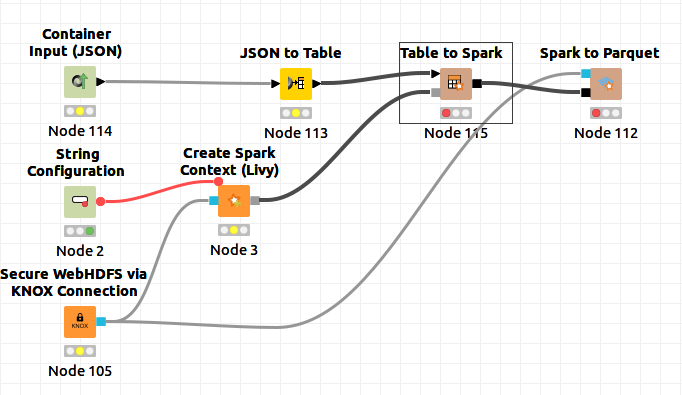

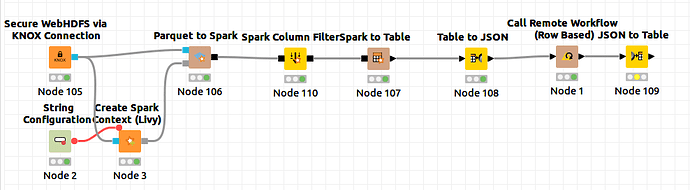

I am trying to use the Call Remote Workflow (Row Based) node to call another workflow. In this sample example, I am just trying to call a workflow and save the output file from the called workflow in our HDFS. I have added pictures of:

- call_workflow

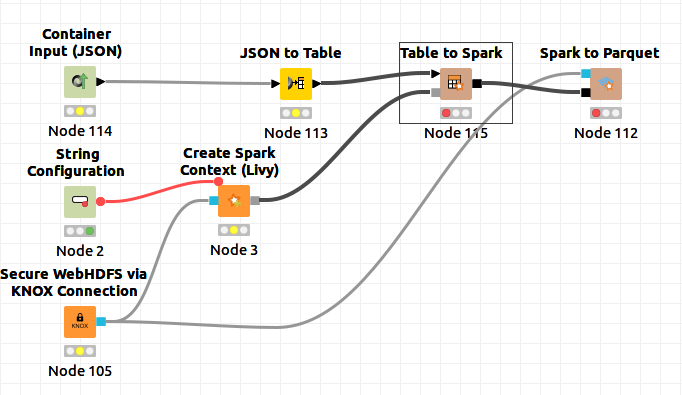

- called_workflow

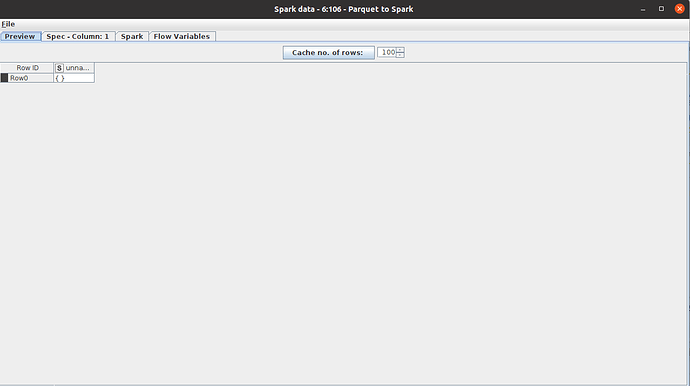

After executing call_workflow as shown above, I tried to access the resulting DataFrame, and it looked something like this:

I can’t figure out the reason behind this problem. Instead of this empty set, there should be a dataset of 1000 rows.

Hi,

Have you set “From Column” as the input data to be sent in the Call Remote Workflow node? For this option to appear, you need to click the “Load input format” button.

Kind regards,

Alexander

Hi,

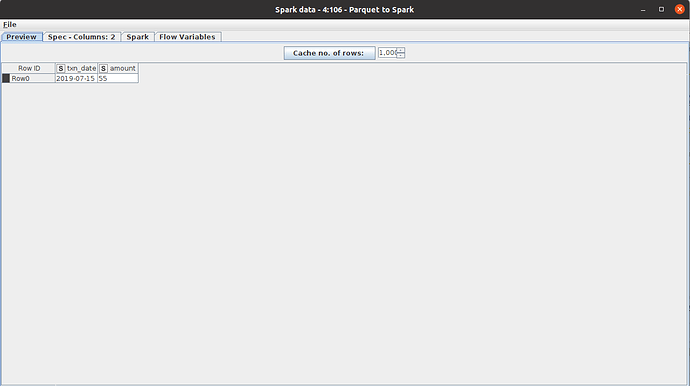

I did that, and this time the called workflow was executed. However, I think each new row overwrites the previous one, because the final output file contains only one row: the last row of the input file.

Hi,

In your Spark to Parquet node, did you specify what to do when the file already exists? I think you are looking for the “Append” option here and currently the workflow is simply overwriting the file.
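To see why overwrite mode leaves only the last row, here is a minimal sketch in plain Python (not KNIME). It assumes the row-based node invokes the called workflow once per input row and that each invocation writes its single row to the same output path. A text file stands in for the Parquet file, and the helper `write_rows` is hypothetical, used only for illustration.

```python
from pathlib import Path
import tempfile


def write_rows(rows, path, append):
    """Hypothetical helper: simulate one workflow call per row, each writing one row."""
    for row in rows:
        # "w" truncates the file on every call (overwrite); "a" adds to the end (append)
        mode = "a" if append else "w"
        with open(path, mode) as f:
            f.write(row + "\n")
    return path.read_text().splitlines()


rows = ["row1", "row2", "row3"]
with tempfile.TemporaryDirectory() as d:
    overwritten = write_rows(rows, Path(d) / "overwrite.txt", append=False)
    appended = write_rows(rows, Path(d) / "append.txt", append=True)

print(overwritten)  # ['row3']
print(appended)     # ['row1', 'row2', 'row3']
```

With overwrite semantics, every call discards what the previous call wrote, so only the last row survives. This matches the single-row output file described above.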

Additionally, you might want to have a look at the Call Workflow (Table Based) node instead of the row-based one. You can simply send the whole table and then write it all to the Parquet file at once. Be aware, though, that this is only feasible for data sizes up to around 100MB.

Kind regards,

Alexander

Since I am working with big data, does that mean the Call Workflow nodes won’t work? If so, what is the alternative?

Hi,

I don’t think this is the case. You can just turn on the append option in your Parquet Writer and it should output more than one line.

Kind regards,

Alexander

I am asking with regard to the Call Workflow nodes. Suppose I want to call a workflow using a Call Workflow node. Is there any limit on the size of the input data that can be passed through the Call Workflow node to the called workflow?

Oh, I see! Sorry for the confusion. Yes, the “soft limit” is around 100MB. If the data is larger, it makes more sense to upload it to the server using the normal file handling nodes (e.g., File Upload before KNIME 4.3 and Transfer Files from KNIME 4.3 onward). After the upload, you can then access the data in your workflow using a KNIME Server Connector node.

Kind regards,

Alexander