Hi all!

It’s really awesome to see all those helpful packages and examples popping up that help you try out AI models on your own!

I found a bug in the GPT4All nodes in the KNIME AI Extension package. When you are offline and select a model to be read from a local file, the GPT4All Connectors still try to access gpt4all.io (to fetch /models/models2.json). That request should not be needed, because I am not downloading a model from there; I just want to connect to the one I have stored locally. When I am connected to the internet, the connectors work just fine with the local file and do an awesome job. Here is the log I get when offline:
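To make the behavior I would expect a bit more concrete, here is a minimal sketch in plain Python. It is not the code of the KNIME nodes or of the gpt4all package; `resolve_model_file` and `fetch_catalog` are hypothetical names I made up for illustration, with `fetch_catalog` standing in for whatever fetches https://gpt4all.io/models/models2.json. The idea is simply that the online model list is only consulted when the local file is missing.

```python
from pathlib import Path
from typing import Callable, List, Optional


def resolve_model_file(
    model_path: str,
    fetch_catalog: Optional[Callable[[], List[dict]]] = None,
) -> Path:
    """Return the local model file, touching the network only if it is missing.

    `fetch_catalog` is a hypothetical stand-in for the request to
    gpt4all.io/models/models2.json; it is not a real gpt4all function.
    """
    path = Path(model_path)
    if path.is_file():
        # The file is already on disk, so no catalog lookup is required.
        return path

    if fetch_catalog is None:
        # Downloads are disabled and there is no local file: fail clearly.
        raise FileNotFoundError(f"No model file at {path} and no catalog available.")

    # Only now is an online lookup justified (download logic omitted here).
    known = {entry.get("filename") for entry in fetch_catalog()}
    if path.name not in known:
        raise ValueError(f"{path.name} is not in the online model list.")
    raise FileNotFoundError(f"{path.name} is listed online but not downloaded to {path}.")
```

With a function like this, an existing file such as `all-MiniLM-L6-v2-f16.gguf` would be returned even when `fetch_catalog` raises a `ConnectionError`, because the lookup is never reached. The traceback below also shows the gpt4all constructor passing `allow_download` on to `retrieve_model`; whether setting that flag to `False` skips the lookup probably depends on the installed gpt4all version, so treat that as an assumption to check rather than a confirmed workaround.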

WARN GPT4All Embeddings Connector 3:1979 Traceback (most recent call last):

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connection.py", line 203, in _new_conn

sock = connection.create_connection(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\util\connection.py", line 60, in create_connection

for res in socket.getaddrinfo(host, port, family, socket.SOCK_STREAM):

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\socket.py", line 962, in getaddrinfo

for res in _socket.getaddrinfo(host, port, family, type, proto, flags):

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

socket.gaierror: [Errno 11001] getaddrinfo failed

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connectionpool.py", line 790, in urlopen

response = self._make_request(

^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connectionpool.py", line 491, in _make_request

raise new_e

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connectionpool.py", line 467, in _make_request

self._validate_conn(conn)

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connectionpool.py", line 1096, in _validate_conn

conn.connect()

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connection.py", line 611, in connect

self.sock = sock = self._new_conn()

^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connection.py", line 210, in _new_conn

raise NameResolutionError(self.host, self, e) from e

urllib3.exceptions.NameResolutionError: <urllib3.connection.HTTPSConnection object at 0x000001F69988F810>: Failed to resolve 'gpt4all.io' ([Errno 11001] getaddrinfo failed)

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\requests\adapters.py", line 486, in send

resp = conn.urlopen(

^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\connectionpool.py", line 844, in urlopen

retries = retries.increment(

^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\urllib3\util\retry.py", line 515, in increment

raise MaxRetryError(_pool, url, reason) from reason # type: ignore[arg-type]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='gpt4all.io', port=443): Max retries exceeded with url: /models/models2.json (Caused by NameResolutionError("<urllib3.connection.HTTPSConnection object at 0x000001F69988F810>: Failed to resolve 'gpt4all.io' ([Errno 11001] getaddrinfo failed)"))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Software\knime_5.2.0\plugins\org.knime.python.llm_5.2.0.202312061509\src\main\python\src\models\gpt4all.py", line 644, in execute

_Embeddings4All(

File "pydantic\main.py", line 339, in pydantic.main.BaseModel.__init__

File "pydantic\main.py", line 1102, in pydantic.main.validate_model

File "C:\Software\knime_5.2.0\plugins\org.knime.python.llm_5.2.0.202312061509\src\main\python\src\models\gpt4all.py", line 476, in validate_environment

values["client"] = _GPT4All(

^^^^^^^^^

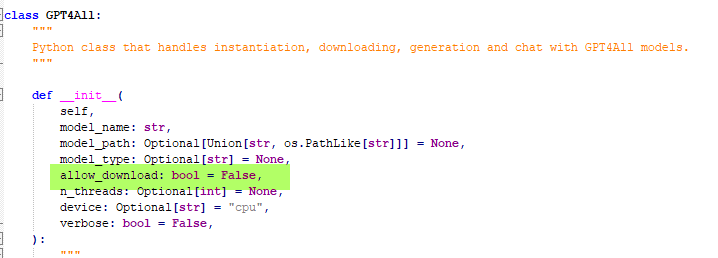

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\gpt4all\gpt4all.py", line 97, in __init__

self.config: ConfigType = self.retrieve_model(model_name, model_path=model_path, allow_download=allow_download, verbose=verbose)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

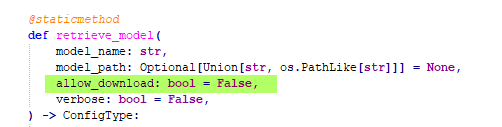

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\gpt4all\gpt4all.py", line 149, in retrieve_model

available_models = GPT4All.list_models()

^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\gpt4all\gpt4all.py", line 118, in list_models

resp = requests.get("https://gpt4all.io/models/models2.json")

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\requests\api.py", line 73, in get

return request("get", url, params=params, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\requests\api.py", line 59, in request

return session.request(method=method, url=url, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\requests\sessions.py", line 589, in request

resp = self.send(prep, **send_kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\requests\sessions.py", line 703, in send

r = adapter.send(request, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\bundling\envs\org_knime_python_llm\Lib\site-packages\requests\adapters.py", line 519, in send

raise ConnectionError(e, request=request)

requests.exceptions.ConnectionError: HTTPSConnectionPool(host='gpt4all.io', port=443): Max retries exceeded with url: /models/models2.json (Caused by NameResolutionError("<urllib3.connection.HTTPSConnection object at 0x000001F69988F810>: Failed to resolve 'gpt4all.io' ([Errno 11001] getaddrinfo failed)"))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Software\knime_5.2.0\plugins\org.knime.python3.nodes_5.2.0.v202311290857\src\main\python\_node_backend_launcher.py", line 718, in execute

outputs = self._node.execute(exec_context, *inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Software\knime_5.2.0\plugins\org.knime.python.llm_5.2.0.202312061509\src\main\python\src\models\gpt4all.py", line 650, in execute

raise ValueError(f"The model at path {self.model_path} is not valid.")

ValueError: The model at path C:\Users\dobo\knime-workspace-5.2\llm_models\all-MiniLM-L6-v2-f16.gguf is not valid.

ERROR GPT4All Embeddings Connector 3:1979 Execute failed: The model at path C:\Users\dobo\knime-workspace-5.2\llm_models\all-MiniLM-L6-v2-f16.gguf is not valid.