Dear all,

I am new to KNIME. Weka has this nice ‘Experimenter’ where you can compare the performance of several classifiers, with repeated holdout or repeated cross-validation, using a t-test. I like this feature: it gives more ‘significance’ to comparing classifiers (instead of just saying ‘this one is better than that one’). Can this be done in KNIME, i.e. analysing whether a classifier performs significantly better or worse than another? Are there any existing workflows doing this? Any help is appreciated.

many thanks,

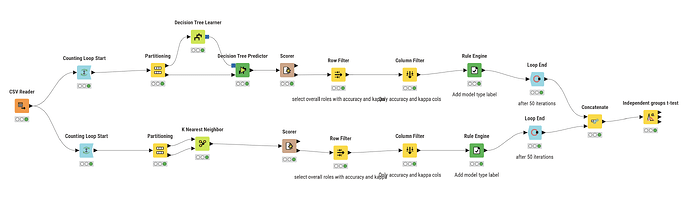

Ok, I found a solution using loops. Is there another way? Maybe shorter?

Hello @stephan_p

Your approach solves the problem. However, there is another, arguably better way to do cross-validation in KNIME. Yes, it is still a loop (sort of), but it lets you assess performance for each fold.

You will need the X-Partitioner and X-Aggregator nodes; customizing this example will probably be a better solution in your case.

Also, FYI, @alinebessa is working on a component that can do a 5x2cv paired t-test in KNIME. The current work in progress can be found here, and it might be of help in your specific case.

Best,

Ali


Hi @stephan_p! I highly recommend using the 5x2cv component that Ali pointed out. Maybe you can adapt it to your needs. This type of validation reduces certain biases that come from re-using data when comparing two models.
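For reference, here is a minimal Python sketch of the statistic behind the 5x2cv paired t-test as described by Dietterich (1998). It is not the component's implementation, just an illustration of the calculation. The function name `five_by_two_cv_t` and the example numbers are made up; the input is assumed to be the per-fold score differences (model A minus model B) from 5 repetitions of 2-fold cross-validation.

```python
import math

# Two-sided 5% critical value of the t distribution with 5 degrees of freedom.
T_CRIT_DF5 = 2.571


def five_by_two_cv_t(diffs):
    """Return the 5x2cv paired t statistic.

    diffs: five (p1, p2) pairs, one pair per repetition, where each value is
    score_A - score_B on one of the two folds of that repetition.
    """
    if len(diffs) != 5 or any(len(pair) != 2 for pair in diffs):
        raise ValueError("expected 5 repetitions with 2 folds each")

    variances = []
    for p1, p2 in diffs:
        mean = (p1 + p2) / 2.0
        # Variance estimate for this repetition (two folds).
        variances.append((p1 - mean) ** 2 + (p2 - mean) ** 2)

    denom = math.sqrt(sum(variances) / 5.0)
    if denom == 0.0:
        raise ValueError("all variance estimates are zero; statistic undefined")

    # The numerator is the difference from the first fold of the first repetition.
    return diffs[0][0] / denom


if __name__ == "__main__":
    # Hypothetical accuracy differences, for illustration only.
    example = [(0.02, 0.04), (0.03, 0.01), (0.05, 0.03), (0.02, 0.02), (0.04, 0.02)]
    t = five_by_two_cv_t(example)
    print("t =", round(t, 3), "significant at 5%:", abs(t) > T_CRIT_DF5)
```

The statistic is compared against a t distribution with 5 degrees of freedom, so the difference counts as significant at the 5% level only if |t| exceeds roughly 2.571.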

Dear Ali and Aline,

Thank you very much for your replies and suggestions. Yes, I know the X-Partitioner and X-Aggregator nodes. Even though they create a loop through the k folds, I would still need to use them inside an overarching loop to get ‘repeated cross-validation’ and a more meaningful t-test.
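To make concrete what the outer loop would feed into the test, here is a rough Python sketch of a paired t statistic over the per-fold differences of a repeated cross-validation. It uses the corrected resampled variant (Nadeau and Bengio), which, as far as I know, is what Weka's Experimenter applies by default; please treat that as an assumption. The function name and the numbers are hypothetical.

```python
import math
import statistics


def corrected_resampled_t(diffs, n_train, n_test):
    """Corrected resampled paired t statistic.

    diffs:   per-fold differences (score_A - score_B), one value per fold
             across all repetitions, e.g. 100 values for 10x10-fold CV.
    n_train: number of training rows in each fold.
    n_test:  number of test rows in each fold.
    Compare the result against a t distribution with len(diffs) - 1
    degrees of freedom.
    """
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two differences")

    mean = statistics.mean(diffs)
    var = statistics.variance(diffs)  # sample variance (n - 1 in the denominator)
    if var == 0:
        raise ValueError("zero variance; statistic undefined")

    # The n_test / n_train term inflates the variance to account for the
    # overlap between training sets; a plain paired t-test uses only 1 / n.
    return mean / math.sqrt((1.0 / n + n_test / n_train) * var)


if __name__ == "__main__":
    # Hypothetical differences, for illustration only.
    example = [0.01, 0.03, 0.02, 0.04, 0.00, 0.02]
    print(round(corrected_resampled_t(example, n_train=90, n_test=10), 3))
```

Without the correction, the folds are treated as independent samples, which tends to overstate significance because the training sets overlap heavily.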

I will have a closer look at the 5x2cv paired t-test!

best regards

Stephan
