Hello

We couldn’t get the AI Extension Example Workflows for Vector Space to work. We used a free API key for the AI Authenticator, but the flow crashes on the FAISS Vector Store Creator node because it says that the number of allowed requests was exceeded. We tried to load embedding models from the downloadable ones in GPT4All, but we didn’t succeed with either Llama2 or Wizard v1.1. Is there any way to make these flows work without having to pay for an API key?

Thanks

It might help if you share the flow and data.

The free API has request limits, so I’m not sure how many requests you have sent sequentially.

Regarding the nodes, I also experienced some issues and missing features. @ScottF, maybe the KNIME devs/team can shed some light on the open questions here.

br

Hello @Daniel_Weikert and @ScottF ,

I am @lsandinop 's colleague.

The workflow and data we are working with are the example on the Hub.

I truly appreciate your help. Warm regards!

Hello @MoLa_Data and @lsandinop,

Unfortunately, there is no support for local embedding models yet, so you have to use either the OpenAI API or Hugging Face Hub, both of which enforce rate limits on their free tier.

However, there will be a local embeddings model in the next release.

If you like you can already check it out in the nightly build (https://update.knime.com/community-contributions/trunk).

Sorry for the inconvenience,

Best,

Adrian

Hello @nemad

The link doesn’t work.

If you want, you can send it to me by email: angelmolinalaguna@gmail.com

Thank you so much.

Sorry, I didn’t explain it clearly.

To check out the nightly you need to

- Download and install a nightly of the KNIME Analytics Platform from https://www.knime.com/nightly-build-downloads?&

- Start the AP

- Add the update site https://update.knime.com/community-contributions/trunk in Preferences → Install/Update → Available Software Sites

- Install the KNIME AI Extension (Labs)

Cheers,

Adrian

Hi @nemad

Is it possible to store the vector store locally and then load it again?

I tried but encountered an error when saving it with the Model Writer.

It only worked with a FAISS index that I created directly from Python, not with the KNIME nodes.

Also, is the agent actually using the vector store, and is there a “verbose” option to see that?

Thanks

Hello @Daniel_Weikert,

can you give some more detail on the error you receive?

I just tried to save and load a vector store that uses the OpenAI Embedding with the Model Writer and Model Reader node and that worked fine.

There is a caveat, though: You will still need to provide the correct credentials in the nodes downstream of the reader as those are not stored as part of the model for security reasons.
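To illustrate the caveat, here is a toy sketch in plain Python. It is not the format the Model Writer uses; the function names `save_store` and `load_store` and the JSON layout are made up for this example. The point is only that the file holds vectors and documents, while the key has to be supplied again after loading.

```python
import json
import os
import tempfile

def save_store(path, vectors, documents):
    """Write vectors and documents to disk. No credentials are written."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"vectors": vectors, "documents": documents}, f)

def load_store(path, api_key):
    """Load the stored data and attach the key supplied by the caller."""
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    data["api_key"] = api_key  # lives in memory only, never in the file
    return data

# Round trip with a hypothetical two-document store and a placeholder key.
path = os.path.join(tempfile.mkdtemp(), "store.json")
save_store(path, [[0.1, 0.2], [0.3, 0.4]], ["doc a", "doc b"])
loaded = load_store(path, api_key="placeholder-key")
```

In the KNIME workflow the equivalent of passing `api_key` again is connecting the authenticated credentials to the nodes after the reader.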

Best,

Adrian

@nemad

How did you store it with the Model Writer? What format and naming did you use?

Also, was that in version 4.x or 5.1?

Thx

Hm, I think we might be referring to different things.

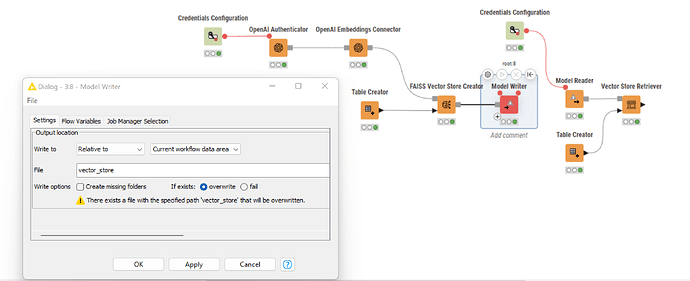

Here is a screenshot of my workflow in 5.1.0:

The naming doesn’t really matter to the writer and reader, but it might influence how your OS displays the file when you locate it in the file explorer.

ah ok

@nemad thanks for the update

I thought I could use the FAISS Vector Store Reader after the Model Reader.

With the retriever as in your screenshot, I run into a rate limit error, which is kind of strange for a small sample dataset. I would assume that only the queries need to be embedded, and therefore sent to the API, since the vector store is already persisted.

br

Yes, only the query should be embedded and then similar vectors and their associated documents are retrieved from the vector store.
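As a rough illustration of that flow, here is a minimal sketch in plain Python. It is not the extension's code: `toy_embed` is a made-up stand-in for a real embedding model (it just counts letters), and the three documents are invented. It only shows that the documents are embedded once when the store is built, and that each lookup costs a single embedding call for the query.

```python
import math

stats = {"embed_calls": 0}  # counts how often the "model" is called

def toy_embed(text):
    """Hypothetical stand-in for an embedding model: letter counts a-z."""
    stats["embed_calls"] += 1
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts

def cosine(u, v):
    """Cosine similarity of two equally long vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

# Built once, up front: the only step that embeds every document.
documents = ["KNIME workflow", "vector store", "rate limit"]
store = [(toy_embed(doc), doc) for doc in documents]

def retrieve(query, k=1):
    """Embed the query once, then rank the stored vectors by similarity."""
    query_vec = toy_embed(query)
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[0]), reverse=True)
    return [doc for _, doc in ranked[:k]]
```

With a persisted store, the three calls made while building `store` would already be paid for, so a lookup such as `retrieve("vector store")` adds just one more.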

When I was experimenting with OpenAI’s free credit, I also had seemingly random rate limit errors from time to time even though I should have been nowhere near the limit. I suspect that they also return such errors when their system is under too much load.
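If you call the API from your own Python code, a common way to ride out such intermittent errors is to retry with exponential backoff. The sketch below is generic and makes assumptions: `RateLimitError` is a placeholder for whatever exception your client library raises, and `call_with_backoff` is a helper name invented here. It will not help if the quota is genuinely used up.

```python
import random
import time

class RateLimitError(Exception):
    """Placeholder for the rate limit exception of your client library."""

def call_with_backoff(request, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call request(); on a rate limit error wait and retry, doubling the wait."""
    for attempt in range(max_attempts):
        try:
            return request()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, let the caller see the error
            # 1x, 2x, 4x, ... the base delay, plus jitter to spread out retries
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

The `sleep` parameter is only there so the waiting can be swapped out, for example in a test.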

@nemad

Have you tried it with Hugging Face embeddings? I used the repo sentence-transformers/all-MiniLM-L6-v2.

It works, but it’s pretty slow on my end.

My test dataset only contained 30 rows to look up. The vector store also had only 30 rows, as I used exactly the same data for embedding.

br

Yes, I also found Hugging Face to be quite slow, but I only tried the free tier, where I kind of expected it to be slow.

The model you tried is actually the one that the next version of our AI Extension will support via GPT4All.

At least on my laptop the performance is acceptable, especially for a model running on consumer hardware.

@nemad

Thanks, I appreciate your work here.

I am interested to see more implementations of available tools for the LLM, or maybe even a way to do function calling (using a function from a Python Script node as input or something like that).

Maybe different kinds of chains, …

As far as I could see, you are using LangChain under the hood.

Will there be some performance difference compared to running a script directly?

br

Yes, the extension is based on LangChain and we have some ideas going in that direction.

Performance-wise you will probably not see a big difference compared to using the Python Script node because the backend is essentially the same.

@nemad

When I tried the vector store as a tool, I was not able to “force” the agent to use it. It always referred to its “own” knowledge. My description was quite clear. Any thoughts/ideas why that is?

When I use it in LangChain as a retriever, I have never had issues so far.

thx and br . Enjoy your weekend

After some investigation and debugging, we found out that, at least in our tests, the issue was not that the agent didn’t use the tool but that it used it incorrectly, because it didn’t seem to associate the general tool description correctly with the tool input.

We also found a remedy (turning the Tool into a StructuredTool with parameter descriptions) that will be included in the next release.
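To give an idea of the difference, here is a plain-Python sketch. It is neither LangChain's API nor the extension's implementation: `ToolSpec`, the tool name, and the descriptions are all invented for illustration. It only shows what the model gets to read in each case: a general description alone, versus a description plus an explanation of each input.

```python
from dataclasses import dataclass, field

@dataclass
class ToolSpec:
    """Hypothetical tool description as it might be rendered into a prompt."""
    name: str
    description: str
    parameters: dict = field(default_factory=dict)  # parameter name -> description

    def render(self):
        lines = [f"{self.name}: {self.description}"]
        for param, desc in self.parameters.items():
            lines.append(f"  - {param}: {desc}")
        return "\n".join(lines)

# General description only: the model has to guess what the input should be.
plain = ToolSpec("vector_store_lookup", "Look up passages in the product manual.")

# Same tool, but the input is described explicitly.
structured = ToolSpec(
    "vector_store_lookup",
    "Look up passages in the product manual.",
    {"query": "A natural-language question to search the manual for."},
)
```

Printing `structured.render()` next to `plain.render()` makes the extra line for `query` visible, which is the kind of information the parameter descriptions add.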
