Hello everyone,

While trying to execute the Create Spark Context (Livy) node, I am getting the following error:

ERROR Create Spark Context (Livy) 3:230 Execute failed: Found: (LivyHttpException)
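A note on the message itself, offered as a guess rather than a diagnosis: "Found" is the standard reason phrase for HTTP status 302, so the exception text may simply be the reason phrase of a redirect response returned by the Livy URL. That the Livy client builds its message from the HTTP reason phrase is an assumption on my part, not something the logs confirm. The small Python sketch below only looks up which standard status code carries that phrase; the helper name `status_for_reason` is made up for illustration.

```python
from http import HTTPStatus


def status_for_reason(reason: str):
    """Return the standard HTTP statuses whose reason phrase equals `reason`.

    Trailing colons and surrounding whitespace are ignored, so the raw
    exception text ("Found:") can be passed in directly.
    """
    wanted = reason.strip().rstrip(":").strip().lower()
    return [status for status in HTTPStatus if status.phrase.lower() == wanted]


# Text that follows "LivyHttpException:" in the error message.
message = "Found:"

for status in status_for_reason(message):
    kind = "redirect" if 300 <= status < 400 else "other"
    print(int(status), status.phrase, kind)
```

If the redirect reading is right, the next thing to check would be where the server at the configured Livy URL is redirecting to (for example a login page or a different gateway path), but that is outside what the logs show.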

Here are the specs used:

KNIME Analytics Platform: 4.7.4

Spark version: 2.4

Hosted by Cloudera

Here are the logs for the execution of the node:

2023-12-06 09:32:39,116 : DEBUG : main : : NodeContainerEditPart : : : Create Spark Context (Livy) 5:230 (CONFIGURED)

2023-12-06 09:32:41,821 : DEBUG : main : : ExecuteAction : : : Creating execution job for 1 node(s)...

2023-12-06 09:32:41,822 : DEBUG : main : : NodeContainer : : : Create Spark Context (Livy) 5:230 has new state: CONFIGURED_MARKEDFOREXEC

2023-12-06 09:32:41,822 : DEBUG : main : : NodeContainer : : : Create Spark Context (Livy) 5:230 has new state: CONFIGURED_QUEUED

2023-12-06 09:32:41,823 : DEBUG : main : : NodeContainer : : : Spark-par-livy 5 has new state: EXECUTING

2023-12-06 09:32:41,823 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : WorkflowManager : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 doBeforePreExecution

2023-12-06 09:32:41,823 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : NodeContainer : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 has new state: PREEXECUTE

2023-12-06 09:32:41,823 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : WorkflowDataRepository : Create Spark Context (Livy) : 5:230 : Adding handler 3ac0ff9f-8b90-49ac-921b-68a2c13c0868 (Create Spark Context (Livy) 5:230: <no directory>) - 2 in total

2023-12-06 09:32:41,823 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : WorkflowManager : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 doBeforeExecution

2023-12-06 09:32:41,823 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : NodeContainer : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 has new state: EXECUTING

2023-12-06 09:32:41,823 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : LocalNodeExecutionJob : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 Start execute

2023-12-06 09:32:41,825 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : LivySparkContext : Create Spark Context (Livy) : 5:230 : Creating new remote Spark context sparkLivy://4baba528-5caf-4f9f-8db4-d1d46f0d18de at https://custom.livy.url.bigdata:8443/ with authentication KERBEROS.

2023-12-06 09:32:41,827 : INFO : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : SparkContext : Create Spark Context (Livy) : 5:230 : Spark context sparkLivy://4baba528-5caf-4f9f-8db4-d1d46f0d18de changed status from CONFIGURED to OPEN

2023-12-06 09:32:42,668 : INFO : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : SparkContext : Create Spark Context (Livy) : 5:230 : Spark context sparkLivy://4baba528-5caf-4f9f-8db4-d1d46f0d18de changed status from OPEN to CONFIGURED

2023-12-06 09:32:42,668 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : Node : Create Spark Context (Livy) : 5:230 : reset

2023-12-06 09:32:42,668 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : SparkNodeModel : Create Spark Context (Livy) : 5:230 : In reset() of SparkNodeModel. Calling deleteSparkDataObjects.

2023-12-06 09:32:42,669 : ERROR : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : Node : Create Spark Context (Livy) : 5:230 : Execute failed: Found: (LivyHttpException)

java.util.concurrent.ExecutionException: org.apache.livy.client.http.LivyHttpException: Found:

at org.apache.livy.client.common.AbstractHandle.get(AbstractHandle.java:198)

at org.apache.livy.client.common.AbstractHandle.get(AbstractHandle.java:221)

at org.knime.bigdata.spark.core.livy.context.LivySparkContext.waitForFuture(LivySparkContext.java:495)

at org.knime.bigdata.spark.core.livy.context.LivySparkContext.createRemoteSparkContext(LivySparkContext.java:472)

at org.knime.bigdata.spark.core.livy.context.LivySparkContext.open(LivySparkContext.java:320)

at org.knime.bigdata.spark.core.context.SparkContext.ensureOpened(SparkContext.java:145)

at org.knime.bigdata.spark.core.livy.node.create.LivySparkContextCreatorNodeModel2.executeInternal(LivySparkContextCreatorNodeModel2.java:85)

at org.knime.bigdata.spark.core.node.SparkNodeModel.execute(SparkNodeModel.java:240)

at org.knime.core.node.NodeModel.executeModel(NodeModel.java:549)

at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1267)

at org.knime.core.node.Node.execute(Node.java:1041)

at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:595)

at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:98)

at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:201)

at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:117)

at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:367)

at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:221)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)

at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)

Caused by: org.apache.livy.client.http.LivyHttpException: Found:

at org.apache.livy.client.http.LivyConnection.executeRequest(LivyConnection.java:304)

at org.apache.livy.client.http.LivyConnection.access$000(LivyConnection.java:68)

at org.apache.livy.client.http.LivyConnection$3.run(LivyConnection.java:277)

at java.base/java.security.AccessController.doPrivileged(Unknown Source)

at java.base/javax.security.auth.Subject.doAs(Unknown Source)

at org.apache.livy.client.http.LivyConnection.sendRequest(LivyConnection.java:274)

at org.apache.livy.client.http.LivyConnection.sendJSONRequest(LivyConnection.java:254)

at org.apache.livy.client.http.LivyConnection.post(LivyConnection.java:215)

at org.apache.livy.client.http.CreateSessionHandleImpl.performStart(CreateSessionHandleImpl.java:144)

at org.apache.livy.client.common.AbstractHandle$5.run(AbstractHandle.java:267)

at org.apache.livy.client.common.AbstractHandle$4.run(AbstractHandle.java:231)

at org.apache.livy.client.common.AbstractHandle$2.call(AbstractHandle.java:156)

at org.apache.livy.client.common.AbstractHandle$2.call(AbstractHandle.java:153)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

2023-12-06 09:32:42,669 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : WorkflowManager : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 doBeforePostExecution

2023-12-06 09:32:42,669 : DEBUG : pool-7-thread-1 : : DestroyAndDisposeSparkContextTask : : : Destroying and disposing Spark context: sparkLivy://4baba528-5caf-4f9f-8db4-d1d46f0d18de

2023-12-06 09:32:42,670 : INFO : pool-7-thread-1 : : LivySparkContext : : : Destroying Livy Spark context

2023-12-06 09:32:42,670 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : NodeContainer : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 has new state: POSTEXECUTE

2023-12-06 09:32:42,670 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : WorkflowManager : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 doAfterExecute - failure

2023-12-06 09:32:42,670 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : Node : Create Spark Context (Livy) : 5:230 : reset

2023-12-06 09:32:42,670 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : SparkNodeModel : Create Spark Context (Livy) : 5:230 : In reset() of SparkNodeModel. Calling deleteSparkDataObjects.

2023-12-06 09:32:42,670 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : Node : Create Spark Context (Livy) : 5:230 : clean output ports.

2023-12-06 09:32:42,670 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : WorkflowDataRepository : Create Spark Context (Livy) : 5:230 : Removing handler 3ac0ff9f-8b90-49ac-921b-68a2c13c0868 (Create Spark Context (Livy) 5:230: <no directory>) - 1 remaining

2023-12-06 09:32:42,670 : DEBUG : pool-7-thread-1 : : DestroyAndDisposeSparkContextTask : : : Destroying and disposing Spark context: sparkLivy://ac59f61c-7af7-42ae-b401-fd8cc0ddf15e

2023-12-06 09:32:42,670 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : NodeContainer : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 has new state: IDLE

2023-12-06 09:32:42,671 : INFO : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : SparkContext : Create Spark Context (Livy) : 5:230 : Spark context sparkLivy://ea502870-10f0-4e91-837c-574b694ba553 changed status from NEW to CONFIGURED

2023-12-06 09:32:42,671 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : Node : Create Spark Context (Livy) : 5:230 : Configure succeeded. (Create Spark Context (Livy))

2023-12-06 09:32:42,671 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : NodeContainer : Create Spark Context (Livy) : 5:230 : Create Spark Context (Livy) 5:230 has new state: CONFIGURED

2023-12-06 09:32:42,671 : DEBUG : KNIME-Worker-17-Create Spark Context (Livy) 5:230 : : NodeContainer : Create Spark Context (Livy) : 5:230 : Spark-par-livy 5 has new state: CONFIGURED

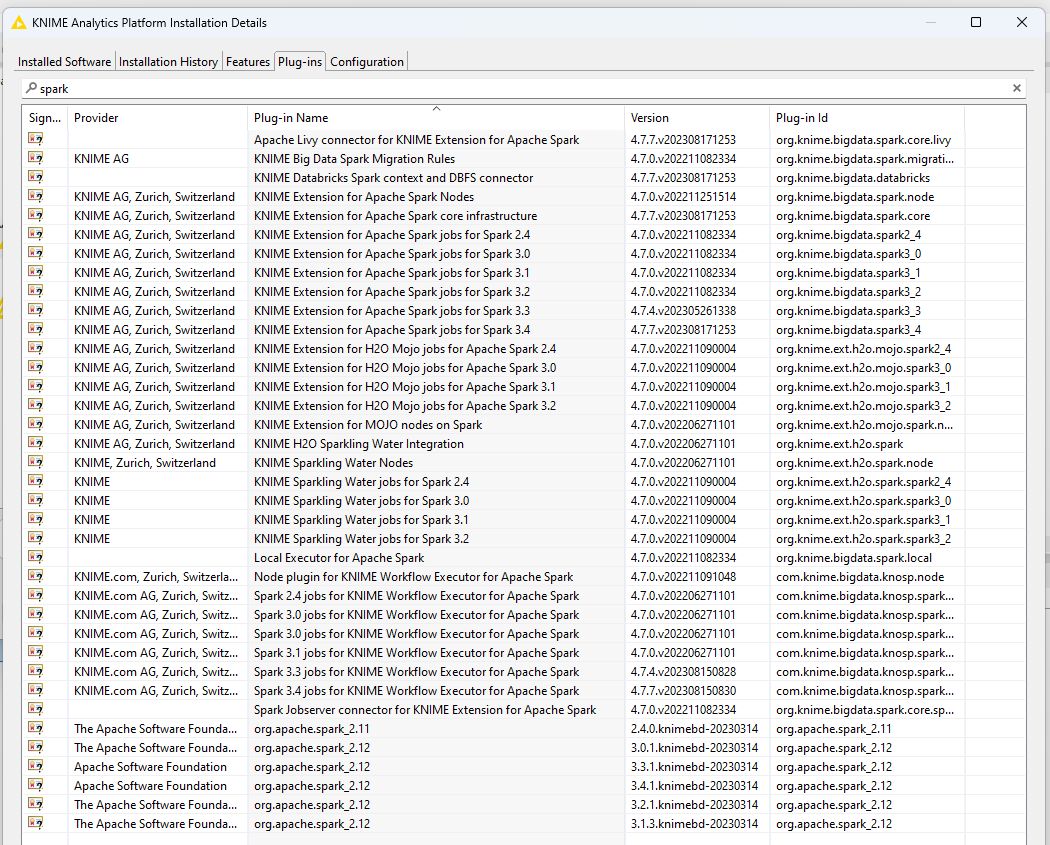

Here are the Spark plug-ins installed:

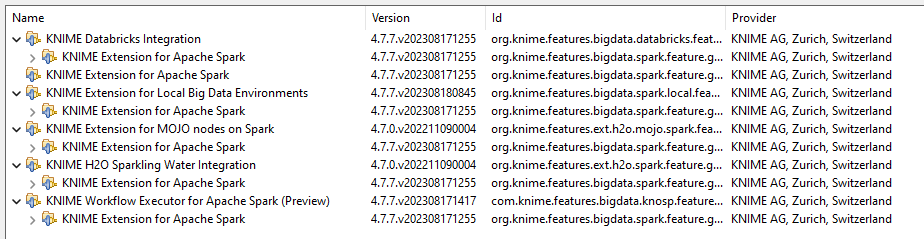

And the extensions:

Cheers,

Cristian