Hello KNIME Community,

I want to use DeepSeek to create embeddings, but I’m not sure how to go about it. According to the FAISS Vector Store Creator node description, the node can also build the store from embeddings you supply yourself:

“By default, the node embeds the selected documents using the embeddings model, but it is also possible to create the vector store from existing embeddings by specifying the corresponding embeddings column in the node dialog.”
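As I understand it, the “existing embeddings” route means each row carries a fixed-length list of floats next to its document. Below is a minimal NumPy sketch of what an exact (flat) index does with such vectors, assuming cosine similarity. It roughly corresponds to FAISS’s `IndexFlatIP` on L2-normalised vectors, but it is only an illustration; I don’t know which index type or distance the KNIME node uses internally. The function names are my own.

```python
import numpy as np


def build_flat_index(embeddings):
    """L2-normalise precomputed vectors so an inner product equals cosine similarity."""
    vectors = np.asarray(embeddings, dtype=np.float32)  # fails if rows have different lengths
    if vectors.ndim != 2:
        raise ValueError("expected one embedding vector per row, all of the same length")
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.clip(norms, 1e-12, None)  # guard against all-zero vectors


def search(index, query, k=3):
    """Return (row, score) pairs for the k stored vectors most similar to the query."""
    q = np.asarray(query, dtype=np.float32)
    q = q / max(float(np.linalg.norm(q)), 1e-12)
    scores = index @ q  # exact search: compare the query against every stored vector
    top = np.argsort(-scores)[:k]
    return [(int(i), float(scores[i])) for i in top]
```

For example, `search(build_flat_index([[1, 0], [0, 1], [1, 1]]), [1, 0], k=2)` ranks row 0 first, then row 2. All vectors, including the query, must come from the same model, or the similarities are meaningless.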

I know DeepSeek doesn’t currently offer a dedicated embedding model, but I’ve seen people create embeddings from a DeepSeek model hosted on Hugging Face, running it in Colab with PyTorch and vLLM.
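From what I’ve seen, the Colab approach boils down to loading the model and mean-pooling its last hidden states over the non-padding tokens, because the model was not trained to produce embeddings directly. Here is a rough sketch of that idea. It uses plain Hugging Face `transformers` rather than vLLM and assumes `transformers` and PyTorch are installed. The model ID is only an example I picked, and I haven’t checked how well these vectors work for retrieval.

```python
import numpy as np


def mean_pool(hidden_states, attention_mask):
    """Average token vectors per text, ignoring padding positions.

    hidden_states: (batch, seq_len, dim); attention_mask: (batch, seq_len) of 0/1.
    """
    mask = np.asarray(attention_mask, dtype=np.float32)[..., None]  # (batch, seq_len, 1)
    summed = (np.asarray(hidden_states, dtype=np.float32) * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)  # avoid division by zero
    return summed / counts  # (batch, dim)


def embed_texts(texts, model_id="deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"):
    """Embed texts via a causal LM's last hidden layer (model_id is an example, not a recommendation)."""
    # Imported lazily so mean_pool can be used without these heavy dependencies.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token  # decoder-only tokenizers may lack a pad token
    model = AutoModel.from_pretrained(model_id)  # base model without LM head; exposes last_hidden_state
    model.eval()

    batch = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**batch)
    hidden = outputs.last_hidden_state.float().cpu().numpy()
    return mean_pool(hidden, batch["attention_mask"].cpu().numpy())
```

Calling `embed_texts(["first document", "second document"])` would return one row per text, and each row could then go into the embeddings column the node description mentions. Downloading and running the model this way still happens on whatever machine executes the code.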

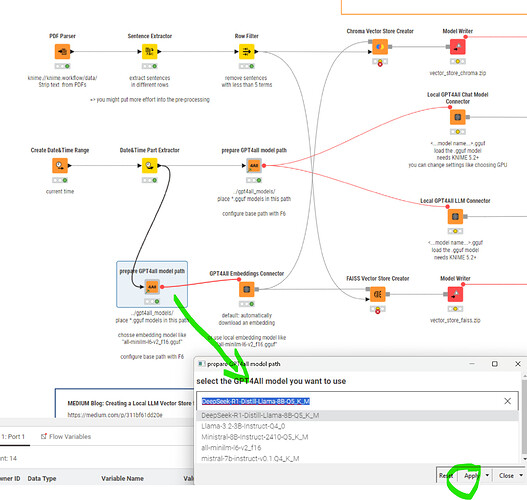

Is this possible with DeepSeek in KNIME? I’ve also seen a thread where someone built a workflow to do this using GPT4All (workflow linked here).

Is there a way to do this in KNIME without running the model locally? Ideally I’d like to avoid paying for anything, so would I need to run something like the GPT4All workflow I linked?

Thanks in advance!