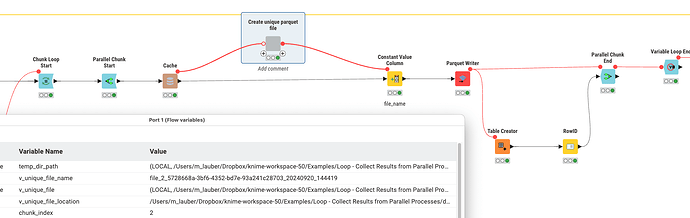

@mwiegand to be honest, writing from parallel processes into the same local file is always a risk and will most likely result in failures (and this is most likely not a KNIME-specific 'bug' but just the way it is). From my perspective, the best way to deal with this is to create a unique file name for each loop iteration or chunk, write each chunk to its own Parquet file (CSV or Excel would also work), and then import them back into a single file.

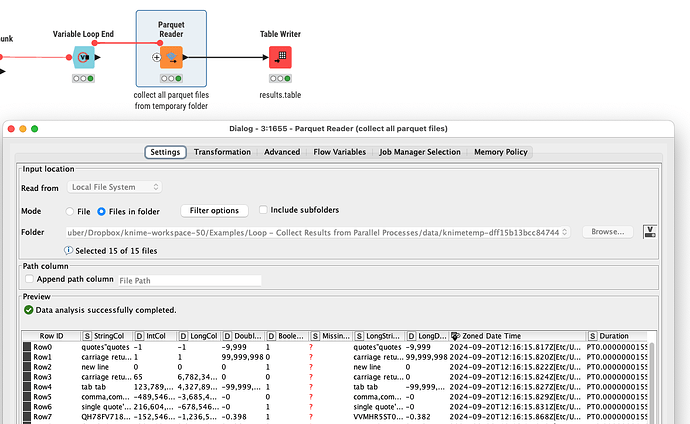

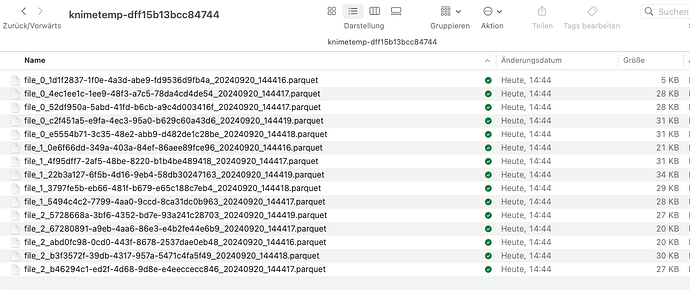

Parquet, as a big data format, is perfectly fine with handling several individual files and then treating them as one.

I think the setup with loops etc. will have no problem handling the files as individual files.
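Outside of KNIME, the same idea can be sketched in a few lines of Python. This is only an illustration, not the workflow from the screenshots: the function names, the `chunk_<index>_<random>.csv` naming scheme and the two-column layout are made up for the example, and it uses CSV from the standard library instead of Parquet so that it runs without extra packages. Each parallel worker writes only to its own file, and the files are combined in a separate step afterwards.

```python
import csv
import uuid
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

HEADER = ["chunk_id", "value"]  # hypothetical column layout


def write_chunk(out_dir, chunk_id, rows):
    """Write one chunk to its own file; the name is unique per call."""
    # Assumed naming scheme: chunk index plus a random suffix.
    path = Path(out_dir) / f"chunk_{chunk_id:05d}_{uuid.uuid4().hex}.csv"
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(HEADER)
        writer.writerows(rows)
    return path


def run_parallel(out_dir, n_chunks=8, rows_per_chunk=100, workers=4):
    """Run the chunk writers in parallel; no two workers share a file."""
    def job(chunk_id):
        rows = [(chunk_id, i) for i in range(rows_per_chunk)]
        return write_chunk(out_dir, chunk_id, rows)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(job, range(n_chunks)))


def combine_chunks(out_dir, target):
    """Read all chunk files back, in name order, into one file."""
    files = sorted(Path(out_dir).glob("chunk_*.csv"))
    with open(target, "w", newline="", encoding="utf-8") as out:
        writer = csv.writer(out)
        writer.writerow(HEADER)
        for path in files:
            with open(path, newline="", encoding="utf-8") as handle:
                reader = csv.reader(handle)
                next(reader)  # skip the per-file header
                writer.writerows(reader)
    return len(files)
```

The combine step only runs after all writers have finished, so there is never more than one process touching a given file. The target file should use a name that does not match `chunk_*.csv`, otherwise it would be picked up as an input on a second run.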

Maybe give it a try. I will expand the workflow over time. Another option that could work would be to collect the results in a database like H2 or SQLite, which might be able to deal with parallel access.
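For the database idea, here is a rough Python sketch with SQLite, again just an illustration under my own assumptions (the `results` table, its columns and the function names are hypothetical, and I have not tested this from within KNIME). SQLite allows only one writer at a time, so the parallel inserts are effectively queued: each worker opens its own connection and the `timeout` argument of `sqlite3.connect` tells it how long to wait for the lock before giving up.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor


def init_db(db_path):
    """Create the (hypothetical) results table once, before the workers start."""
    con = sqlite3.connect(db_path)
    try:
        with con:
            con.execute(
                "CREATE TABLE IF NOT EXISTS results (chunk_id INTEGER, value INTEGER)"
            )
    finally:
        con.close()


def insert_chunk(db_path, chunk_id, values, timeout=30.0):
    """Insert one chunk using a connection owned by the calling worker."""
    # timeout: seconds to wait if another writer currently holds the lock.
    con = sqlite3.connect(db_path, timeout=timeout)
    try:
        with con:  # commits on success, rolls back on error
            con.executemany(
                "INSERT INTO results (chunk_id, value) VALUES (?, ?)",
                [(chunk_id, v) for v in values],
            )
    finally:
        con.close()


def collect_parallel(db_path, n_chunks=8, rows_per_chunk=100, workers=4):
    """Insert all chunks from parallel workers and return the final row count."""
    init_db(db_path)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(
            lambda c: insert_chunk(db_path, c, range(rows_per_chunk)),
            range(n_chunks),
        ))
    con = sqlite3.connect(db_path)
    try:
        return con.execute("SELECT COUNT(*) FROM results").fetchone()[0]
    finally:
        con.close()
```

Keeping each insert in one short transaction means the lock is held only briefly, which is what makes waiting with a timeout workable. With very many or very long-running writers the individual-files approach is probably still the safer bet.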