We occasionally encounter an error that blows my mind. I have tried everything I can think of, but I must be missing something.

We run two flows with a similar data pattern. The first GETs lots of data from APIs and stores it as JSON in the data lake (no issue there), for instance product data from an e-commerce platform such as Lightspeed. The second, which occasionally fails, reads the JSON, compares its contents with a (dimension-type) SQL table, inserts new products into the SQL database, and updates fields that have changed.

The DB Insert nodes give the error from the title (output type mapping missing), but only when the flow runs on the server. When I process the exact same JSON or CSV files by running the flow locally, I never encounter any issue. The server also sometimes works, which is mind-boggling: the daily run produced an error today and on the 30th of November, but not on the 1st and 2nd of December.
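To make the compare step of the failing flow concrete, here is a rough Python sketch of the logic. The real flow is built from nodes, not code, so this is only an illustration: the function name `diff_products` and the sample rows are made up, and I am assuming `variantId` is the key (the column names are taken from the error message further down).

```python
def diff_products(incoming, existing, key="variantId"):
    """Split incoming records into rows to insert and rows to update.

    `incoming` stands in for the parsed JSON, `existing` for the rows
    currently in the SQL dimension table. Both are lists of dicts.
    """
    existing_by_key = {row[key]: row for row in existing}
    to_insert, to_update = [], []
    for record in incoming:
        current = existing_by_key.get(record[key])
        if current is None:
            # Key not present in the table yet: new product.
            to_insert.append(record)
        elif any(current.get(col) != val for col, val in record.items()):
            # Key exists but at least one field differs: changed product.
            to_update.append(record)
    return to_insert, to_update


# Hypothetical sample data, only for illustration.
incoming = [
    {"variantId": 1, "priceExcl": 10.0, "priceIncl": 12.1},
    {"variantId": 2, "priceExcl": 20.0, "priceIncl": 24.2},
    {"variantId": 3, "priceExcl": 5.0, "priceIncl": 6.05},
]
existing = [
    {"variantId": 1, "priceExcl": 10.0, "priceIncl": 12.1},
    {"variantId": 2, "priceExcl": 18.0, "priceIncl": 21.78},
]

to_insert, to_update = diff_products(incoming, existing)
```

In this sketch the row with `variantId` 3 would go to the insert branch and the row with `variantId` 2 to the update branch; the insert branch is where the DB Insert nodes sit in our flow.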

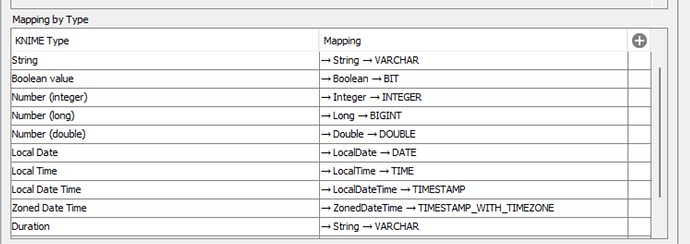

The nodes are configured with output type mappings, which to my knowledge should cover the data types:

Is there anyone with experience with this issue?

Should I configure data type mappings per column name, instead of by type?

Here is an excerpt of an error message:

DB Insert 4:1944

Message

Execute failed: Output type mappings are missing for columns: variantId (Number (integer)), priceExcl (Number (double)), priceIncl (Number (double)),