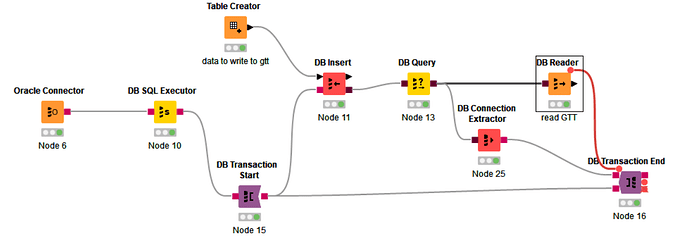

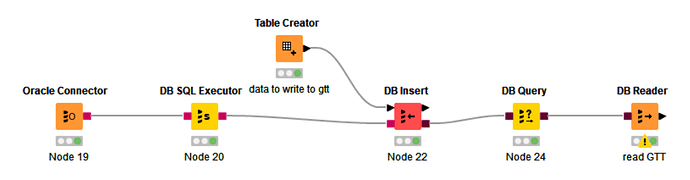

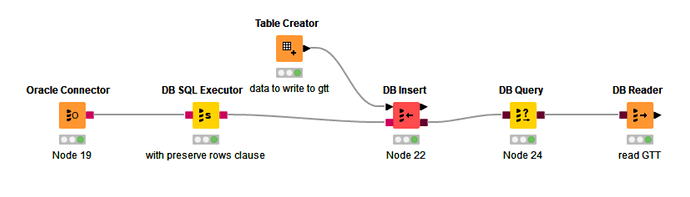

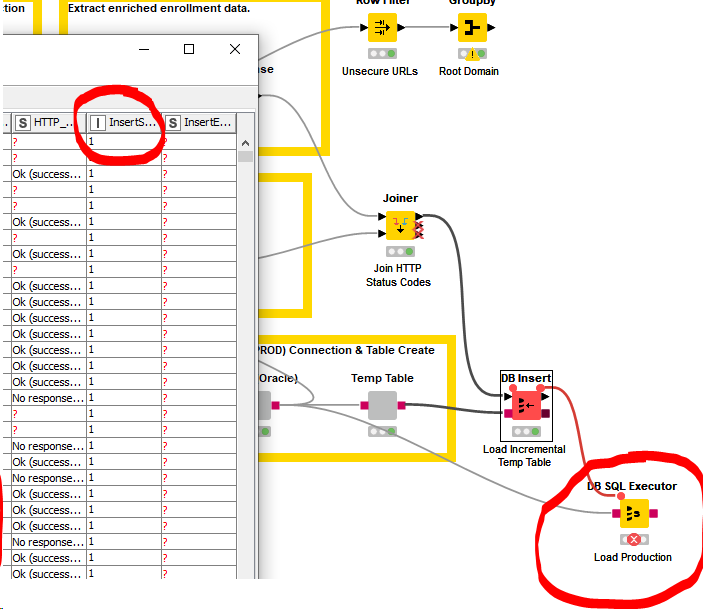

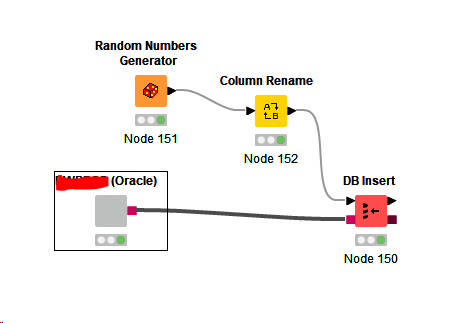

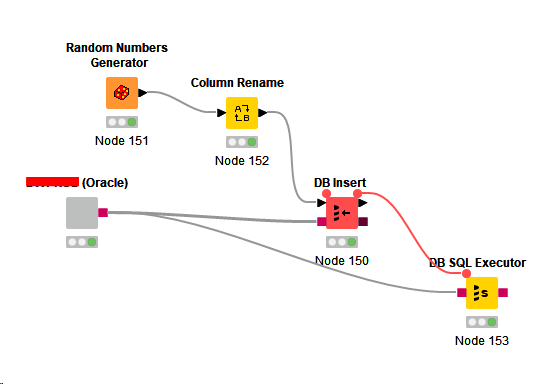

This is the debug output. It indicates that DB Insert thinks it has succeeded in loading data into a temp table, but running the query manually returns zero records, even though the temp table itself persists. The log says the records were committed, yet nothing is there. This was previously working, so I'm trying to figure out how to troubleshoot something like this.

DEBUG DB Insert 3:155 Acquiring connection.

DEBUG DB Insert 3:155 All the statements have already been closed.

DEBUG DB Insert 3:155 The connection has been relinquished.

DEBUG DB Insert 3:155 The managed connection has been closed.

DEBUG DB Insert 3:155 The transaction managing connection has been closed.

DEBUG DB Insert 3:155 Configure succeeded. (DB Insert)

DEBUG DB Insert 3:155 DB Insert 3:155 has new state: CONFIGURED

DEBUG DB SQL Executor 3:145 Configure succeeded. (DB SQL Executor)

DEBUG DB Insert 3:155 DB SQL Executor 3:145 has new state: CONFIGURED

DEBUG WorkflowEditor Workflow event triggered: WorkflowEvent [type=NODE_SETTINGS_CHANGED;node=3:155;old=null;new=null;timestamp=Jan 24, 2024, 1:14:50 PM]

DEBUG ExecuteAction Creating execution job for 1 node(s)…

DEBUG NodeContainer DB Insert 3:155 has new state: CONFIGURED_MARKEDFOREXEC

DEBUG NodeContainer DB Insert 3:155 has new state: CONFIGURED_QUEUED

DEBUG NodeContainer Project_v15 3 has new state: EXECUTING

DEBUG DB Insert 3:155 DB Insert 3:155 doBeforePreExecution

DEBUG DB Insert 3:155 DB Insert 3:155 has new state: PREEXECUTE

DEBUG DB Insert 3:155 Adding handler 7bcfdfd5-d2fa-4472-a6c4-22427bb4539c (DB Insert 3:155: ) - 47 in total

DEBUG DB Insert 3:155 DB Insert 3:155 doBeforeExecution

DEBUG DB Insert 3:155 DB Insert 3:155 has new state: EXECUTING

DEBUG DB Insert 3:155 DB Insert 3:155 Start execute

DEBUG DB Insert 3:155 KNIME Buffer cache statistics:

DEBUG DB Insert 3:155 11 tables currently held in cache

DEBUG DB Insert 3:155 35 distinct tables cached

DEBUG DB Insert 3:155 2 tables invalidated successfully

DEBUG DB Insert 3:155 23 tables dropped by garbage collector

DEBUG DB Insert 3:155 41 cache hits (hard-referenced)

DEBUG DB Insert 3:155 123 cache hits (softly referenced)

DEBUG DB Insert 3:155 2 cache hits (weakly referenced)

DEBUG DB Insert 3:155 1 cache misses

DEBUG DB Insert 3:155 Using Table Backend "BufferedTableBackend".

DEBUG DB Insert 3:155 Acquiring connection.

DEBUG DB Insert 3:155 Create SQL statement as prepareStatement: INSERT INTO "SCHEMA"."TEMP_TABLE" ("GA_ENROLLMENT_ID", "GA_PROMOCODE", "GA_REFERRER", "GA_SOURCE", "GA_MEDIUM", "GA_EVENT", "GA_DATE_HOUR", "GA_CHANNEL", "GA_SESSIONS", "STATUS", "ZIPCODE", "CREATIONDATE", "ADDRESSLINE1", "ADDRESSLINE2", "STATE", "CITY", "ZIP5", "ENROLLMENTLANGUAGE", "PLAN", "FIRST_NAME", "LAST_NAME", "EXTERNAL_ACCOUNT", "USACFORM", "ADL", "PREVIOUSENROLLMENTNUMBER", "QUALIFYDATE", "UNQUALIFYDATE", "PROMO_TYPE", "PROMO_REPORT_TYPE", "GEOLOCATION", "FIRST_CALL_DATE", "ANNIVERSARY_DATE", "IPADDRESS", "NOCOMMISSION", "FUNDINGTYPE", "APPLICATIONTOKEN", "ROOT_DOMAIN", "HTTP_STATUS_CODE", "HTTP_DESCRIPTION") VALUES (?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?)

DEBUG DB Insert 3:155 Using table format org.knime.core.data.container.DefaultTableStoreFormat

DEBUG DB Insert 3:155 Committing the small-scale transaction…

DEBUG DB Insert 3:155 The small-scale transaction has been successfully committed.

DEBUG DB Insert 3:155 Closing the small-scale transaction…

DEBUG DB Insert 3:155 Enabling auto-commit mode…

DEBUG DB Insert 3:155 The small-scale transaction has been successfully closed.

DEBUG DB Insert 3:155 The small-scale transaction has been removed.

DEBUG DB Insert 3:155 All the statements have already been closed.

DEBUG DB Insert 3:155 The connection has been relinquished.

DEBUG DB Insert 3:155 The managed connection has been closed.

DEBUG DB Insert 3:155 The transaction managing connection has been closed.

DEBUG DB Insert 3:155 Using Table Backend "BufferedTableBackend".

INFO DB Insert 3:155 DB Insert 3:155 End execute (2 mins, 58 secs)

DEBUG DB Insert 3:155 DB Insert 3:155 doBeforePostExecution

DEBUG DB Insert 3:155 DB Insert 3:155 has new state: POSTEXECUTE

DEBUG DB Insert 3:155 DB Insert 3:155 doAfterExecute - success

DEBUG DB Insert 3:155 DB Insert 3:155 has new state: EXECUTED

DEBUG DB SQL Executor 3:145 Configure succeeded. (DB SQL Executor)

DEBUG DB Insert 3:155 Project_v15 3 has new state: IDLE

DEBUG DB Insert 3:155 Buffer file (C:\Users\ID\AppData\Local\Temp\knime_Project_12675\knime_container_20240124_6869753549474515344.bin.snappy) is 2.98MB in size

DEBUG NodeContainerEditPart DB Insert 3:155 (EXECUTED)

DEBUG NodeContainerEditPart DB SQL Executor 3:145 (CONFIGURED)

DEBUG ExecuteAction Creating execution job for 1 node(s)…

DEBUG NodeContainer DB SQL Executor 3:145 has new state: CONFIGURED_MARKEDFOREXEC

DEBUG NodeContainer DB SQL Executor 3:145 has new state: CONFIGURED_QUEUED

DEBUG NodeContainer Project_v15 3 has new state: EXECUTING

DEBUG DB SQL Executor 3:145 DB SQL Executor 3:145 doBeforePreExecution

DEBUG DB SQL Executor 3:145 DB SQL Executor 3:145 has new state: PREEXECUTE

DEBUG DB SQL Executor 3:145 Adding handler 4e17839c-29a0-48f0-ab75-2be87d118ee6 (DB SQL Executor 3:145: ) - 48 in total

DEBUG DB SQL Executor 3:145 DB SQL Executor 3:145 doBeforeExecution

DEBUG DB SQL Executor 3:145 DB SQL Executor 3:145 has new state: EXECUTING

DEBUG DB SQL Executor 3:145 DB SQL Executor 3:145 Start execute

DEBUG DB SQL Executor 3:145 Acquiring connection.

DEBUG DB SQL Executor 3:145 All the statements have already been closed.

DEBUG DB SQL Executor 3:145 The connection has been relinquished.

DEBUG DB SQL Executor 3:145 The managed connection has been closed.

DEBUG DB SQL Executor 3:145 The transaction managing connection has been closed.

DEBUG DB SQL Executor 3:145 reset

ERROR DB SQL Executor 3:145 Execute failed: ORA-00942: table or view does not exist
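
Since the full log is long, here is a rough Python sketch for boiling a log like the one above down to the connection, commit, and error lines for each node. It assumes every entry has the shape `LEVEL node-name node-id message`, as in the lines pasted here. The names `summarize_log`, `LINE_RE`, `KEYWORDS`, and `SAMPLE` are made up for illustration and are not part of KNIME.

```python
import re

# Assumed entry shape: "<LEVEL> <node name> <workflow:node id> <message>",
# e.g. "DEBUG DB Insert 3:155 Acquiring connection."
LINE_RE = re.compile(r"^(DEBUG|INFO|WARN|ERROR)\s+(.+?)\s+(\d+:\d+)\s+(.*)$")

# Message fragments copied from the log above; adjust for other logs.
KEYWORDS = (
    "Acquiring connection",
    "successfully committed",
    "connection has been relinquished",
    "Execute failed",
)


def summarize_log(text):
    """Return (level, node, node_id, message) for connection, commit, and error lines."""
    events = []
    for raw in text.splitlines():
        match = LINE_RE.match(raw.strip())
        if not match:
            continue  # blank lines and entries without a node id are skipped
        level, node, node_id, message = match.groups()
        if level == "ERROR" or any(key in message for key in KEYWORDS):
            events.append((level, node, node_id, message))
    return events


# A few lines taken from the log above, used as sample input.
SAMPLE = """\
DEBUG DB Insert 3:155 Acquiring connection.
DEBUG DB Insert 3:155 DB Insert 3:155 has new state: EXECUTING
DEBUG DB Insert 3:155 The small-scale transaction has been successfully committed.
DEBUG DB Insert 3:155 The connection has been relinquished.
DEBUG DB SQL Executor 3:145 Acquiring connection.
ERROR DB SQL Executor 3:145 Execute failed: ORA-00942: table or view does not exist
"""

if __name__ == "__main__":
    for level, node, node_id, message in summarize_log(SAMPLE):
        print(f"{level:5} {node} {node_id}: {message}")
```

Read in order, the filtered lines show DB Insert committing and relinquishing its connection before DB SQL Executor acquires one and fails. The log does not say whether both nodes end up on the same database session, so this is only a way to line the events up, not a diagnosis.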