hello

I tried to run a DeepSeek LLM on my local system, but the model I downloaded does not show up in the model list.

@arddashti You can run DeepSeek models locally with the help of Ollama or GPT4All.

From a terminal or Windows PowerShell, check whether Ollama is running at this address:

http://localhost:11434/

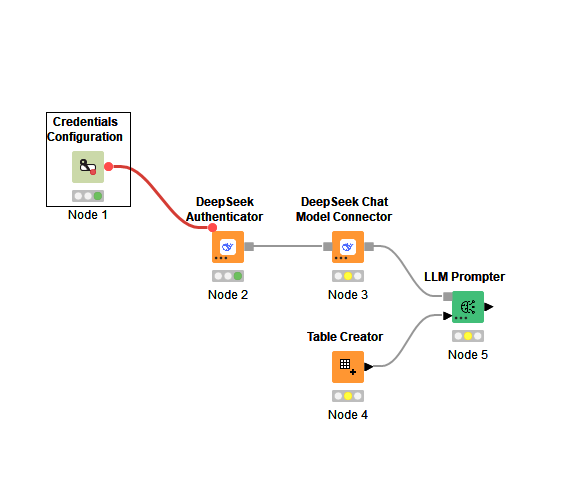

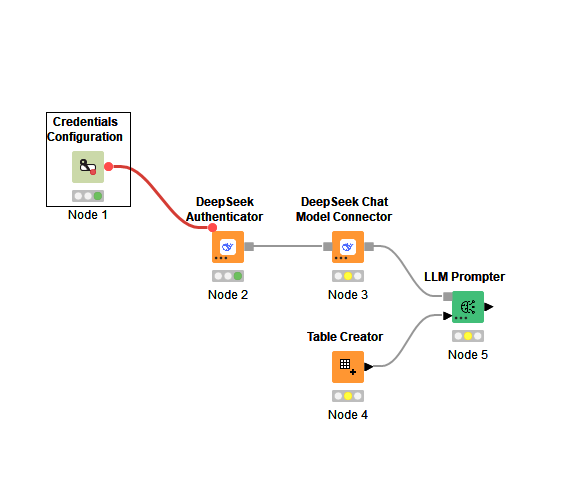
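If you would rather check from a script, here is a rough sketch of the same test in Python. It assumes Ollama is listening on its default address (the one above) and uses Ollama's `/api/tags` endpoint, which lists the models installed locally. The helper names (`model_names`, `has_deepseek`, `list_local_models`) are made up for this example and not part of any library.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama is listening on its default address, as in the link above.
BASE_URL = "http://localhost:11434"


def model_names(tags_payload):
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in tags_payload.get("models", [])]


def has_deepseek(names):
    """Return True if any installed model name mentions DeepSeek."""
    return any("deepseek" in n.lower() for n in names)


def list_local_models(base_url=BASE_URL, timeout=3):
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    try:
        names = list_local_models()
    except (urllib.error.URLError, OSError, ValueError) as exc:
        # Connection refused usually means the Ollama server is not running.
        print(f"Ollama does not seem to be reachable at {BASE_URL}: {exc}")
    else:
        print("Installed models:", names or "none")
        if not has_deepseek(names):
            print("No DeepSeek model found; it may still need to be pulled with Ollama.")
```

If the script reports that Ollama is unreachable, the server is probably not running. If it lists models but none of them is a DeepSeek model, the download likely did not go through Ollama, which may be why it is missing from the list.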

check here:
