Hi there,

I am considering deploying workflows as services and using them as API endpoints. The idea for now is to have a Lambda function forward a webhook as a POST request with a JSON payload; the workflow transforms the data, sends it to another API, and then returns that other API’s body.

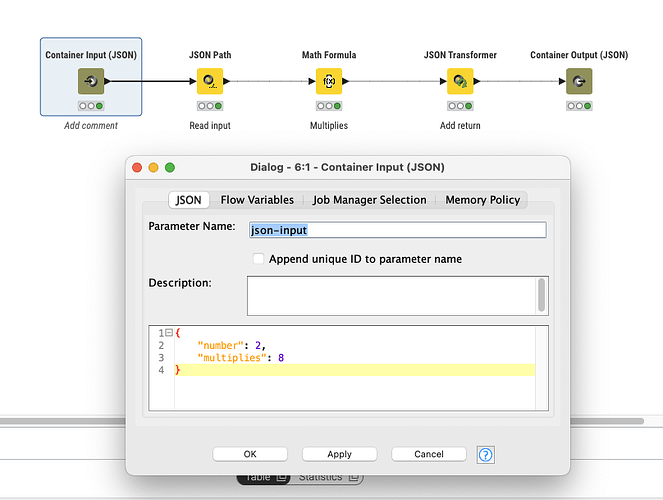
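To make the intended flow concrete, here is a rough sketch of what the Lambda side could look like in Python. The endpoint URL and the `build_request` helper are placeholders I made up for illustration, and authentication is left out entirely, so treat this as an outline rather than working integration code.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL of your own REST deployment.
WORKFLOW_URL = "https://hub.example.invalid/my-deployment/execution"


def build_request(payload: dict, url: str = WORKFLOW_URL) -> urllib.request.Request:
    """Build a POST request carrying the webhook payload as a JSON body.

    Authentication is omitted; add whatever headers your deployment requires.
    """
    body = json.dumps(payload).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def lambda_handler(event, context):
    """Lambda entry point: forward the event to the workflow and return its JSON reply."""
    request = build_request(event)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Building the request separately from sending it keeps the payload handling testable without a network call.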

To do this, I have created a very simple test workflow that takes a json with 2 numbers, multiplies them, and returns a json with 3 numbers:

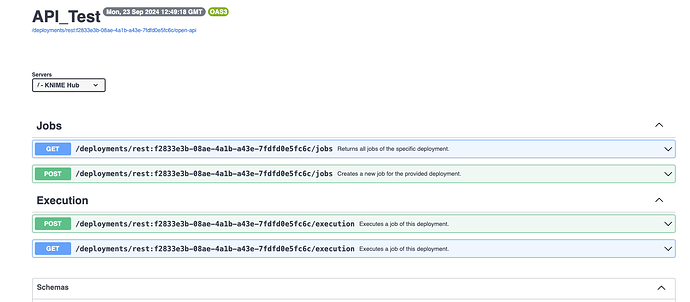
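In plain Python, the logic of this test workflow would be roughly the following. The function name `multiply` is only illustrative; the key names are the ones from my schema.

```python
def multiply(payload: dict) -> dict:
    """Mirror the test workflow: keep both inputs and add their product as "return"."""
    number = payload["number"]
    multiplies = payload["multiplies"]
    return {"number": number, "multiplies": multiplies, "return": number * multiplies}


print(multiply({"number": 2, "multiplies": 8}))
# prints {'number': 2, 'multiplies': 8, 'return': 16}
```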

As for the endpoints, the OpenAPI page only shows the /jobs and /execution endpoints.

That is fine, but I don’t feel like either of them does what I want. I actually fail to see the point of /jobs, as it just loads the job without running it. Is there something else to do to complete it?

/execution does run the workflow and sends a response, but with this endpoint I can’t save the jobs (I would like to at least be able to monitor failed jobs).

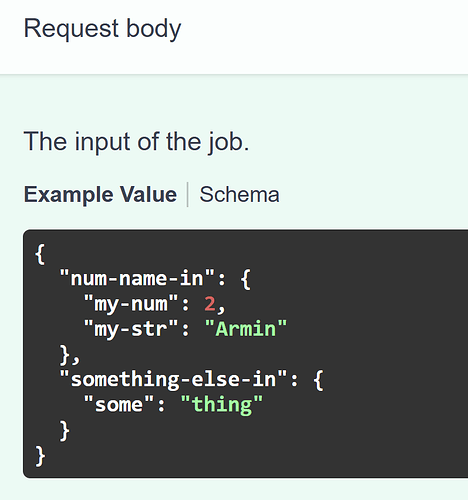

Then there is the question of the input and the output. For the input, I set up a simple schema in the Container Input, but it forces me to add the parameter name as a root key (json-input by default). I would like to send:

    {
        "number": 2,
        "multiplies": 8
    }

instead of:

    {
        "json-input": {
            "number": 2,
            "multiplies": 8
        }
    }

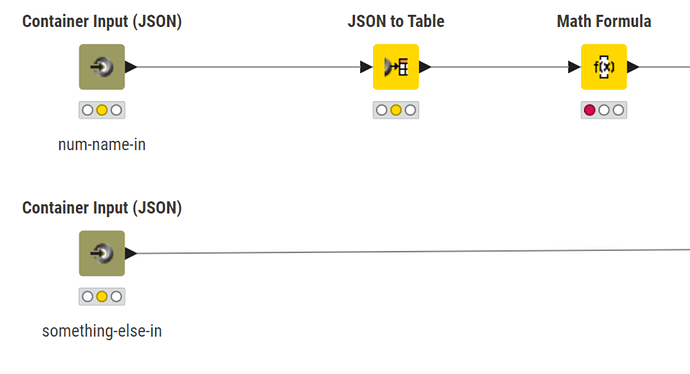

Is there a way to take a JSON as is, without a mandatory parameter name used as a root key?
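The only workaround I can think of, if the root key cannot be avoided, is to wrap the payload on the caller side before sending it. A minimal sketch, where `wrap_input` is a hypothetical helper and `json-input` is the default parameter name mentioned above:

```python
import json


def wrap_input(payload: dict, parameter_name: str = "json-input") -> dict:
    """Nest the plain payload under the parameter name the Container Input expects."""
    return {parameter_name: payload}


print(json.dumps(wrap_input({"number": 2, "multiplies": 8})))
# prints {"json-input": {"number": 2, "multiplies": 8}}
```

This keeps the external contract clean only if I control the caller, which is why I would still prefer the workflow to accept the plain JSON directly.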

Regarding the output, it is a bit of the same issue, but the response also contains much more data than I’d like. The configured return schema in the Container Output is:

    {
        "number": 2,
        "multiplies": 8,
        "return": 16
    }

However, what I get is the entire job status (which I could use for monitoring, but which is only useful when calling a workflow internally, not for an external user):

    {
        "id": "1defac00-6904-4b99-b714-af1c475d9982",
        "createdAt": "2024-09-23T15:07:08.043Z[GMT]",
        "scope": "account:team:*****",
        "name": "API_Test 2024-09-23 15.07.08",
        "creator": "account:user:*****",
        "creatorName": "****",
        "state": "EXECUTION_FINISHED",
        "isSwapped": false,
        "hasReport": false,
        "startedExecutionAt": "2024-09-23T15:07:08.333465Z[GMT]",
        "finishedExecutionAt": "2024-09-23T15:07:08.339456Z[GMT]",
        "deploymentId": "rest:f2833e3b-08ae-4a1b-a43e-7fdfd0e5fc6c",
        "workflow": "/****/API_Test",
        "workflowId": "*****",
        "spaceVersion": "2",
        "itemVersion": "2",
        "createdVia": "generic client",
        "executorID": "****",
        "executorName": "****",
        "executorIPs": [
            "****"
        ],
        "executionContext": "****",
        "discardAfterSuccessfulExec": false,
        "discardAfterFailedExec": false,
        "configuration": {},
        "actions": [],
        "inputParameters": {
            "json-input": {
                "number": 54,
                "multiplies": 4
            }
        },
        "outputValues": {
            "json-output": {
                "number": 54,
                "multiplies": 4,
                "return": 216
            }
        }
    }

Here I would just like the content of outputValues, without any key other than those in the defined schema:

    {
        "number": 54,
        "multiplies": 4,
        "return": 216
    }
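For now I could strip the response down on the caller side, something like the sketch below. `extract_output` is a hypothetical helper; the `state`, `outputValues` and `json-output` keys are taken from the response above, and treating every state other than `EXECUTION_FINISHED` as a failure is my own assumption.

```python
def extract_output(job: dict, parameter_name: str = "json-output") -> dict:
    """Return only the workflow output from a full job-status response."""
    state = job.get("state")
    # Assumption: anything other than EXECUTION_FINISHED counts as a failed run.
    if state != "EXECUTION_FINISHED":
        raise RuntimeError(f"workflow job {job.get('id')} ended in state {state}")
    return job["outputValues"][parameter_name]


job_status = {
    "id": "1defac00-6904-4b99-b714-af1c475d9982",
    "state": "EXECUTION_FINISHED",
    "outputValues": {"json-output": {"number": 54, "multiplies": 4, "return": 216}},
}
print(extract_output(job_status))
# prints {'number': 54, 'multiplies': 4, 'return': 216}
```

That still means an external user would need an extra layer in front of the deployment, which is what I am trying to avoid.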

I’m a bit lost here. In its current state I can’t make any use of the REST deployment, which would be a shame.

Thanks!