Hi,

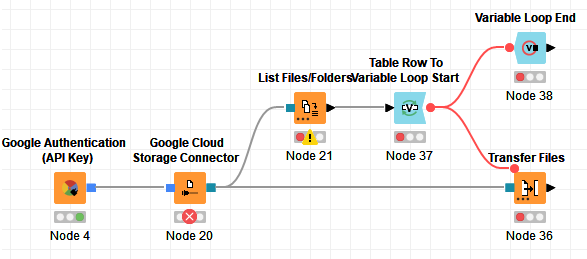

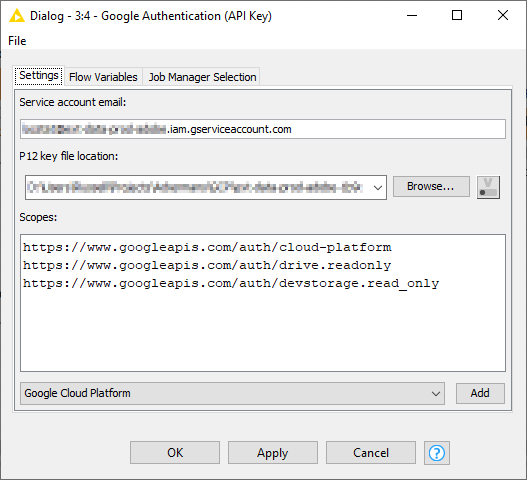

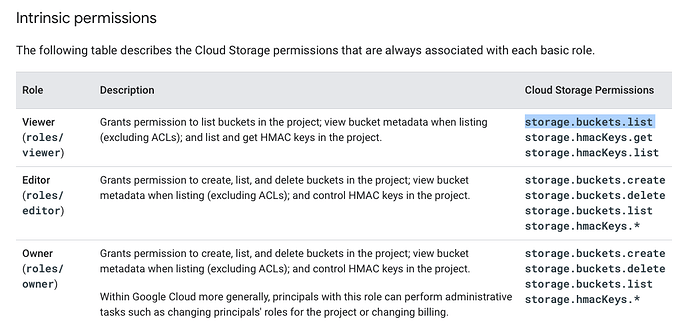

I have been trying to download files from a client’s Google Cloud Storage bucket for a while, but it keeps giving me a permission error. Using the same credentials, I can download files with a Python script. Downloading one file at a time is not viable when there are over 200 files and 200 more arrive every week.

Is it possible to use the same Python script (copied directly from the Google documentation and included below), or a similar one, in KNIME to loop through the list of files and download them to a local destination folder?

    import os

    from google.cloud import storage

    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = r"D:\Users\<google_service_account.json>"


    def download_blob(bucket_name, source_blob_name, destination_file_name):
        """Downloads a blob from the bucket."""
        # The ID of your GCS bucket
        # bucket_name = "your-bucket-name"

        # The ID of your GCS object
        # source_blob_name = "storage-object-name"

        # The path to which the file should be downloaded
        # destination_file_name = "local/path/to/file"

        storage_client = storage.Client()

        bucket = storage_client.bucket(bucket_name)

        # Construct a client side representation of a blob.
        # Note `Bucket.blob` differs from `Bucket.get_blob` as it doesn't retrieve
        # any content from Google Cloud Storage. As we don't need additional data,
        # using `Bucket.blob` is preferred here.
        blob = bucket.blob(source_blob_name)
        blob.download_to_filename(destination_file_name)


    download_blob("project16","sensorlog/log20220301","d:/sensorlog/log20220301.csv")

I have a CSV file with columns that list the bucket, source blob, and destination file name.

    bucket_name,source_blob_name,destination_file_name
    project136,sensorlog/log20220301,d:/sensorlog/log20220301.csv
    project136,sensorlog/log20220302,d:/sensorlog/log20220302.csv
    project136,sensorlog/log20220303,d:/sensorlog/log20220303.csv
    project136,sensorlog/log20220304,d:/sensorlog/log20220304.csv
    project136,sensorlog/log20220305,d:/sensorlog/log20220305.csv
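For illustration, the loop I have in mind might look roughly like the sketch below. It is only an outline under some assumptions: the CSV has one header row, and each data row holds the bucket, the blob, and the destination path in that order (as in the sample rows). The names `read_manifest` and `download_all` are made up for this example, and the download function is passed in as a parameter so that `download_blob` from the script above can be plugged in unchanged.

```python
import csv
import os


def read_manifest(csv_path):
    """Return (bucket, blob, destination) tuples from the CSV, skipping the header.

    Assumption: columns are ordered bucket, blob, destination, as in the sample rows.
    """
    with open(csv_path, newline="", encoding="utf-8") as handle:
        reader = csv.reader(handle)
        next(reader, None)  # skip the header row
        # Ignore blank or incomplete rows; use only the first three cells.
        return [
            tuple(cell.strip() for cell in row[:3])
            for row in reader
            if len(row) >= 3
        ]


def download_all(rows, download_fn):
    """Call download_fn(bucket, blob, destination) for each row; return failures."""
    failures = []
    for bucket_name, source_blob_name, destination_file_name in rows:
        # Create the local destination folder if it does not exist yet.
        folder = os.path.dirname(destination_file_name)
        if folder:
            os.makedirs(folder, exist_ok=True)
        try:
            download_fn(bucket_name, source_blob_name, destination_file_name)
        except Exception as error:
            # Record the problem and continue, so one bad file does not stop the batch.
            failures.append((source_blob_name, str(error)))
    return failures
```

With `download_blob` defined as above, the call would be something like `failures = download_all(read_manifest("files.csv"), download_blob)`, where `files.csv` is a placeholder for the actual CSV path. Any entries in `failures` could then be retried or logged.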

I would appreciate any suggestions.

Thanks,

tC/.