Hi guys,

I have tried so many things and still have no luck…

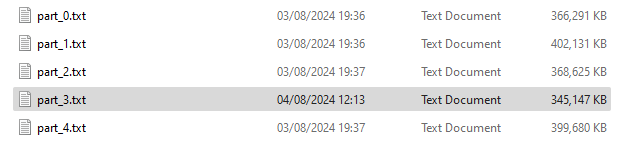

I have a huge file, so I have split it into 201 smaller files.
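The post doesn't say how the split was done. For reference, here is a minimal Python sketch that splits on line boundaries into parts of 500,000 lines. The function name `split_file` and the output names (`part_1.txt`, `part_2.txt`, …) are hypothetical, chosen only to match the part numbering used here.

```python
import itertools
from pathlib import Path


def split_file(src, out_dir, lines_per_part=500_000, prefix="part_", suffix=".txt"):
    """Split a text file into numbered parts of at most `lines_per_part` lines.

    The file is read in binary mode, so no decoding or newline translation
    happens and every part ends on a line boundary. Parts are numbered from 1.
    Returns the list of written paths.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    with open(src, "rb") as f:
        for n in itertools.count(1):
            # islice pulls the next batch of lines without loading the whole file
            chunk = list(itertools.islice(f, lines_per_part))
            if not chunk:
                break
            path = out_dir / f"{prefix}{n}{suffix}"
            with open(path, "wb") as out:
                out.writelines(chunk)
            written.append(path)
    return written
```

For example, `split_file("big.tsv", "parts")` would write `parts/part_1.txt`, `parts/part_2.txt` and so on. Both names are placeholders.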

I created a loop to process it in smaller chunks, but I have now found that the problem isn't there (unless the workflow is caching data from old iterations?).

it will process part_2 no problem at all.

but gives the error

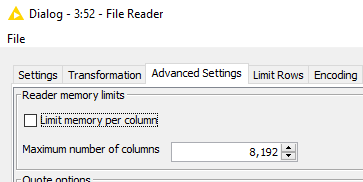

Execute failed: Memory limit per column exceeded. Please adapt the according setting.

on part_3 everytime.

Ironically, part_2 is bigger on disk than part_3.

They all have 500,000 lines (except file 200, which has around 30k lines).
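Since part_2 is bigger but part_3 is the one that fails, size on disk may not be the thing to compare. One assumption worth ruling out (the post doesn't confirm it): if the reader treats `"` as a quote character, a single unclosed quote can make it keep reading across line breaks into one very large cell. The plain-Python sketch below, independent of the workflow, reports line count, size, the longest line, and lines with an odd number of double quotes for one file. The function name `file_stats` is hypothetical.

```python
from pathlib import Path


def file_stats(path, quote=b'"', keep=20):
    """Summarise one part file: line count, size on disk, longest line,
    and lines containing an odd number of quote characters.
    """
    lines = 0
    longest = 0
    longest_no = 0
    odd_count = 0
    odd_first = []  # only the first `keep` line numbers are stored
    with open(path, "rb") as f:
        for lineno, line in enumerate(f, start=1):
            lines = lineno
            # length in bytes, including the trailing newline
            if len(line) > longest:
                longest, longest_no = len(line), lineno
            if line.count(quote) % 2 == 1:
                odd_count += 1
                if len(odd_first) < keep:
                    odd_first.append(lineno)
    return {
        "lines": lines,
        "bytes": Path(path).stat().st_size,
        "longest_line_bytes": longest,
        "longest_line_no": longest_no,
        "odd_quote_lines": odd_count,
        "first_odd_quote_line_nos": odd_first,
    }
```

Running `file_stats` on part_2 and part_3 side by side might show whether part_3 has an unusually long line or stray quotes. An odd quote count isn't automatically an error (for example, `"` used as an inch mark), but those line numbers would be the first ones to inspect.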

I have increased my heap setting to `-Xmx26340m` (it was `-Xmx16340m`).

I also removed the loop and processed just part_3 with a hard-coded path: it still failed, while part_2 works every time.

Everything uses the “File Reader”, and I am not splitting the lines into columns yet (the data is tab-separated, but I am happy to get the whole line in one field for now).
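Because the data is tab-separated, another cross-check outside the workflow is to parse part_3 with quote handling switched off and count fields per row. Rows with an unexpected field count point at embedded tabs or broken lines. This is a Python sketch only, not what the “File Reader” does internally. The UTF-8 encoding and the function name `field_count_histogram` are assumptions.

```python
import csv
from collections import Counter


def field_count_histogram(path, encoding="utf-8"):
    """Return a Counter mapping 'number of tab-separated fields' -> 'rows'.

    quoting=csv.QUOTE_NONE treats '"' as ordinary text, so a stray quote
    cannot open a field that spans several lines.
    """
    counts = Counter()
    # errors="replace" keeps going past bytes that are not valid in `encoding`
    with open(path, "r", encoding=encoding, errors="replace", newline="") as f:
        reader = csv.reader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
        # A single field longer than csv.field_size_limit()
        # (131072 characters by default) raises csv.Error, which is itself a hint.
        for row in reader:
            counts[len(row)] += 1
    return counts
```

If part_2 shows one dominant field count and part_3 shows a few odd ones, those rows are the likely troublemakers.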

I have tried keeping the data both in memory and on disk.

Is there anything else you guys can advise for handling moderately large files?