@tobias.koetter, thank you for your response.

I am typing up this post (a) because I need to write it down to rationalise things in my own head, and (b) because it may be useful information to others.

What you are describing reflects the difference in the way that Linux and Windows create new processes. This difference is well documented, but I will summarise it below and add commentary specific to KNIME where appropriate.

The common approach to creating a new process on Linux is to fork the existing one. The original (parent) process carries on using the variables and memory it was allocated, and the new (child) process starts out using the same variables and memory. Nothing is copied up front, so forking a new process is quick. However, the two processes cannot both keep writing to the same memory without causing problems, so the operating system uses copy-on-write: whenever one of the processes writes to a block of memory to change a variable, that block is copied. The process that did not change the memory keeps the original block, and the process that changed it gets a new block containing the changed data. So whilst forking is quick to start, there are performance penalties whenever either process writes to memory.

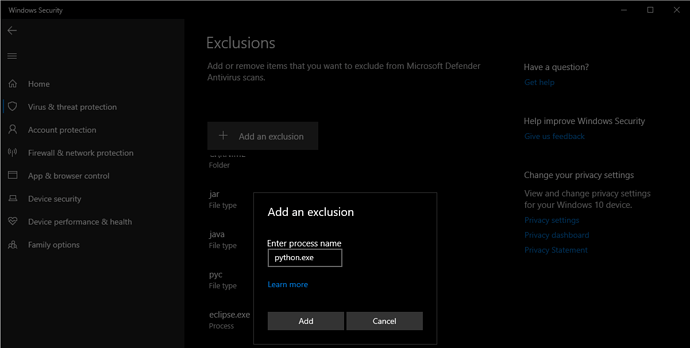
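To make the copy-on-write behaviour concrete, here is a minimal sketch using Python's `os.fork`. It assumes a POSIX system (it will not run on Windows, which has no `fork`), and the names `data` and `fork_and_modify` are hypothetical. The child inherits `data` without reloading anything, changes its own copy, and reports the new value back through a pipe; the parent's copy is left untouched.

```python
import os

# State built up in the parent before forking (hypothetical example data).
data = {"counter": 0}


def fork_and_modify() -> tuple[int, int]:
    """Fork; the child increments its copy of `data` and reports it via a pipe.

    Returns (value seen in the child, value in the parent afterwards).
    """
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: starts with the parent's memory image, shared copy-on-write.
        try:
            os.close(read_fd)
            data["counter"] += 1  # this write gives the child its own copy of the page
            os.write(write_fd, str(data["counter"]).encode())
            os.close(write_fd)
        finally:
            os._exit(0)  # leave immediately; never fall back into the parent's code
    # Parent: close its copy of the write end so read() sees EOF when the child exits.
    os.close(write_fd)
    with os.fdopen(read_fd) as reader:
        child_value = int(reader.read())
    os.waitpid(pid, 0)
    return child_value, data["counter"]


if __name__ == "__main__":
    print(fork_and_modify())  # (1, 0): the child's write did not affect the parent
```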

Windows takes a different approach. Whenever a new process is created, Windows creates a fresh environment into which everything must be loaded: in this case, the Python interpreter, the Python code and all of the dependent libraries. Once created, the process runs independently of all other processes. So whereas a forked Linux process carries on using the copies of the Python interpreter, code and libraries that are already in memory, Windows has to reload everything as if the code were being run for the first time. This is why you are seeing the compiled Python files and libraries (.pyc, .pyd, .dll) being touched (but not necessarily scanned) by Windows Defender. It is a consequence of how each platform creates new processes.
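Python's `multiprocessing` module calls this the "spawn" start method, and it is the default on Windows. Rather than reproduce `multiprocessing` internals, the sketch below simply launches a brand-new interpreter with `subprocess` and asks whether a module that the parent has already imported is loaded there. The helper name `loaded_in_fresh_interpreter` and the use of `fractions` as a stand-in for "the dependent libraries" are illustrative assumptions.

```python
import subprocess
import sys
import fractions  # imported by this (parent) interpreter; stands in for a dependency


def loaded_in_fresh_interpreter(module_name: str) -> bool:
    """Start a brand-new Python interpreter and report whether `module_name`
    is already in its sys.modules before any user code imports it."""
    code = f"import sys; print({module_name!r} in sys.modules)"
    # -I: isolated mode (ignore environment variables and user site-packages);
    # -S: skip the `site` module, so only the bare start-up modules are loaded.
    result = subprocess.run(
        [sys.executable, "-I", "-S", "-c", code],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip() == "True"


if __name__ == "__main__":
    print("fractions" in sys.modules)                # True in the parent
    print(loaded_in_fresh_interpreter("fractions"))  # False: the new process starts from scratch
```

Anything a spawned worker needs has to be imported again in that worker, which is where the repeated file access comes from.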

There is much heated discussion about which approach is better, and I will not get into that debate here. What matters is that implementing multi-tasking with Python in a cross-platform environment needs careful thought and planning.

There are two approaches that can be used:

- The first applies where tasks are IO-bound (e.g. on a web server): each task spends a lot of time waiting for data and needs little CPU time to process it. This is handled efficiently by Python's standard-library package asyncio. It is not relevant to KNIME, which in most cases tends to be CPU-bound rather than IO-bound.

- The second option is to create an internal server to handle tasks. For the geospatial extension, that would be a task runner with a work queue. The geospatial nodes would submit jobs to the work queue, and the server would execute them: it would have multiple worker processes that pull work from the queue and either update tables directly or return data to the client node. In this case, the server creates its processes when it starts and only terminates them when all of the jobs have been completed or the server shuts down.
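For the IO-bound case in the first option, a minimal sketch assuming the "IO" is simulated with `asyncio.sleep` (the names `fetch`, `fetch_all` and `run_demo` are hypothetical). Because each task spends its time waiting rather than computing, five 0.2-second waits overlap on a single thread and finish in roughly 0.2 seconds instead of 1 second. The same trick gives no speed-up for CPU-bound work, since only one coroutine runs at a time.

```python
import asyncio
import time


async def fetch(item: int, delay: float) -> int:
    """Stand-in for an IO-bound call (e.g. a network request): mostly waiting."""
    await asyncio.sleep(delay)  # yields to the event loop while "waiting for data"
    return item * 2  # trivial CPU work once the data has arrived


async def fetch_all(n: int, delay: float) -> list[int]:
    # Run all n waits concurrently on one thread; results keep the input order.
    return await asyncio.gather(*(fetch(i, delay) for i in range(n)))


def run_demo(n: int = 5, delay: float = 0.2) -> tuple[list[int], float]:
    start = time.perf_counter()
    results = asyncio.run(fetch_all(n, delay))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = run_demo()
    print(results, f"{elapsed:.2f}s")
```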

What you are doing with KNIME/Python is superficially the most straightforward approach, but not necessarily the most performant.
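For comparison, the internal-server option from the list above might look roughly like the sketch below: a fixed set of worker processes is started once, pulls jobs from a shared queue, and is stopped with one sentinel per worker. Everything here (`worker_loop`, `run_server`, `run_inline`, `square`, `SENTINEL`) is a hypothetical illustration, not the geospatial extension's actual design, and it leaves out error handling and any KNIME integration.

```python
import multiprocessing as mp
import queue
from typing import Any, Callable

SENTINEL = None  # hypothetical "no more work" marker


def worker_loop(jobs: Any, results: Any, func: Callable[[Any], Any]) -> None:
    """Pull (job_id, payload) pairs until the sentinel arrives; push (job_id, result).

    Works with multiprocessing.Queue or queue.Queue, since both offer get/put.
    """
    while True:
        item = jobs.get()
        if item is SENTINEL:
            break
        job_id, payload = item
        results.put((job_id, func(payload)))


def square(x: int) -> int:
    """Hypothetical CPU-bound task; module-level so it can be pickled for spawn."""
    return x * x


def run_server(payloads: list, func: Callable[[Any], Any] = square, n_workers: int = 2) -> list:
    """Start n_workers processes once, feed them every job, then shut them down."""
    jobs, results = mp.Queue(), mp.Queue()
    workers = [mp.Process(target=worker_loop, args=(jobs, results, func)) for _ in range(n_workers)]
    for w in workers:
        w.start()  # processes are created once, when the "server" starts
    for job_id, payload in enumerate(payloads):
        jobs.put((job_id, payload))
    for _ in workers:
        jobs.put(SENTINEL)  # one stop marker per worker, queued after all jobs
    # Collect before join(): a worker with unflushed queue data may not exit.
    collected = dict(results.get() for _ in payloads)
    for w in workers:
        w.join()
    return [collected[i] for i in range(len(payloads))]


def run_inline(payloads: list, func: Callable[[Any], Any] = square) -> list:
    """Same protocol in a single process with queue.Queue; handy for debugging."""
    jobs, results = queue.Queue(), queue.Queue()
    for job_id, payload in enumerate(payloads):
        jobs.put((job_id, payload))
    jobs.put(SENTINEL)
    worker_loop(jobs, results, func)
    collected = dict(results.get() for _ in payloads)
    return [collected[i] for i in range(len(payloads))]
```

Wherever the spawn start method is used (including Windows), `run_server` should only be called from under an `if __name__ == "__main__":` guard, because each worker re-imports the main module; for example, `print(run_server([1, 2, 3]))` there should print `[1, 4, 9]`. The cost of starting the workers is paid once per server rather than once per task.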

DiaAzul
