Hello,

I’m trying to upload a table with 7000+ rows to a Google BigQuery table. When I do, I get the following error:

Message: Exceeded rate limits: too many table update operations for this table.

When I upload just 3 rows per batch and wait a few seconds between batches, it works, but it takes forever.

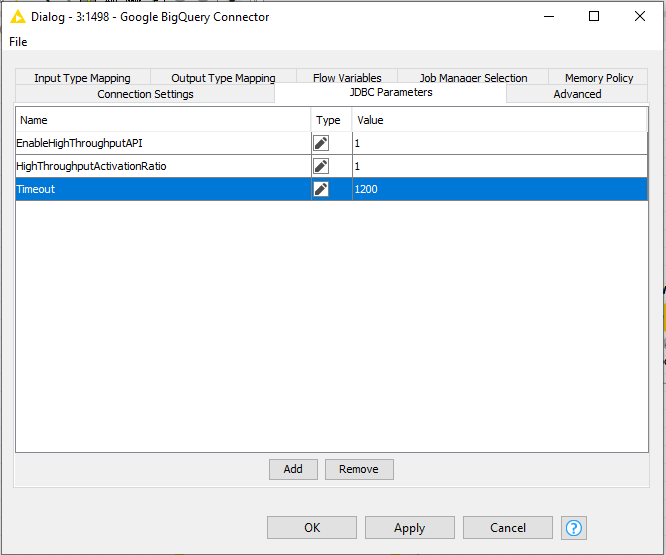

In the Simba documentation I found the parameters EnableHighThroughputAPI and Timeout, which I entered in the JDBC parameters tab of the Google BigQuery Connector node like this:

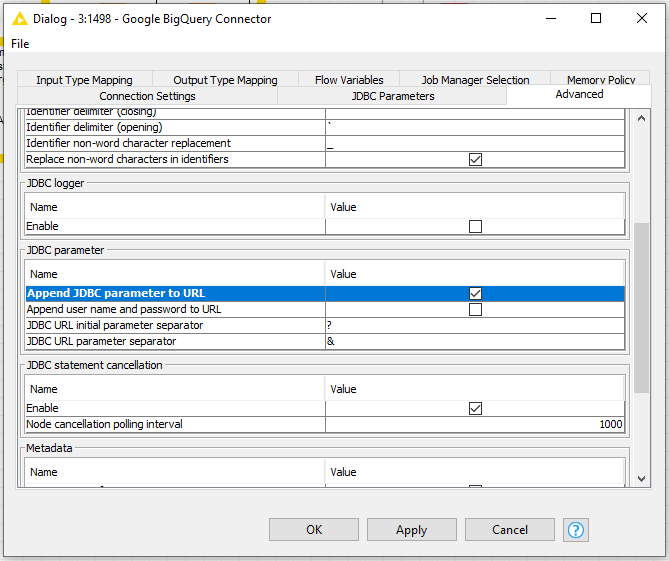

But the settings don’t seem to have any effect. When I check the option “Append JDBC parameters to URL”, I get the error message: “Execute failed: [Simba]BigQueryJDBCDriver Service account email or private key file path not specified.” although everything is set in the Google Authentication (API key) node.

What am I doing wrong? Has anybody solved this successfully?

Many thanks and best regards,

Andy

Hi @tobias.koetter, sorry to bother you with this, but you helped me some time ago at a KNIME summit with setting up a GBQ connection. Do you have a clue what might need to be changed in the configuration to get this to work?

Hi aherzberg,

you don’t need to tick the “Append JDBC parameters to URL” option, since the Simba driver also accepts parameters via the standard properties object. When the parameters are appended to the URL, the driver seems to have problems with the authentication parameters. Also, as far as I understand the description of the parameters EnableHighThroughputAPI and HighThroughputActivationRatio, they only improve query performance, since the driver then uses the Storage API for result sets.

In general, Google BigQuery has a lot of restrictions on DML (insert, update, delete) statements. For example, standard tables only allow 1000 DML operations per day.
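To make the limit concrete, here is a rough back-of-the-envelope sketch. It assumes that every small batch counts as one DML operation against the table, which is a simplification, and it uses the row count and batch size from the question together with the 1000-per-day figure quoted above. The function name `batches_needed` is just an illustrative helper.

```python
import math

TOTAL_ROWS = 7000        # from the question ("7000+ rows")
ROWS_PER_BATCH = 3       # the batch size that worked, but slowly
DAILY_DML_LIMIT = 1000   # figure quoted above; actual quotas may differ


def batches_needed(total_rows: int, rows_per_batch: int) -> int:
    """Number of batches required to upload all rows."""
    return math.ceil(total_rows / rows_per_batch)


# Assumption: each batch is counted as one DML operation on the table.
operations = batches_needed(TOTAL_ROWS, ROWS_PER_BATCH)
print("batches needed:", operations)
print("exceeds daily limit:", operations > DAILY_DML_LIMIT)
```

Under these assumptions, inserting in tiny batches needs far more operations than the quoted daily allowance, which is why a bulk load is the better fit here.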

The only advice I can give you right now is to use the DB Loader node in KNIME Analytics Platform to upload mass data, since it uses another API. However, it only supports loading data, not updating it. So please contact Google BigQuery support; maybe there is a way to increase the standard limits.
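For readers outside KNIME, the same idea (one bulk load instead of many small inserts) might look roughly like the sketch below. It assumes the `google-cloud-bigquery` Python client is installed and that default credentials are configured. The helper names `rows_to_csv_bytes` and `load_rows` and the table ID in the usage comment are hypothetical, and this is not necessarily how the DB Loader node works internally.

```python
import csv
import io


def rows_to_csv_bytes(header, rows):
    """Serialize a header and rows into one in-memory CSV payload."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue().encode("utf-8")


def load_rows(table_id, header, rows):
    """Append all rows to the table with a single load job."""
    # Imported here so the CSV helper works without the client library.
    from google.cloud import bigquery

    client = bigquery.Client()  # assumes default credentials are set up
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        skip_leading_rows=1,  # the first CSV line is the header
        write_disposition=bigquery.WriteDisposition.WRITE_APPEND,
    )
    payload = io.BytesIO(rows_to_csv_bytes(header, rows))
    job = client.load_table_from_file(payload, table_id, job_config=job_config)
    job.result()  # block until the load job has finished
    return job.output_rows


# Hypothetical usage:
# load_rows("my-project.my_dataset.my_table", ["id", "name"], [(1, "a"), (2, "b")])
```

A load job like this only appends data, so it matches the restriction mentioned above: it does not cover updates.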

Bye

Tobias


Hey @tobias.koetter,

You are my personal champion!!!

The solution is to use the “Database Loader” node for large files.

You made my day! Thanks for your quick reply!

Andy
