Hi all!

I need advice on setting up a cluster and a server for it.

I read the instructions (https://docs.knime.com/2018-12/bigdata_spark_installation_guide/bigdata_spark_installation_guide.pdf), but I still don’t really understand how everything fits together.

I would like to use the KNIME Extension for Apache Spark to connect to our own Spark cluster. What do I need to do to set up an Apache Livy service?

Hi @greatvarona ,

You can try Cloudera, which already includes HDFS, Spark and Livy, but requires a license after the trial period: CDP Private Cloud - Trial Product Download | Cloudera

If you would rather install everything yourself, here is a getting started guide: Livy - Getting Started

I’m not sure whether there are other open-source distributions.
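Once Livy is running, a quick smoke test before involving KNIME is to query its REST API directly. The sketch below assumes Livy listens on its default port 8998 on a host called `livy-host` (a placeholder) and uses the documented `GET /sessions` endpoint, whose JSON response holds a `sessions` array; the helper names are made up for illustration.

```python
import json
import urllib.request


def sessions_request(base_url: str) -> urllib.request.Request:
    """Build (but do not send) a GET /sessions request for the Livy REST API."""
    return urllib.request.Request(base_url.rstrip("/") + "/sessions", method="GET")


def summarize_sessions(body: str) -> list:
    """Extract (id, state) pairs from a GET /sessions JSON response body."""
    payload = json.loads(body)
    return [(s["id"], s["state"]) for s in payload.get("sessions", [])]


def check_livy(base_url: str) -> list:
    """Send the request; raises urllib.error.URLError if Livy is unreachable."""
    with urllib.request.urlopen(sessions_request(base_url), timeout=10) as resp:
        return summarize_sessions(resp.read().decode("utf-8"))
```

Calling `check_livy("http://livy-host:8998")` on a freshly started server should return an empty list; a connection error instead points at the Livy service or a firewall rather than at KNIME.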

Cheers,

Sascha

Hi @sascha.wolke ,

We installed Hadoop, Spark and Livy on the server. What’s next?

How can I connect to this server using the “Create Databricks Environment” node?

Hi @greatvarona ,

You can use either your own cluster with Hadoop/Spark/Livy or a Databricks cluster, which provides everything you need. Depending on which one you use, you need the Create Spark Context (Livy) node or the Create Databricks Environment node.
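With your own Hadoop/Spark/Livy installation, the Create Spark Context (Livy) node talks to Livy over HTTP, so it can help to first confirm that Livy can actually launch a Spark session on the cluster. The sketch below builds a `POST /sessions` request with `{"kind": "spark"}` and a `GET /sessions/{id}` status request from the Livy REST API; the host name and helper names are placeholders, and this is a manual check, not a description of what the KNIME node sends internally.

```python
import json
import urllib.request


def create_session_request(base_url: str, kind: str = "spark") -> urllib.request.Request:
    """Build a POST /sessions request asking Livy to start a new interactive session."""
    body = json.dumps({"kind": kind}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/sessions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def session_state_request(base_url: str, session_id: int) -> urllib.request.Request:
    """Build a GET /sessions/{id} request to poll a session's state."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/sessions/{session_id}", method="GET"
    )


def send(req: urllib.request.Request) -> dict:
    """Send a request and decode the JSON reply (performs network I/O)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `send(create_session_request("http://livy-host:8998"))` returns the new session's `id`; polling `send(session_state_request(..., id))` should eventually report the state `idle`. If it ends in `dead` instead, the Livy logs usually explain why Spark failed to start.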

Cheers,

@sascha.wolke Hello!

Can you help with this?

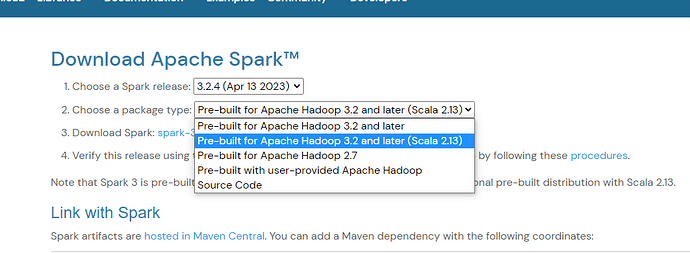

We are trying to install Spark - Pre-built for Apache Hadoop 3.2 and later (with Scala 2.13)

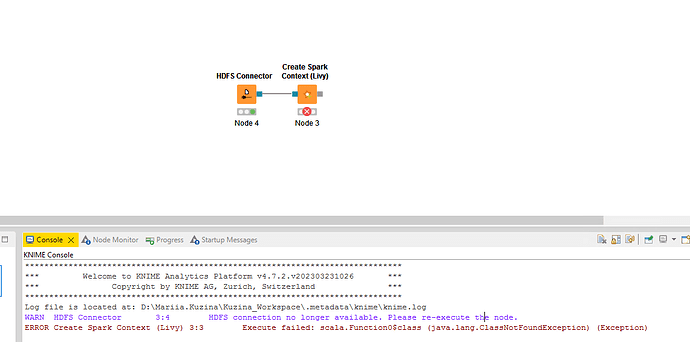

But we got an error:

We also tried installing Spark - Pre-built for Apache Hadoop 3.2 and later, but got a different error:

What should we do to get the cluster working correctly?

Hi @greatvarona ,

Can you try the version with Scala 2.12? (In your screenshot, that’s the one at the top, without a Scala version in its name.)

Cheers

@sascha.wolke

Hi @greatvarona ,

On the Apache Spark download page, select Spark release 3.2.4 and, as the package type, the first option (Pre-built for Apache Hadoop 3.2 and later).
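A likely reason the Scala 2.13 package misbehaves is that Spark and Livy must be built for the same Scala binary version, and Livy’s Spark 3 build targets Scala 2.12. The sketch below checks this from either the downloaded package name or the `Using Scala version ...` line printed by `spark-submit --version`. It assumes the Spark 3.2.x naming convention, where the default package (e.g. `spark-3.2.4-bin-hadoop3.2.tgz`) is Scala 2.12 and the alternative carries a `-scala2.13` suffix; the function names are hypothetical.

```python
import re
from typing import Optional

# Assumption: Spark 3.2.x packages without a "-scalaX.Y" suffix are built with Scala 2.12.
DEFAULT_SCALA = "2.12"


def scala_version_of(package_name: str) -> str:
    """Infer the Scala binary version from a Spark download file name."""
    m = re.search(r"-scala(\d+\.\d+)", package_name)
    return m.group(1) if m else DEFAULT_SCALA


def scala_version_from_banner(banner: str) -> Optional[str]:
    """Extract e.g. '2.12' from the 'Using Scala version 2.12.15, ...' line, if present."""
    m = re.search(r"Using Scala version (\d+\.\d+)", banner)
    return m.group(1) if m else None


def matches_livy_build(scala_version: Optional[str], livy_scala: str = "2.12") -> bool:
    """True if Spark's Scala version matches the one Livy was compiled for."""
    return scala_version == livy_scala
```

For instance, `matches_livy_build(scala_version_of("spark-3.2.4-bin-hadoop3.2.tgz"))` is `True`, while the `-scala2.13` package yields `False`.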

I’m not sure about Livy; you might need to compile the current master branch with the -Pspark3 and -Pscala-2.12 flags: GitHub - apache/incubator-livy: Apache Livy is an open source REST interface for interacting with Apache Spark from anywhere.
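Building Livy from source is a Maven build with those two profiles activated. The sketch below only assembles and runs the command from a checked-out `incubator-livy` directory; the `-Pspark3` and `-Pscala-2.12` profiles come from the post above, while `clean package` and `-DskipTests` are ordinary Maven options added here as an assumption to keep the build short. The helper names are hypothetical.

```python
import shlex
import subprocess


def livy_build_command(spark_profile: str = "spark3",
                       scala_profile: str = "scala-2.12",
                       skip_tests: bool = True) -> list:
    """Assemble the Maven argument list; each -P flag activates one build profile."""
    cmd = ["mvn", "clean", "package", f"-P{spark_profile}", f"-P{scala_profile}"]
    if skip_tests:
        cmd.append("-DskipTests")  # standard Maven property: skip unit test execution
    return cmd


def livy_build_command_line(**kwargs) -> str:
    """Render the command as a copy-pasteable shell string."""
    return shlex.join(livy_build_command(**kwargs))


def build_livy(source_dir: str) -> None:
    """Run the build in the Livy checkout; raises CalledProcessError on failure."""
    subprocess.run(livy_build_command(), cwd=source_dir, check=True)
```

Printing `livy_build_command_line()` first is a cheap way to review the exact flags before starting a long build with `build_livy("/path/to/incubator-livy")`.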

Cheers,

system

September 24, 2023, 7:38am

This topic was automatically closed 90 days after the last reply. New replies are no longer allowed.