I configured an LLM with the “HF Hub LLM Connector” node. I created an access token on Hugging Face, and at first it worked well. But since last week, the node says “please provide a valid repo ID” and never executes. I used “mistralai/Mistral-7B-Instruct-v0.2”, and I also tried other models, but I couldn’t find any repo ID that the HF Hub LLM Connector accepts. What can I do?

Hey @ParkJunYong,

Welcome to the community!

It sounds like the node worked at first and only stopped working after about a week, so it may be an issue with the access token. Have you tried creating a new token to see if that works?
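If you want to rule out the token outside of KNIME, here is a minimal Python sketch that asks the Hub who the token belongs to. It is not part of the KNIME nodes; it assumes the Hub's `whoami-v2` endpoint (the one the `huggingface_hub` library uses), and `check_token` / `build_whoami_request` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: the Hub's whoami endpoint; not something the KNIME node exposes.
WHOAMI_URL = "https://huggingface.co/api/whoami-v2"


def build_whoami_request(token: str) -> urllib.request.Request:
    """Build an authenticated GET request for the whoami endpoint."""
    return urllib.request.Request(
        WHOAMI_URL,
        headers={"Authorization": f"Bearer {token}"},
    )


def check_token(token: str) -> dict:
    """Return the account info for a valid token.

    Raises urllib.error.HTTPError (typically 401) if the token is invalid or revoked.
    """
    with urllib.request.urlopen(build_whoami_request(token), timeout=10) as resp:
        return json.load(resp)
```

For example, `check_token("hf_...")` with your real token should return a dict that includes your account name; a 401 error points to a token problem rather than a node problem.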

If you have a sample workflow that reproduces the issue, it would be great if you could post it here!

TL

Yes, I recreated the token several times and also created a new ID, but it didn’t work. So I got a Pro account from Hugging Face, but I got an error again: “Execute failed: Error raised by inference API: Cannot override task for LLM models”

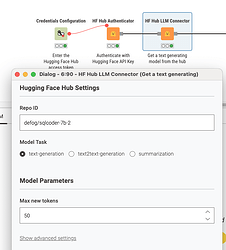

I just want to use “mistralai/Mistral-7B-Instruct-v0.2” or “defog/sqlcoder-7b-2”, but I can’t get either of them to work. Is there a solution to this problem? Or do I have to configure something on Hugging Face (like launching an inference endpoint…)?

The mistralai model is gated (access has to be requested on the model page before a token can use it), so that may be what is causing the node to error out.
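To check whether a model is gated before trying it in the connector, a small Python sketch against the Hub's public model-info endpoint could look like this. As far as I know, the Hub reports `gated` as `False` for open models and as `"auto"` or `"manual"` for gated ones; the URL pattern and the helper names here are assumptions for illustration, not anything the KNIME node uses.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Assumption: the Hub's public model-info endpoint.
MODEL_INFO_URL = "https://huggingface.co/api/models/{repo_id}"


def model_info_url(repo_id: str) -> str:
    # Keep the "/" between owner and model name; percent-encode anything unusual.
    return MODEL_INFO_URL.format(repo_id=urllib.parse.quote(repo_id, safe="/"))


def is_gated(info: dict) -> bool:
    # "gated" is False for open models, or "auto"/"manual" for gated ones.
    return bool(info.get("gated", False))


def fetch_model_info(repo_id: str, token: Optional[str] = None) -> dict:
    # Sending the token lets the Hub answer for models you already have access to.
    headers = {"Authorization": f"Bearer {token}"} if token else {}
    req = urllib.request.Request(model_info_url(repo_id), headers=headers)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

Usage would be `is_gated(fetch_model_info("mistralai/Mistral-7B-Instruct-v0.2"))`; if it returns `True`, request access on the model page first.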

For defog, I verified that it works with a token that has read permissions:

See if this one works as an alternative to the mistralai model:

HuggingFaceH4/zephyr-7b-beta (it is fine-tuned from Mistral-7B)

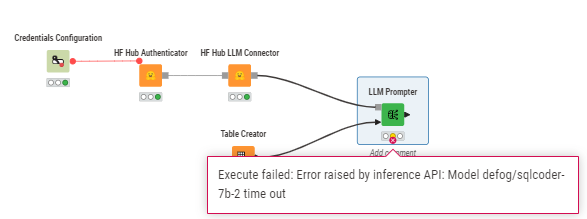

“defog/sqlcoder-7b-2” connects successfully in the HF Hub LLM Connector node, but it doesn’t work in the LLM Prompter node.

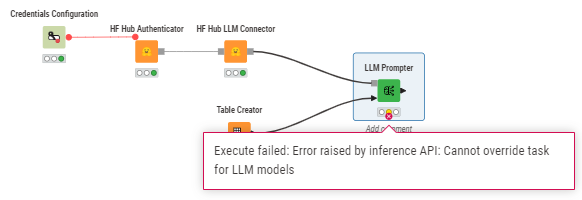

HuggingFaceH4/zephyr-7b-beta also connects fine in the HF Hub LLM Connector node, but it causes a different error.

Hey @ParkJunYong,

Let me loop one of the AI devs (@nemad) into the thread so he can look into the issue.

TL

Thank you for reporting this.

It seems that Hugging Face changed the behavior of their API, which makes it incompatible with our nodes, since they run an older client version.
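Until the update is out, one way to tell whether a model itself responds (independently of the KNIME client) is to send a plain text-generation request directly. This is a minimal sketch, assuming the serverless Inference API URL pattern that was documented at the time; Hugging Face has been changing these endpoints, so the URL is an assumption to verify against their current docs, and `build_generation_request` / `generate` are hypothetical helpers.

```python
import json
import urllib.request

# Assumption: serverless Inference API URL pattern; check Hugging Face's current docs.
INFERENCE_URL = "https://api-inference.huggingface.co/models/{repo_id}"


def build_generation_request(repo_id: str, token: str, prompt: str,
                             max_new_tokens: int = 64) -> urllib.request.Request:
    """Build a POST request with a plain text-generation payload (no task override)."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "return_full_text": False},
    }
    return urllib.request.Request(
        INFERENCE_URL.format(repo_id=repo_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def generate(repo_id: str, token: str, prompt: str) -> str:
    req = build_generation_request(repo_id, token, prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        result = json.load(resp)
    # Text-generation responses are typically a list like [{"generated_text": "..."}].
    return result[0]["generated_text"]
```

If `generate("defog/sqlcoder-7b-2", token, "...")` returns text here but the LLM Prompter node still fails, the problem is most likely on the client side rather than with the model or token.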

We are already working on an update that will hopefully fix this problem.

Kind regards,

Adrian

Thank you for your feedback. Do you know when the update will be released? When I use “mistralai/Mistral-7B-Instruct-v0.2”, I still get the same error.
