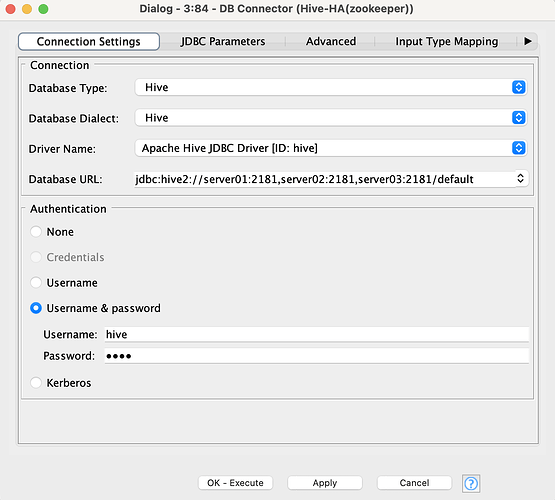

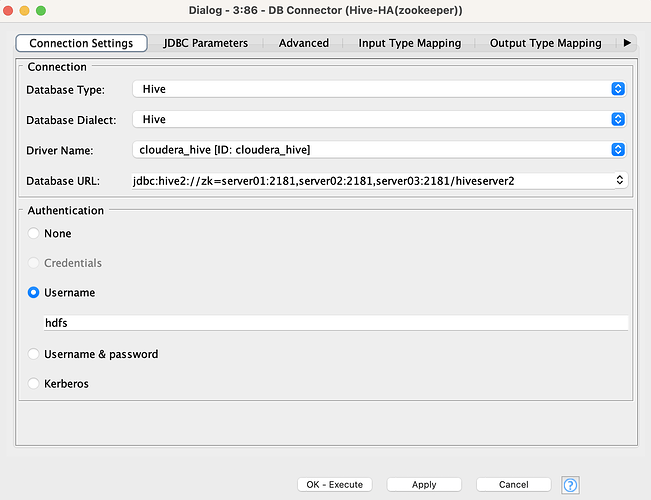

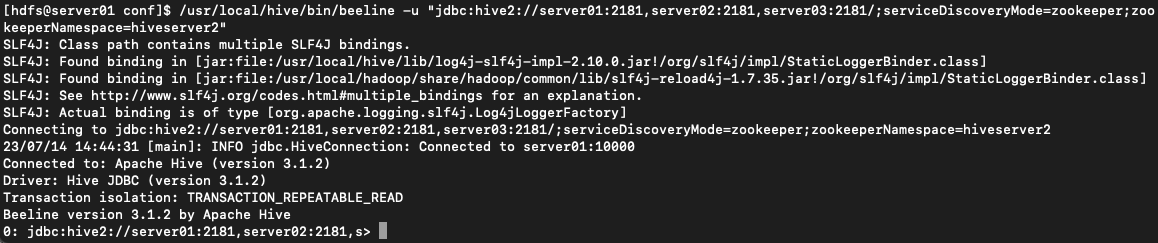

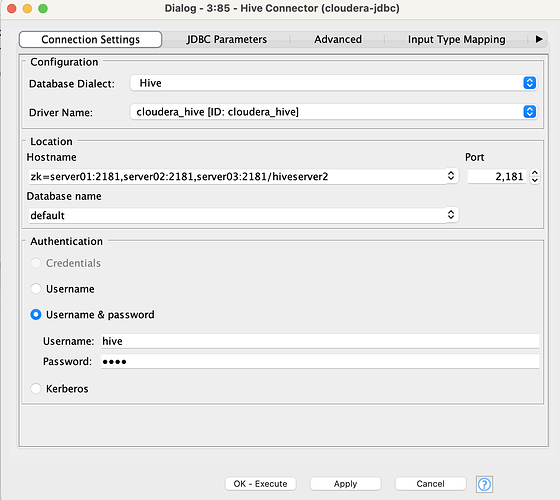

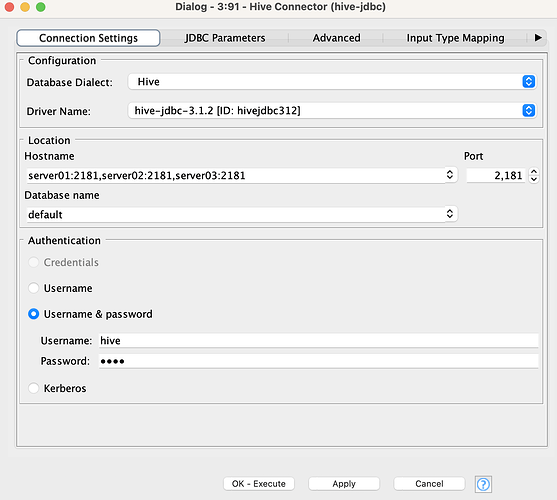

The hosts server01, server02 and server03 in the JDBC URL are the hostnames of my Linux test servers.

The connection works fine when I test it in DBeaver. Note that the connection has to be made from outside the cluster.

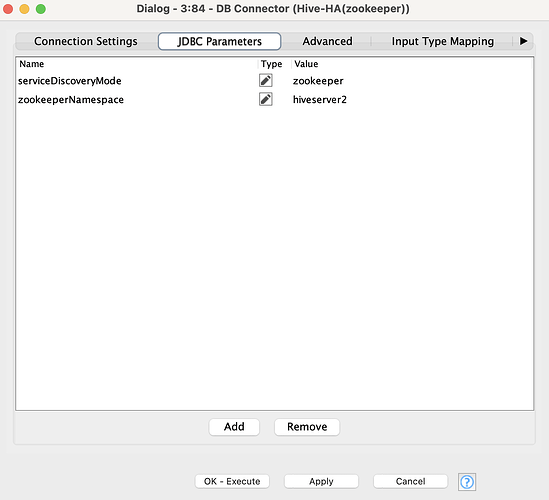

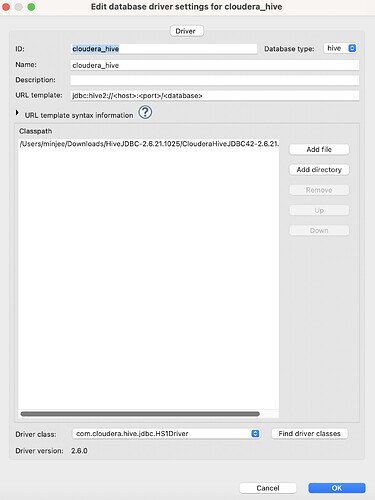

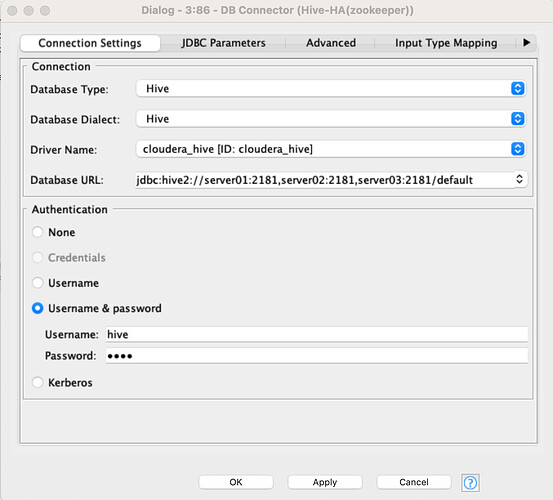

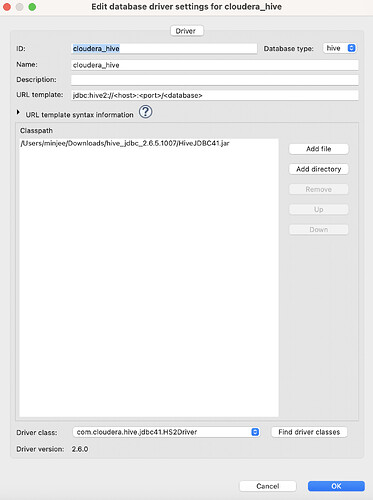

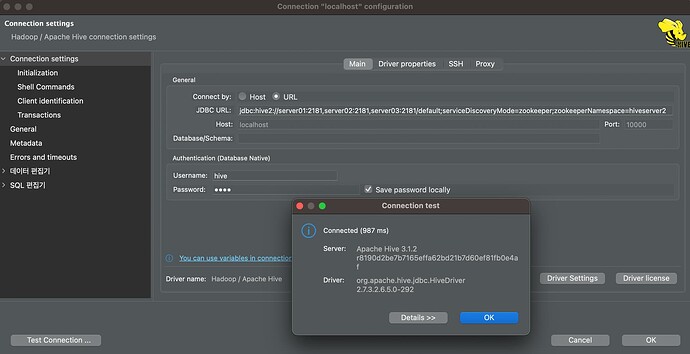

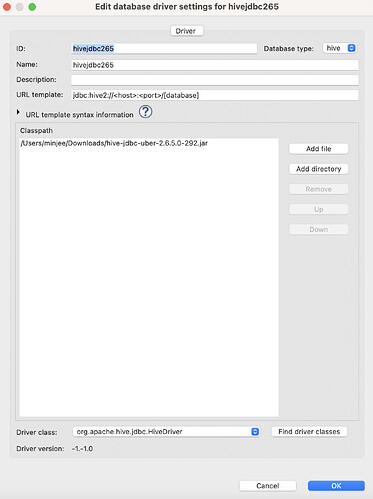

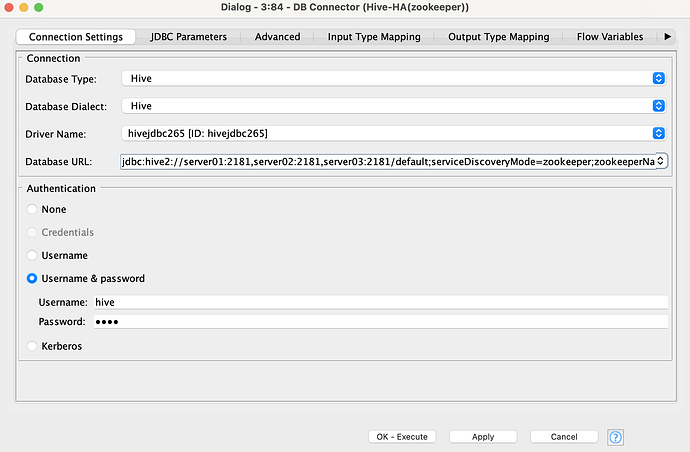

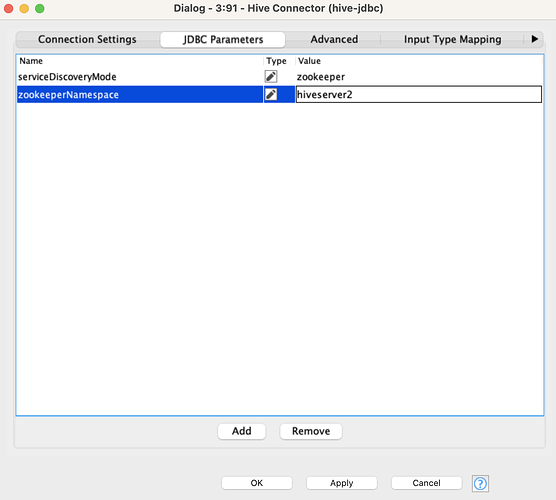

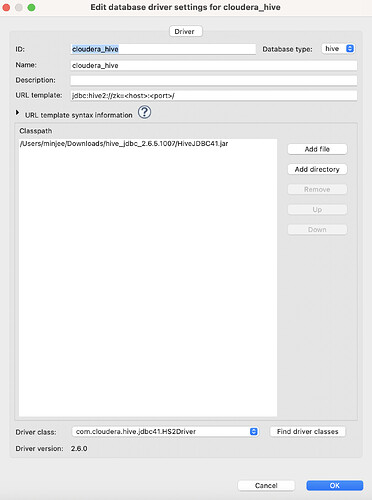

When I test the connection in KNIME with the same driver (added under KNIME AP Preferences > KNIME > Databases), I get the following output in the KNIME console:

```
DEBUG ExecuteAction Creating execution job for 1 node(s)...
DEBUG NodeContainer DB Connector 3:84 has new state: CONFIGURED_MARKEDFOREXEC
DEBUG NodeContainer DB Connector 3:84 has new state: CONFIGURED_QUEUED
DEBUG NodeContainer Using_Spark_File_to_HIVE_Kyuubi 3 has new state: EXECUTING
DEBUG DB Connector 3:84 DB Connector 3:84 doBeforePreExecution
DEBUG DB Connector 3:84 DB Connector 3:84 has new state: PREEXECUTE
DEBUG DB Connector 3:84 Adding handler 580ce38e-0fca-455e-bad8-df4e53a513b1 (DB Connector 3:84: ) - 1 in total
DEBUG DB Connector 3:84 DB Connector 3:84 doBeforeExecution
DEBUG DB Connector 3:84 DB Connector 3:84 has new state: EXECUTING
DEBUG DB Connector 3:84 DB Connector 3:84 Start execute
DEBUG DB Connector 3:84 New database session: DefaultDBSessionInformation(id=aa026643-f75b-4ed9-ab16-a7af0d2cc06d, dbType=DBType(id=hive, name=Hive, description=Hive), driverDefinition=DBDriverDefinition(id=hivejdbc265, name=hivejdbc265, version=-1.-1.0, driverClass=org.apache.hive.jdbc.HiveDriver, dbType=DBType(id=hive, name=Hive, description=Hive), description=, origin=USER), connectionController=org.knime.database.connection.UserDBConnectionController=(url=jdbc:hive2://server01:2181,server02:2181,server03:2181/default;serviceDiscoveryMode=zookeeper;zookeeperNamespace=hiveserver2, authenticationType=USER_PWD, user=hive, password=true), dialectId=hive, attributeValues={})
DEBUG DB Connector 3:84 Acquiring connection.
DEBUG DB Connector 3:84 An error of unexpected type occurred while checking if an object is a wrapper for org.knime.database.connection.impl.managing.transaction.BroadTransactionManagingWrapper.
DEBUG DB Connector 3:84 An error of unexpected type occurred while checking if an object is a wrapper for org.knime.database.connection.impl.managing.transaction.TransactionManagingWrapper.
WARN DB Connector 3:84 Couldn't fetch SQL keywords from database.
DEBUG DB Connector 3:84 The connection has been relinquished.
DEBUG DB Connector 3:84 The managed connection has been closed.
DEBUG DB Connector 3:84 The transaction managing connection has been closed.
DEBUG DB Connector 3:84 Database session information:DefaultDBSessionInformation(id=aa026643-f75b-4ed9-ab16-a7af0d2cc06d, dbType=DBType(id=hive, name=Hive, description=Hive), driverDefinition=DBDriverDefinition(id=hivejdbc265, name=hivejdbc265, version=-1.-1.0, driverClass=org.apache.hive.jdbc.HiveDriver, dbType=DBType(id=hive, name=Hive, description=Hive), description=, origin=USER), connectionController=org.knime.database.connection.UserDBConnectionController=(url=jdbc:hive2://server01:2181,server02:2181,server03:2181/default;serviceDiscoveryMode=zookeeper;zookeeperNamespace=hiveserver2, authenticationType=USER_PWD, user=hive, password=true), dialectId=hive, attributeValues={})
DEBUG DB Connector 3:84 Attribute details of database session aa026643-f75b-4ed9-ab16-a7af0d2cc06d:
AttributeValueRepository(attributes={knime.db.connection.jdbc.fetch_size=knime.db.connection.jdbc.fetch_size:10000, knime.db.connection.parameter.append.separator=knime.db.connection.parameter.append.separator:&, knime.db.capability.multi.dbs=knime.db.capability.multi.dbs:false, knime.db.dialect.sql.delimiter.identifier.opening=knime.db.dialect.sql.delimiter.identifier.opening:, knime.db.dialect.sql.table_reference.keyword=knime.db.dialect.sql.table_reference.keyword:, knime.db.dialect.sql.capability.operation.minus=knime.db.dialect.sql.capability.operation.minus:false, knime.db.dialect.sql.insert_into_table_with_select=knime.db.dialect.sql.insert_into_table_with_select:true, knime.db.agent.writer.autocommit_if_failing_on_error=knime.db.agent.writer.autocommit_if_failing_on_error:false, knime.db.dialect.sql.table_reference.derived_table=knime.db.dialect.sql.table_reference.derived_table:true, knime.db.connection.parameter.append=knime.db.connection.parameter.append:false, knime.db.connection.parameter.append.last.suffix=knime.db.connection.parameter.append.last.suffix:, knime.db.connection.jdbc.properties=knime.db.connection.jdbc.properties:#Mon Jul 17 10:55:01 KST 2023 , knime.db.connection.statement.cancellation.polling_period=knime.db.connection.statement.cancellation.polling_period:1000, knime.db.dialect.sql.capability.random=knime.db.dialect.sql.capability.random:true, knime.db.connection.metadata.table.types=knime.db.connection.metadata.table.types:TABLE, VIEW, knime.db.agent.writer.batch.enabled=knime.db.agent.writer.batch.enabled:true, knime.db.connection.statement.cancellation.enabled=knime.db.connection.statement.cancellation.enabled:true, knime.db.connection.metadata.configure.timeout=knime.db.connection.metadata.configure.timeout:3, knime.db.connection.metadata.configure.enabled=knime.db.connection.metadata.configure.enabled:true, knime.db.connection.metadata.query.flatten=knime.db.connection.metadata.query.flatten:false,
knime.db.dialect.sql.create_table.define_constraint_name=knime.db.dialect.sql.create_table.define_constraint_name:false, knime.db.dialect.sql.identifier.delimiting.onlyspaces=knime.db.dialect.sql.identifier.delimiting.onlyspaces:false, knime.db.connection.time_zone=knime.db.connection.time_zone:UTC, knime.db.dialect.sql.capability.random_seed=knime.db.dialect.sql.capability.random_seed:true, knime.db.connection.reconnect.timeout=knime.db.connection.reconnect.timeout:0, knime.db.dialect.sql.identifier.replace.character.non_word.enabled=knime.db.dialect.sql.identifier.replace.character.non_word.enabled:false, knime.db.dialect.sql.create.table.temporary=knime.db.dialect.sql.create.table.temporary:TEMPORARY, knime.db.dialect.sql.minus_operator.keyword=knime.db.dialect.sql.minus_operator.keyword:, knime.db.dialect.sql.delimiter.identifier.closing=knime.db.dialect.sql.delimiter.identifier.closing:, knime.db.connection.logger.log.errors=knime.db.connection.logger.log.errors:false, knime.db.dialect.sql.capability.expression.case=knime.db.dialect.sql.capability.expression.case:true, knime.db.dialect.sql.identifier.replace.character.non_word.replacement=knime.db.dialect.sql.identifier.replace.character.non_word.replacement:, knime.db.connection.kerberos_delegation.service=knime.db.connection.kerberos_delegation.service:, knime.db.connection.reconnect=knime.db.connection.reconnect:false, knime.db.connection.transaction.enabled=knime.db.connection.transaction.enabled:false, knime.db.connection.parameter.append.initial.separator=knime.db.connection.parameter.append.initial.separator:?, knime.db.dialect.sql.create_table.if_not_exists=knime.db.dialect.sql.create_table.if_not_exists:IF NOT EXISTS, knime.db.dialect.sql.drop_table=knime.db.dialect.sql.drop_table:true, knime.db.connection.validation_query=knime.db.connection.validation_query:, knime.db.connection.kerberos_delegation.host_regex=knime.db.connection.kerberos_delegation.host_regex:.(?:@|//)([^:;,/\]).*,
knime.db.connection.parameter.append.user_and_password=knime.db.connection.parameter.append.user_and_password:false, knime.db.connection.logger.enabled=knime.db.connection.logger.enabled:false, knime.db.connection.restore=knime.db.connection.restore:false, knime.db.agent.writer.fail_on_missing_in_where_clause=knime.db.agent.writer.fail_on_missing_in_where_clause:true, knime.db.connection.init_statement=knime.db.connection.init_statement:}, none default values={})
DEBUG DB Connector 3:84 Acquiring connection.
DEBUG DB Connector 3:84 The connection has been relinquished.
DEBUG DB Connector 3:84 The managed connection has been closed.
DEBUG DB Connector 3:84 The transaction managing connection has been closed.
DEBUG DB Connector 3:84 reset
DEBUG DB Connector 3:84 Closing the connection manager of the session: DefaultDBSessionInformation(id=aa026643-f75b-4ed9-ab16-a7af0d2cc06d, dbType=DBType(id=hive, name=Hive, description=Hive), driverDefinition=DBDriverDefinition(id=hivejdbc265, name=hivejdbc265, version=-1.-1.0, driverClass=org.apache.hive.jdbc.HiveDriver, dbType=DBType(id=hive, name=Hive, description=Hive), description=, origin=USER), connectionController=org.knime.database.connection.UserDBConnectionController=(url=jdbc:hive2://server01:2181,server02:2181,server03:2181/default;serviceDiscoveryMode=zookeeper;zookeeperNamespace=hiveserver2, authenticationType=USER_PWD, user=hive, password=true), dialectId=hive, attributeValues={})
ERROR DB Connector 3:84 Execute failed: DB Session aa026643-f75b-4ed9-ab16-a7af0d2cc06d is invalid. Method not supported
DEBUG DBConnectionManager Closing the database connection: URL="jdbc:hive2://server01:2181,server02:2181,server03:2181/default;serviceDiscoveryMode=zookeeper;zookeeperNamespace=hiveserver2", user="hive"
DEBUG DB Connector 3:84 DB Connector 3:84 doBeforePostExecution
DEBUG DB Connector 3:84 DB Connector 3:84 has new state: POSTEXECUTE
DEBUG DB Connector 3:84 DB Connector 3:84 doAfterExecute - failure
DEBUG DB Connector 3:84 reset
DEBUG DB Connector 3:84 clean output ports.
DEBUG DB Connector 3:84 Removing handler 580ce38e-0fca-455e-bad8-df4e53a513b1 (DB Connector 3:84: ) - 0 remaining
DEBUG DB Connector 3:84 DB Connector 3:84 has new state: IDLE
DEBUG DB Connector 3:84 Configure succeeded. (DB Connector)
DEBUG DB Connector 3:84 DB Connector 3:84 has new state: CONFIGURED
DEBUG DB Connector 3:84 Using_Spark_File_to_HIVE_Kyuubi 3 has new state: IDLE
DEBUG DBConnectionManager The database connection has been closed successfully: URL="jdbc:hive2://server01:2181,server02:2181,server03:2181/default;serviceDiscoveryMode=zookeeper;zookeeperNamespace=hiveserver2", user="hive"
DEBUG NodeContainerEditPart DB Connector 3:84 (CONFIGURED)
```
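To narrow down whether the failure comes from the driver jar itself or from how KNIME uses it, a minimal plain-JDBC check could be run outside both tools with the same Hive JDBC jar on the classpath. This is only a sketch under assumptions: the class name `HiveJdbcCheck`, the helpers `buildZkUrl` and `probe`, and the `SELECT 1` test query are hypothetical, and treating the wrapper check and the SQL keyword fetch in the log above as the standard JDBC calls `Connection.isWrapperFor` and `DatabaseMetaData.getSQLKeywords` is a guess that the log does not confirm.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.SQLFeatureNotSupportedException;
import java.sql.Statement;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical plain-JDBC check against HiveServer2 via ZooKeeper service discovery.
// Assumes the same Hive JDBC jar used in DBeaver/KNIME is on the classpath.
final class HiveJdbcCheck {

    // Builds a ZooKeeper-discovery URL of the same shape as the one in the log:
    // jdbc:hive2://host1:port,host2:port/db;serviceDiscoveryMode=zookeeper;zookeeperNamespace=ns
    static String buildZkUrl(List<String> hosts, int zkPort, String database, String namespace) {
        String quorum = hosts.stream()
                .map(h -> h + ":" + zkPort)
                .collect(Collectors.joining(","));
        return "jdbc:hive2://" + quorum + "/" + database
                + ";serviceDiscoveryMode=zookeeper;zookeeperNamespace=" + namespace;
    }

    // A single JDBC call whose support by the driver we want to check.
    interface JdbcCall {
        Object run() throws SQLException;
    }

    // Runs the call and prints whether it succeeded or which SQLException it raised.
    static void probe(String label, JdbcCall call) {
        try {
            System.out.println(label + ": OK -> " + call.run());
        } catch (SQLFeatureNotSupportedException e) {
            System.out.println(label + ": not supported (" + e.getMessage() + ")");
        } catch (SQLException e) {
            System.out.println(label + ": failed (" + e.getMessage() + ")");
        }
    }

    public static void main(String[] args) throws Exception {
        String url = buildZkUrl(List.of("server01", "server02", "server03"), 2181, "default", "hiveserver2");
        if (args.length < 2) {
            System.out.println("Usage: java HiveJdbcCheck <user> <password>");
            System.out.println("URL: " + url);
            return;
        }
        // Load the driver class explicitly; harmless if the jar already self-registers.
        Class.forName("org.apache.hive.jdbc.HiveDriver");
        try (Connection conn = DriverManager.getConnection(url, args[0], args[1]);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("SELECT 1")) {
            rs.next();
            System.out.println("SELECT 1 -> " + rs.getInt(1));
            // Calls that may correspond to the wrapper check and keyword fetch in the KNIME log (assumption).
            probe("isWrapperFor", () -> conn.isWrapperFor(Connection.class));
            probe("getSQLKeywords", () -> conn.getMetaData().getSQLKeywords());
            probe("isValid(5)", () -> conn.isValid(5));
        }
    }
}
```

It could be compiled with `javac HiveJdbcCheck.java` and run as `java -cp <path-to-driver-jar>:. HiveJdbcCheck hive <password>`, where the jar path is a placeholder for the driver file registered in KNIME. If `SELECT 1` succeeds but one of the probed calls reports "Method not supported", that would point at a driver capability gap rather than at the URL or network access.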

Thanks.