Hi @Nick_Reader, I have a relatively simple Python script that ChatGPT built to my specification, and I run it periodically, either manually or on an automated schedule using Task Scheduler.

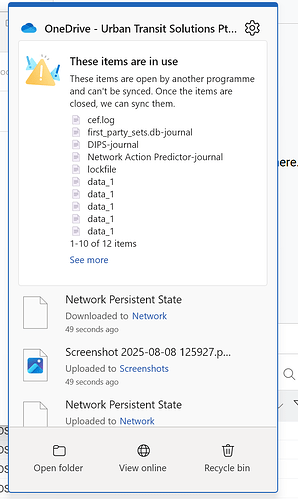

I wanted something that doesn’t keep archiving the same unchanging workflows that I wrote months ago, but instead detects when workflows have been modified recently. I also wanted the archive to be reasonably compact, with each workflow archive self-contained rather than made up of lots of small files, which take so long to back up to cloud storage such as OneDrive. So I decided to zip each individual workflow, which effectively keeps it as an “exported” workflow that is easily recovered and quick and easy to back up to the cloud should I wish.

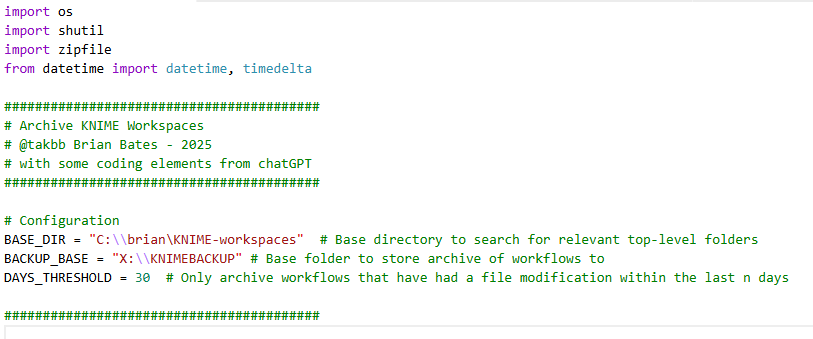

I configure the Python script with the base folder for all my KNIME workspaces.

e.g.

I have a KNIME 4.7 workspace

c:\brian\KNIME-workspaces\KNIME47

and a KNIME 5.x workspace as

c:\brian\KNIME-workspaces\KNIME5

(plus other workspaces)

thus I have a “base folder” for all my KNIME workspaces as

c:\brian\KNIME-workspaces

I then have an external drive X: where I want to store the archives under X:\KNIMEBACKUP

For me this is an external drive, but it could be a network share or a cloud folder such as a OneDrive folder.

I also tell it the max age in days for workflows that should be added to the archive. I set this at 30 days, which means any workflow that has changed within the past 30 days is included.
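To give a rough idea of what that configuration amounts to, here is a minimal sketch. The variable names and the `is_recent` helper are my own illustration and may not match what is in the actual script; only the paths and the 30-day value come from my setup described above.

```python
from datetime import datetime, timedelta
from pathlib import Path

# Hypothetical config names; the real script's variables may be called something else.
BASE_FOLDER = Path(r"c:\brian\KNIME-workspaces")   # base folder for all workspaces
ARCHIVE_FOLDER = Path(r"X:\KNIMEBACKUP")           # where the zip archives go
MAX_AGE_DAYS = 30                                  # only archive workflows changed this recently


def is_recent(modified: datetime, now: datetime, max_age_days: int = MAX_AGE_DAYS) -> bool:
    """Return True if `modified` is no more than `max_age_days` days before `now`."""
    return now - modified <= timedelta(days=max_age_days)
```

With a 30-day limit, a workflow last changed on 30 July 2025 would still be picked up by a run on 10 August 2025, while one last changed on 1 June 2025 would be skipped.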

Running this backup on a continual basis means that all “recently changed” workflows are included in the archive, but it doesn’t continue to add older (unchanging) workflows, which would just waste time.

So the script looks for changing workflows based on latest file modification date. When it finds one that has changed within the past 30 days, it zips up the workflow and stores a copy in a folder structure that reflects the folder structure of my original workspace.
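As a sketch of how that could be done (not necessarily how my script does it), the latest modification date can be found by walking every file in the workflow folder, and the destination folder can be derived from the workflow's path relative to the base folder. The function names `latest_modification` and `mirrored_folder` are made up for this example.

```python
import os
from datetime import datetime
from pathlib import Path


def latest_modification(workflow_dir: Path) -> datetime:
    """Return the newest modification time of any file under workflow_dir."""
    newest = 0.0
    for root, _dirs, files in os.walk(workflow_dir):
        for name in files:
            newest = max(newest, os.path.getmtime(os.path.join(root, name)))
    # Note: a folder with no files at all yields the epoch (timestamp 0).
    return datetime.fromtimestamp(newest)


def mirrored_folder(workflow_dir: Path, base: Path, archive: Path) -> Path:
    """Map the workflow's parent folder under `base` to the same relative path under `archive`."""
    return archive / workflow_dir.parent.relative_to(base)
```

So a workflow at `<base>\KNIME5\MyGroup\MyWorkflow` would be zipped into `<archive>\KNIME5\MyGroup`, where `MyGroup` and `MyWorkflow` are just placeholder names.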

Note: the script looks for the terms “KNIME” and “WORKSPACE” in the full folder path name, and uses this to easily determine that a folder is a KNIME workspace. If yours don’t have these words somewhere in the path, you’d need to either adjust the script or adjust your workspace names. In my case, because all my workspaces are below a “KNIME-workspaces” folder, they all qualify no matter what the workspace itself is called!
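The check is roughly along these lines. This is a simplified sketch with a hypothetical function name, and I'm assuming a case-insensitive match here, which is what lets a folder such as “KNIME-workspaces” qualify.

```python
def looks_like_workspace_path(path: str) -> bool:
    """Return True if both 'KNIME' and 'WORKSPACE' appear somewhere in the full path.

    Assumption: the comparison ignores case.
    """
    upper = path.upper()
    return "KNIME" in upper and "WORKSPACE" in upper
```

If your workspaces live somewhere like `c:\projects\analytics`, a test like this would return False, and that is the part you would need to adjust.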

Each zip file is given a datestamp as a prefix to its name which is the date of the latest file that has been modified in that workflow, so if I have a workflow called “Compare Oracle DB Parameters”, and it was last changed on 30 July 2025, it will be zipped as “20250730-Compare Oracle DB Parameters.zip”.

If I ran the archive script again a couple of weeks later and it sees that the workflow has been updated on 10 August 2025, a new zip will be created as “20250810-Compare Oracle DB Parameters.zip”. So I will now have two zip archives of the workflow with the workflow as at the given datestamp. If I run my archive script at the end of each day, I’d have a daily archive of changes.
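For anyone curious, the naming and zipping could be sketched as below using Python's standard `zipfile` module. The function names are hypothetical, and keeping the workflow folder as the top-level entry inside the zip is my assumption about what makes it import cleanly; the actual script may differ in the details.

```python
import zipfile
from datetime import datetime
from pathlib import Path


def archive_name(workflow_name: str, latest: datetime) -> str:
    """Prefix the workflow name with the date of its latest change, e.g. 20250730-Name.zip."""
    return f"{latest:%Y%m%d}-{workflow_name}.zip"


def zip_workflow(workflow_dir: Path, dest_dir: Path, latest: datetime) -> Path:
    """Zip one workflow folder into dest_dir and return the path of the new zip."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / archive_name(workflow_dir.name, latest)
    with zipfile.ZipFile(target, "w", zipfile.ZIP_DEFLATED) as zf:
        for file in sorted(workflow_dir.rglob("*")):
            if file.is_file():
                # Assumption: store paths relative to the parent so the workflow
                # folder itself is the top-level entry inside the zip.
                zf.write(file, file.relative_to(workflow_dir.parent).as_posix())
    return target
```

Because the date is part of the file name, a change on a later date produces a new zip alongside the earlier one rather than replacing it.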

As you can see, this is geared towards creating a daily, weekly, or ad hoc backup rather than a continuous “to the minute” backup, but it works for me.

If I ever need to recover a previous copy of a workflow, I simply import the required zip file (since .knwf files are really just zipped workflows anyway!)

I’ve uploaded my Python script to the community hub, where you can find it as a .txt file in my “experimental/utilities” space.

Save it and remove the .txt extension. Then just change the config at the top and execute it with Python. Of course, you could modify it to take the various parameters from the command line, and I may do that one day, but for now it does the job I need it to do.
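If you did want command-line parameters, something like the following `argparse` sketch would be one way to go about it. To be clear, this is not in the current script, and the option names are just ones I've picked for illustration.

```python
import argparse
from pathlib import Path


def parse_args(argv=None):
    """Parse the three settings that are currently hard-coded at the top of the script."""
    parser = argparse.ArgumentParser(
        description="Archive recently changed KNIME workflows as dated zip files."
    )
    # Hypothetical option names; pick whatever suits you.
    parser.add_argument("--base-folder", type=Path, required=True,
                        help="base folder containing all KNIME workspaces")
    parser.add_argument("--archive-folder", type=Path, required=True,
                        help="folder where the zip archives are stored")
    parser.add_argument("--max-age-days", type=int, default=30,
                        help="only archive workflows changed within this many days")
    return parser.parse_args(argv)
```

A Task Scheduler job could then pass the base folder, the archive folder, and the max age as arguments instead of relying on values edited into the file.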

A note of caution: It works for me, and it should work as stated, but your folder/workspace structure/naming may mean it doesn’t work for you. Obviously use at your own risk, and check that it is working for you before coming to rely on it!!