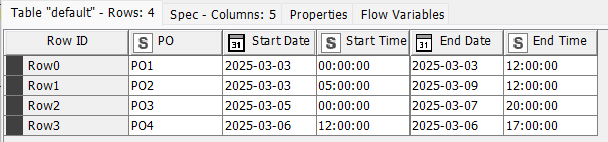

Hi there,

Is it possible to create a loop that adds 1 day to the start date until it equals the end date, and then collects all the results into a single table?

Loop Query.knwf (56.2 KB)

Does the time make any difference or only the dates?

Primarily the dates. The time boundaries need to update accordingly whenever a new date is set.

For example,

Initial PO1 03-Mar 08:00:00 to 05-Mar 08:00:00

then final outcome

PO1 03 Mar 08:00:00 to 03 Mar 24:00:00

PO1 04 Mar 00:00:00 to 04 Mar 24:00:00

PO1 05 Mar 00:00:00 to 05 Mar 08:00:00

Do you have the date/time information stored somewhere as an actual date/time rather than the date as date and the time as a string as in your workflow? That would probably be a better starting point.

I am replicating it from the raw data source that I have to work with.

I could do some pre-work to convert it to a date & time format, but I need to convert it back to the original format where date and time are split, as this data is passed through other workflows (besides KNIME).

Hi,

I’ve adjusted your workflow using both legacy and new Date & Time nodes to achieve the format you were looking for (I assume). Check the screenshot below and review the revised flow.

Best,

Alpay

Loop Query 2.knwf (45.1 KB)

Hi,

Thanks for sharing, but that is not what I am looking for. What I want is this: for every PO whose start date and end date differ, add 1 day to the start date until it matches the end date. It should be dynamic, since the difference can be any number of days.

Got it!

Great example. Please check the screenshots and the attached flow. Jobs and dates have been sorted and manipulated accordingly. The flow is dynamic—it runs for every job and duration combination, as I have tested.

Best,

Alpay

Loop Query 2.knwf (79.9 KB)

In addition to @alpayzeybek's solution, you can use the “Create Date&Time Range” node, extract the dates, and let the GroupBy node calculate the min/max entry for each date.
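For readers following along outside KNIME, here is a rough Python sketch of the same idea: generate timestamps at a fixed step, group them by calendar date, and keep the first and last timestamp per date. The function name `range_then_group` is made up for illustration, and appending the end timestamp to the range is an assumption of this sketch, not a statement about how the KNIME node behaves.

```python
from datetime import datetime, timedelta
from itertools import groupby


def range_then_group(start, end, step):
    """Generate timestamps from start to end, then keep min/max per calendar day."""
    stamps = []
    current = start
    while current < end:
        stamps.append(current)
        current += step
    stamps.append(end)  # assumption: the range includes the end timestamp itself

    # The stamps are already sorted, so grouping on the date gives one group per day.
    rows = []
    for _day, group in groupby(stamps, key=lambda ts: ts.date()):
        group = list(group)
        rows.append((group[0], group[-1]))  # (min, max) for that day
    return rows


rows = range_then_group(
    datetime(2025, 3, 3, 8, 0, 0),
    datetime(2025, 3, 5, 8, 0, 0),
    timedelta(minutes=1),
)
for first, last in rows:
    print(first, "to", last)
```

In this sketch the step decides how close the last timestamp of a day gets to midnight, and the number of generated timestamps grows as the step shrinks.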

I ran into a problem when my time includes seconds: the output carries the same seconds value into every iteration.

E.g.

2025-03-03 08:01:05 to 2025-03-05 12:00:00

output is

2025-03-03 08:01:05 to 2025-03-03 23:59:05

2025-03-04 00:00:05 to 2025-03-04 23:59:05

2025-03-05 00:00:05 to 2025-03-04 12:00:00

How can I get 23:59:59 and 00:00:00 instead?

I changed the interval parameter from 1m to 1s, and that produced the result I need, but is there a better way? The processing time increases significantly.
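One way to avoid generating a row per second is to compute the day boundaries directly, so the work scales with the number of days rather than the number of seconds. Below is a minimal Python sketch of that idea, not a KNIME node configuration. The function name `split_by_day` is hypothetical, and it assumes `start <= end` and that 23:59:59 is the wanted end-of-day value.

```python
from datetime import datetime, time, timedelta


def split_by_day(start, end):
    """Split the span start..end into one (start, end) pair per calendar day."""
    rows = []
    current = start
    while current.date() < end.date():
        # Close the current day at 23:59:59 ...
        end_of_day = datetime.combine(current.date(), time(23, 59, 59))
        rows.append((current, end_of_day))
        # ... and open the next day at 00:00:00.
        current = datetime.combine(current.date() + timedelta(days=1), time(0, 0, 0))
    rows.append((current, end))  # the last day ends at the real end time
    return rows


rows = split_by_day(datetime(2025, 3, 3, 8, 1, 5), datetime(2025, 3, 5, 12, 0, 0))
for day_start, day_end in rows:
    print(day_start, "to", day_end)
```

For the example above this produces three rows, and the seconds of the original start time only appear in the first one.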

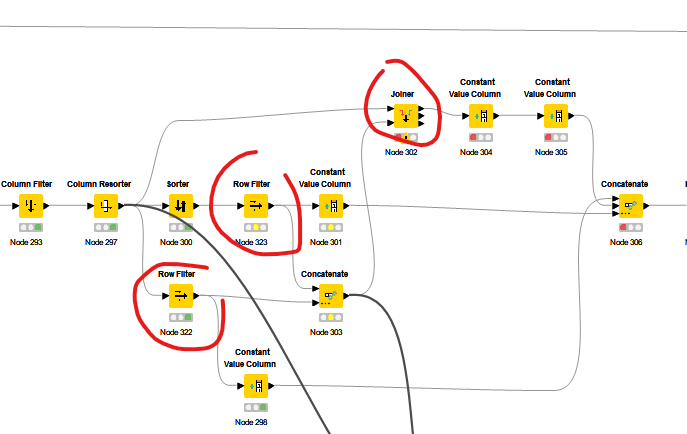

Could you show me the settings of the Row Filter nodes and the Joiner? My KNIME version is unable to update the nodes properly.

Hi,

Extracting First and Last Rows:

The process identifies the first and last rows of a dataset.

The first row’s start time and the last row’s end time are modified.

Row Filtering:

Two Row Filter nodes are applied to extract the first row and the last row.

Filtering the first row is simple. Then the dataset is sorted in reverse, and the new first row (originally the last row) is filtered.

Joining the Middle Rows:

A left outer join is used in a Joiner node to pick the rows that are not the first or last row.

Concatenation:

The adjusted first row, middle rows, and modified last row are combined using concatenation.
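The steps above can be sketched in plain Python for anyone who wants to check the logic outside KNIME. This is only an illustration under assumptions: the helper names `full_day_rows` and `adjust_first_and_last` are made up, the rows are assumed to be full-day rows (00:00:00 to 23:59:59), and list slicing stands in for the Row Filter and Joiner nodes.

```python
from datetime import datetime, time, timedelta


def full_day_rows(first_day, last_day):
    """One (00:00:00, 23:59:59) row per calendar day, both days included."""
    rows = []
    day = first_day
    while day <= last_day:
        rows.append((datetime.combine(day, time(0, 0, 0)),
                     datetime.combine(day, time(23, 59, 59))))
        day += timedelta(days=1)
    return rows


def adjust_first_and_last(rows, start, end):
    """Patch the first row's start and the last row's end, keep the middle rows."""
    if len(rows) == 1:
        # First and last row are the same row, so both ends are patched at once.
        return [(start, end)]
    first = (start, rows[0][1])   # first row: real start time
    middle = rows[1:-1]           # rows that are neither first nor last
    last = (rows[-1][0], end)     # last row: real end time
    return [first] + middle + [last]  # concatenation


start = datetime(2025, 3, 3, 8, 1, 5)
end = datetime(2025, 3, 5, 12, 0, 0)
result = adjust_first_and_last(full_day_rows(start.date(), end.date()), start, end)
for row_start, row_end in result:
    print(row_start, "to", row_end)
```

The single-row branch matters for jobs that start and end on the same day, where the first and last row are one and the same.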

I have also uploaded a newer version including the annotation for that part.

Below are the screenshots and the revised flow.

Best,

Alpay

Loop Query 2-x1.knwf (136.0 KB)

Hi,

In the “Create Date&Time Range” node I had set the interval to minutes, which was not correct for your case. I’ve now changed it to seconds, and it works as you want.

The change of “23:59:59” can be as follows (“quick and dirty”):

I updated my Workflow in the KNIME Hub:

Thank you both, @ActionAndi and @alpayzeybek, for sharing your solutions. I tested both and they work for my case. Great learnings.