Hi @JaeHwanChoi,

You might like to export the details as individual columns using something like this in the PySpark Script Source:

models = [

{ 'name': 'model_1', 'indicator_1': 0.65, 'indicator_2': 0.63, 'indicator_3': 0.88 },

{ 'name': 'model_2', 'indicator_1': 0.83, 'indicator_2': 0.76, 'indicator_3': 0.93 }

]

resultDataFrame1 = spark.createDataFrame(models)

This results in such a table:

+-----------+-----------+-----------+-------+

|indicator_1|indicator_2|indicator_3|name |

+-----------+-----------+-----------+-------+

|0.65 |0.63 |0.88 |model_1|

|0.83 |0.76 |0.93 |model_2|

+-----------+-----------+-----------+-------+

And this schema:

root

|-- indicator_1: double (nullable = true)

|-- indicator_2: double (nullable = true)

|-- indicator_3: double (nullable = true)

|-- name: string (nullable = true)

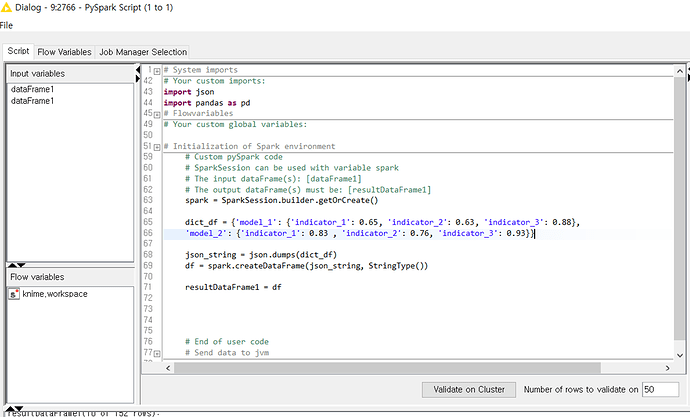

If you really like to put everything into one column and row, you can do something like this:

models = {

'model_1': { 'indicator_1': 0.65, 'indicator_2': 0.63, 'indicator_3': 0.88 },

'model_2': { 'indicator_1': 0.83, 'indicator_2': 0.76, 'indicator_3': 0.93 }

}

resultDataFrame1 = spark.createDataFrame([{ 'dict_detail': models }])

This results in such a table:

+--------------------------------------------------------------------------------------------------------------------------------------------------------+

|dict_detail |

+--------------------------------------------------------------------------------------------------------------------------------------------------------+

|{model_2 -> {indicator_1 -> 0.83, indicator_2 -> 0.76, indicator_3 -> 0.93}, model_1 -> {indicator_1 -> 0.65, indicator_2 -> 0.63, indicator_3 -> 0.88}}|

+--------------------------------------------------------------------------------------------------------------------------------------------------------+

And this is the schema:

root

|-- dict_detail: map (nullable = true)

| |-- key: string

| |-- value: map (valueContainsNull = true)

| | |-- key: string

| | |-- value: double (valueContainsNull = true)```

You can find more information about PySpark in the official documentation: PySpark documentation

Cheers,

Sascha