Hello.

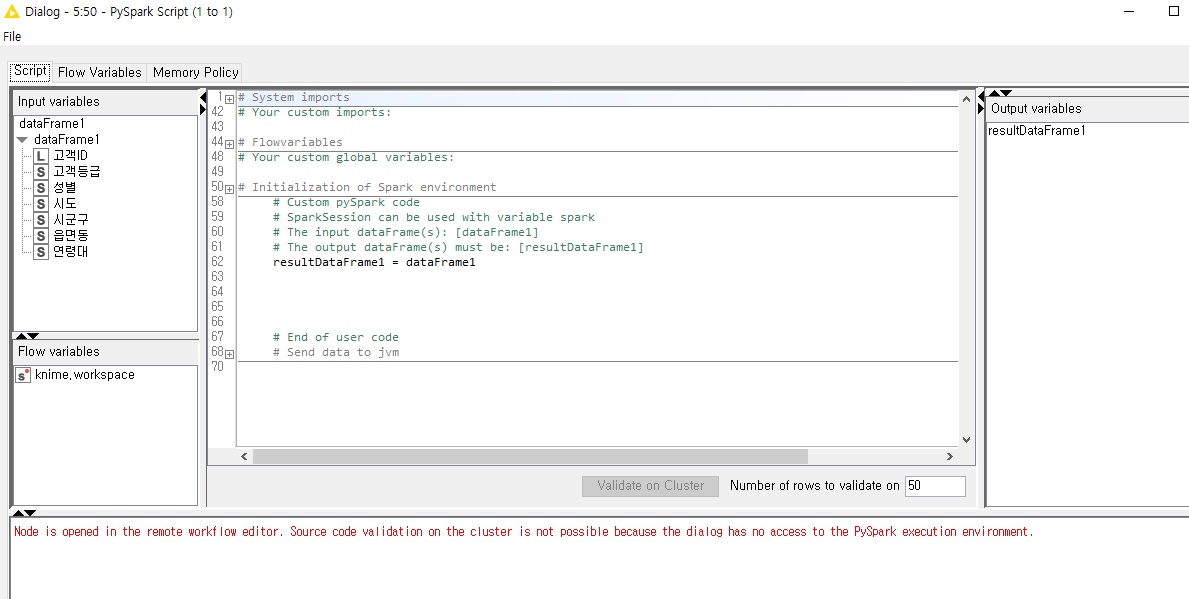

I have question about how to use Pyspark script node with Spark(Livy) environment.

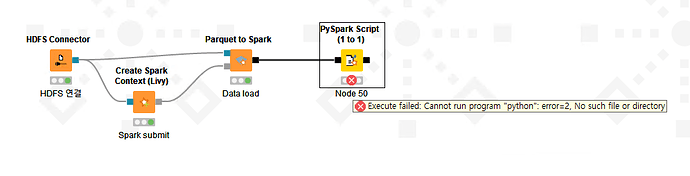

Here is my workflow.

The error message was as below:

Execute failed: Cannot run program “python”: error=2, No such file or directory

I set the config files as below:

[executor.epf]

/instance/org.knime.workbench.core/database_timeout=120

'# Add a mount point for this server. This is useful for the new filehandling nodes.

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/active=true

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/address=${origin:KNIME-EJB-Address}

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/factoryID=com.knime.explorer.server

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/mountID=${origin:KNIME-Default-Mountpoint-ID}

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/mountpointNumber=1

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/restPath=${origin:KNIME-Context-Root}/rest

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/user=${sysprop:user.name}

/instance/org.knime.workbench.explorer.view/mountpointNode/KNIME-Server/useRest=true

/instance/org.knime.conda/condaDirectoryPath=/opt/miniconda3

/instance/org.knime.python2/defaultPythonOption=python3

/instance/org.knime.python2/python2CondaEnvironmentDirectoryPath=/opt/miniconda3

/instance/org.knime.python2/python2Path=python

/instance/org.knime.python2/python3CondaEnvironmentDirectoryPath=/opt/miniconda3/envs/py3_knime

/instance/org.knime.python2/python3Path=/opt/knime/4.15/workflow_repository/config/client-profiles/executor/python3.exe

/instance/org.knime.python2/pythonEnvironmentType=manual

/instance/org.knime.python2/serializerId=org.knime.serialization.flatbuffers.column

[knime.ini]

-profileLocation

'http://localhost:8080/knime/rest/v4/profiles/contents

-profileList

executor

I’d appreciate it if you could tell me how to solve this problem.

And If you need more information, please tell me ![]()

Thanks,

hhkim