Hello,

I have created a workflow that produces a table with approximately 150k rows.

I want to load this output table into a table that already exists in my Amazon Redshift database.

The problem is that whichever node I use to write or insert the data into the database table, it takes far too long, on the order of hours or days. This is not normal.

Is there a way to make this faster?

For comparison, the same output from KNIME is written to an Excel file in a few seconds.

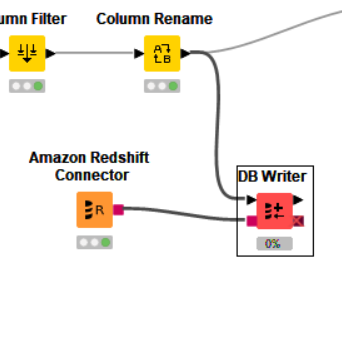

Here is the end of my workflow.

Do you have any idea what I can do, or which node I could use instead of the writer node?

Hi,

The DB Writer node uses the DB’s SQL INSERT statements to write rows, which can be slow because the database updates indexes, statistics, etc. after every insert. A faster way is to use the database’s bulk load functionality via the DB Loader node, which also supports Redshift. Please have a look at the workflow in this forum post for some inspiration. This should be much faster.
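To make the difference concrete outside of KNIME, here is a minimal Python sketch of the bulk-load idea: all rows are serialized into one CSV payload, and a single Redshift `COPY` statement loads that file, instead of sending one INSERT per row. The table name, S3 URI, and IAM role below are hypothetical placeholders, and the sketch only builds the file content and the statement; it does not upload anything or connect to a database. It illustrates the principle and is not a description of what the DB Loader node does internally.

```python
import csv
import io


def rows_to_csv(rows):
    """Serialize all rows into one CSV payload, the kind of file a bulk load reads."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerows(rows)
    return buffer.getvalue()


def build_copy_statement(table, s3_uri, iam_role):
    """Build one Redshift COPY statement that loads the whole CSV file at once.

    All three arguments are placeholders supplied by the caller (assumptions,
    not values from this thread).
    """
    return (
        f"COPY {table} "
        f"FROM '{s3_uri}' "
        f"IAM_ROLE '{iam_role}' "
        f"FORMAT AS CSV;"
    )


if __name__ == "__main__":
    # Hypothetical sample data standing in for the workflow's output table.
    sample_rows = [(1, "a"), (2, "b")]
    print(rows_to_csv(sample_rows))
    print(
        build_copy_statement(
            "my_table",  # hypothetical target table
            "s3://example-bucket/out.csv",  # hypothetical S3 location
            "arn:aws:iam::123456789012:role/ExampleRole",  # hypothetical role
        )
    )
```

With 150k rows, the row-wise approach means 150k separate INSERT statements, whereas the bulk approach means one file and one `COPY` statement.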

Kind regards,

Alexander

thank you

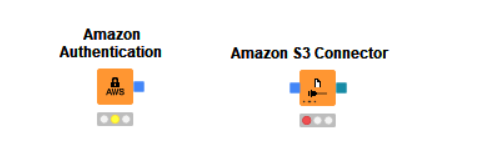

but due to the fact that i am still a beginner , i am not able to configure the following nodes:

Hi,

What information are you missing to be able to configure the nodes? Have you looked at their node descriptions? They explain pretty well what is required.

Kind regards,

Alexander
