Good morning!

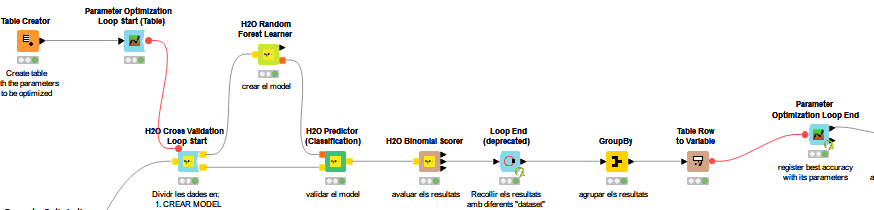

I’m using different H2O models to solve a binary classification problem. One of my H2O model workflows looks like this:

In my workflow, I am using cross-validation. I save the overall results from the cross-validation iterations in the “GroupBy” node, so at the end of all iterations I obtain several performance statistics, such as the mean Log Loss, mean accuracy, mean precision, mean recall, etc.

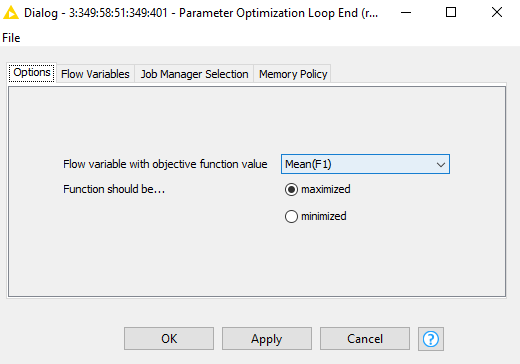

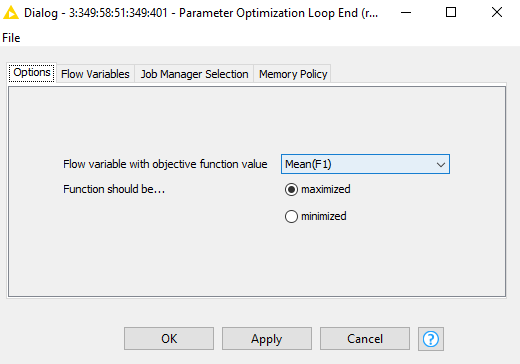

Apart from that, I am optimising the parameters. I have chosen the parameters which give me the highest F1 value. So the configuration for the Parameter Optimisation Loop End is the following:

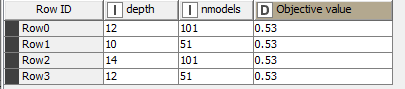

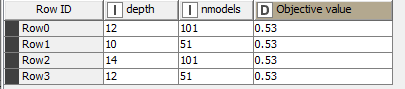

The output of the Parameter Optimisation Loop End gives me these results, where the objective value is the maximised F1 score.

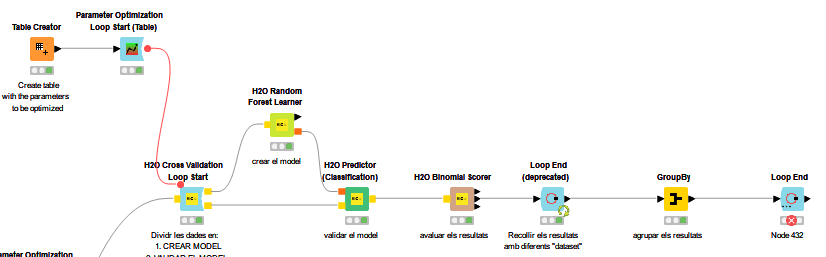

Apart from this parameter, I would like to get all the other accuracy statistics parameters I have obtained by the GroupBy node, like in this workflow (specifically in the Loop End node):

The problem is that if I use the “Loop End” node instead of the “Parameter Optimisation Loop End” node, the results cannot be calculated. The error in the Loop End node is: "Cannot read the array length because “localBest” is null".

In conclusion, I would like to implement a workflow where I can obtain the two optimised parameters (depth, nmodels) together with the different accuracy statistics I have collected in my GroupBy node. I could then choose the iteration with the best metrics for my purpose. How can I do that if I am also applying cross-validation in the model?

Hi @helfortuny,

The Parameter Optimization Loop supports collecting only one value at the loop end, because that value is the objective that some of its search strategies, such as hill climbing, use to decide which parameters to try next.

You can instead create a table with your hyperparameter values and then use a Table Row To Variable Loop Start to go through your parameter combinations and expose them as flow variables inside the loop. Then you can use a normal loop end node to collect your statistics.
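As a rough illustration of that idea outside KNIME, the Python sketch below builds one row per parameter combination, runs a placeholder evaluation for each row, and keeps every metric. The value lists, the `evaluate` function, and its dummy formula are assumptions made up for this example; in the workflow, the cross-validation loop and GroupBy node would take the place of `evaluate`.

```python
from itertools import product

# Hypothetical candidate values for the two hyperparameters from the question.
depths = [3, 5, 7]
nmodels_values = [50, 100]


def evaluate(depth, nmodels):
    """Placeholder for the cross-validated model run; returns dummy metrics."""
    # The formula is invented so that the example runs; it is not a real score.
    score = 1.0 / (1 + abs(depth - 5) + abs(nmodels - 100) / 50)
    return {"f1": score, "accuracy": score * 0.9, "logloss": 1 - score}


# One entry per parameter combination, like one row of the parameter table.
results = []
for depth, nmodels in product(depths, nmodels_values):
    metrics = evaluate(depth, nmodels)
    results.append({"depth": depth, "nmodels": nmodels, **metrics})

# Every row keeps all metrics, so any of them can be used for the final choice.
best = max(results, key=lambda row: row["f1"])
```

Because each result row carries the parameters and all metrics together, the final selection can be made on F1, Log Loss, or any other column after the loop has finished.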

Kind regards,

Alexander

Okay, thank you so much!

So should I create a table with all the possible combinations of parameters I would like to try instead of defining the range for each parameter?

Thank you in advance again.

Hi,

Yes, you can do that. You can also calculate the parameters from the currentIteration flow variable in a Counting Loop, or create all combinations of parameters using Cross Joiner nodes.
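To make the counter-based variant concrete, here is a minimal Python sketch of the arithmetic, assuming the counter starts at 0. The value lists and the helper name `params_for_iteration` are hypothetical; in KNIME the same calculation would be done with the currentIteration flow variable.

```python
# Hypothetical candidate values for the two hyperparameters.
depths = [3, 5, 7]
nmodels_values = [50, 100]


def params_for_iteration(current_iteration):
    """Map a zero-based loop counter to one (depth, nmodels) pair."""
    # Quotient selects the depth, remainder selects the number of models.
    depth_index, nmodels_index = divmod(current_iteration, len(nmodels_values))
    return depths[depth_index], nmodels_values[nmodels_index]


# The loop has to run once per combination.
total_iterations = len(depths) * len(nmodels_values)
combinations = [params_for_iteration(i) for i in range(total_iterations)]
```

The list produced this way contains the same pairs that a cross join of the two value columns would give.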

Kind regards,

Alexander

Thank you for your answer. However, I still have some doubts regarding your response. I would really appreciate it if we could speak privately to discuss it in detail.

Kind regards,

Helena

Hi,

I would prefer to keep the discussion here so that the community also benefits from the responses. What doubts do you have?

Kind regards,

Alexander

Sure, I understand. My doubt is that when I use the Cross Joiner approach, execution is very slow and it takes a long time to get results, whereas with the hill-climbing strategy it executes almost immediately. Do you know if I can somehow increase the execution speed?

Thank you very much for your support.

Hi,

With the Cross Joiner, the loop tries every combination, whereas the Parameter Optimization loop with hill climbing only tries the ones that seem promising. It does so by always moving to the next combination of hyperparameters that has the highest objective function value. That is why it is much faster than the exhaustive search, but it does have the drawback of potentially trapping itself in local optima due to its greedy nature. I think there are two options for you:

- Use the Parameter Optimization loop and append the values you want to keep to either a file or a table in an in-memory SQLite DB (SQLite Connector), then after the loop read the values again

- Implement the hill-climbing yourself using a Recursive Loop Start and Recursive Loop End.
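For a feel of what the second option involves, and of why greedy search needs far fewer evaluations than trying every combination, here is a simplified Python sketch. It is not the algorithm used by the Parameter Optimization loop: the grid, the neighbour definition, and the toy `objective` (a stand-in for the cross-validated F1 score) are all assumptions for illustration.

```python
from itertools import product

# Hypothetical search grid: 10 depths x 10 model counts = 100 combinations.
depths = list(range(1, 11))
nmodels_values = list(range(10, 110, 10))


def objective(depth, nmodels):
    """Toy stand-in for the F1 score with a single peak at (6, 70)."""
    return -((depth - 6) ** 2) - ((nmodels - 70) / 10) ** 2


def exhaustive_search():
    """Evaluate every combination, as the Cross Joiner approach does."""
    evaluated = {(d, n): objective(d, n) for d, n in product(depths, nmodels_values)}
    return max(evaluated, key=evaluated.get), len(evaluated)


def hill_climb(start):
    """Greedy search: move to the best neighbour until none improves."""
    evaluated = {}

    def score(point):
        # Cache results so each combination is evaluated at most once.
        if point not in evaluated:
            evaluated[point] = objective(*point)
        return evaluated[point]

    current = start
    while True:
        d, n = current
        steps = ((1, 0), (-1, 0), (0, 10), (0, -10))
        neighbours = [
            (d + dd, n + dn)
            for dd, dn in steps
            if (d + dd) in depths and (n + dn) in nmodels_values
        ]
        best_neighbour = max(neighbours, key=score)
        if score(best_neighbour) <= score(current):
            return current, len(evaluated)
        current = best_neighbour


grid_best, grid_evaluations = exhaustive_search()
climb_best, climb_evaluations = hill_climb((1, 10))
```

On this single-peak toy objective both searches end at the same point, but the greedy one evaluates only a fraction of the grid. With several peaks, the greedy search could stop at a local optimum instead.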

Option 1 is definitely much easier to implement.
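The logging idea behind option 1 can be sketched in plain Python with the standard `sqlite3` module. The table name, column names, the `log_iteration` helper, and the metric values are made-up placeholders, not results from this thread; in KNIME the writing and reading would be done with database nodes connected to the SQLite Connector.

```python
import sqlite3

# In-memory database that lives only as long as the connection is open.
connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE metrics ("
    "depth INTEGER, nmodels INTEGER, f1 REAL, accuracy REAL, logloss REAL)"
)


def log_iteration(depth, nmodels, f1, accuracy, logloss):
    """Append the metrics of one optimisation iteration to the table."""
    connection.execute(
        "INSERT INTO metrics VALUES (?, ?, ?, ?, ?)",
        (depth, nmodels, f1, accuracy, logloss),
    )
    connection.commit()


# Placeholder values standing in for what each loop iteration would produce.
log_iteration(3, 50, 0.71, 0.80, 0.52)
log_iteration(5, 100, 0.78, 0.84, 0.44)
log_iteration(7, 100, 0.75, 0.83, 0.47)

# After the loop: read everything back, best F1 first.
rows = connection.execute(
    "SELECT depth, nmodels, f1, accuracy, logloss FROM metrics ORDER BY f1 DESC"
).fetchall()
```

The optimisation loop still only sees the single objective value, while the side table keeps the full set of statistics for every combination that was actually tried.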

Kind regards,

Alexander
