Hello KNIME Community,

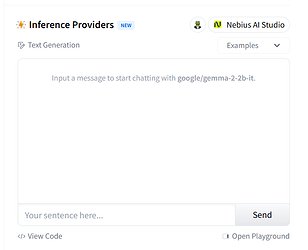

I’m trying to use the Hugging Face (HF) nodes in the KNIME AI Extension to load a model from HF, but I keep running into different errors. For this post, I’m referring to the Credentials Configuration, HF Hub Authenticator, HF Hub LLM Connector, LLM Prompter, and Table Creator nodes. My main goal is to use open-source models in KNIME via the HF nodes.

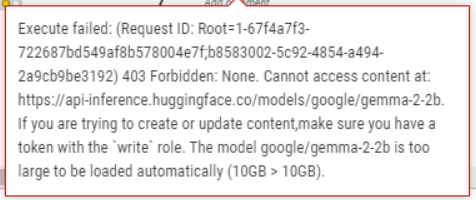

10 GB Issue

The error above is the one I’ve encountered most often when testing the HF nodes. From what I’ve read on the HF forums, it seems I would need a Pro account to use models larger than 10 GB. Is that conclusion correct, or is this a limitation of KNIME’s Hugging Face integration?

I was under the impression that Hugging Face was generally free. I wanted to use their hosted resources and models, as my personal hardware is not good enough to run most models locally.
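To make the size question concrete, here is a minimal sketch of the check I assume is being applied. The 10 GB threshold comes from the error above; treating it as 10 * 1000**3 bytes, the function names, and the example shard sizes are all my own assumptions, not anything taken from HF or KNIME.

```python
# Assumption: the limit from the error message is 10 GB, taken here as
# 10 * 1000**3 bytes. The real service may count this differently.
SIZE_LIMIT_BYTES = 10 * 1000**3


def total_size_bytes(file_sizes):
    """Sum the sizes (in bytes) of a model's weight files."""
    return sum(file_sizes)


def exceeds_limit(file_sizes, limit=SIZE_LIMIT_BYTES):
    """Return True if the combined file size is strictly above the limit."""
    return total_size_bytes(file_sizes) > limit


# Hypothetical example: two weight shards of about 9.9 GB and 3.5 GB.
example_shards = [9_900_000_000, 3_500_000_000]
print(exceeds_limit(example_shards))
```

The file sizes would have to be entered by hand from the file list on the model page, so this only helps to rule out models that are obviously too large before trying them in KNIME.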

HF Inference API Support

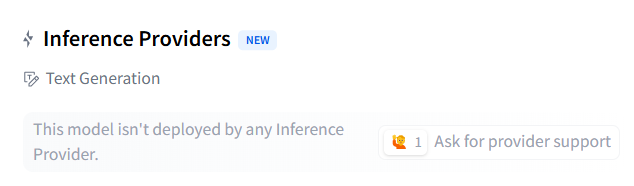

There are many models on HF, but not all of them are available via the HF Inference API. Some models have this section on the model page showing HF Inference API support or other support, but many models don’t have it.

Is there a way, or any resource, to tell which models are available through the Inference API and can therefore be used in KNIME?

HF Outage

Everything above is from a post I had drafted earlier. More recently, I’m aware that HF had an outage this month, and most models I’m trying today now return 404 errors. Has this affected KNIME’s support for the HF nodes, or is it an HF problem?

Any help or insights with this would be greatly appreciated!