Hello guys,

My JSON file has a header and a trailer.

I get an error from the JSON Reader node saying that it does not recognize the HDR token (which is the header token).

How can I skip the header and the trailer to read the file?

Please advise.

Iryna

Hello,

Can you provide a basic sample of what your JSON might look like with a header/trailer for further testing? It doesn’t have to be long, just enough to give me an idea of what it looks like so I can try to trigger the same error.

Also, can you please tell us what version of KNIME AP you’re using?

Regards,

Nickolaus

Here is the structure:

HDR ZREOF 081 20210324 110554

{

“DATA”: [

{

“PRIMARY_KEY”: {

“CODE”: “10”

},

“ATTRIBUTES”: {

“TYPE”: “ZCCP”

},

“DATES”: {

“RECEIVED_DATE”: “0000-00-00”

},

“SITE”: [

{

“SITE_G”: “XW”

}

]

}

]

}

TRL ZREOF 1

When I use JSON readers, I need to delete the HDR and TRL lines for the reader to work.

How can I delete the lines in the file before I can read it?

Thank you,

IK

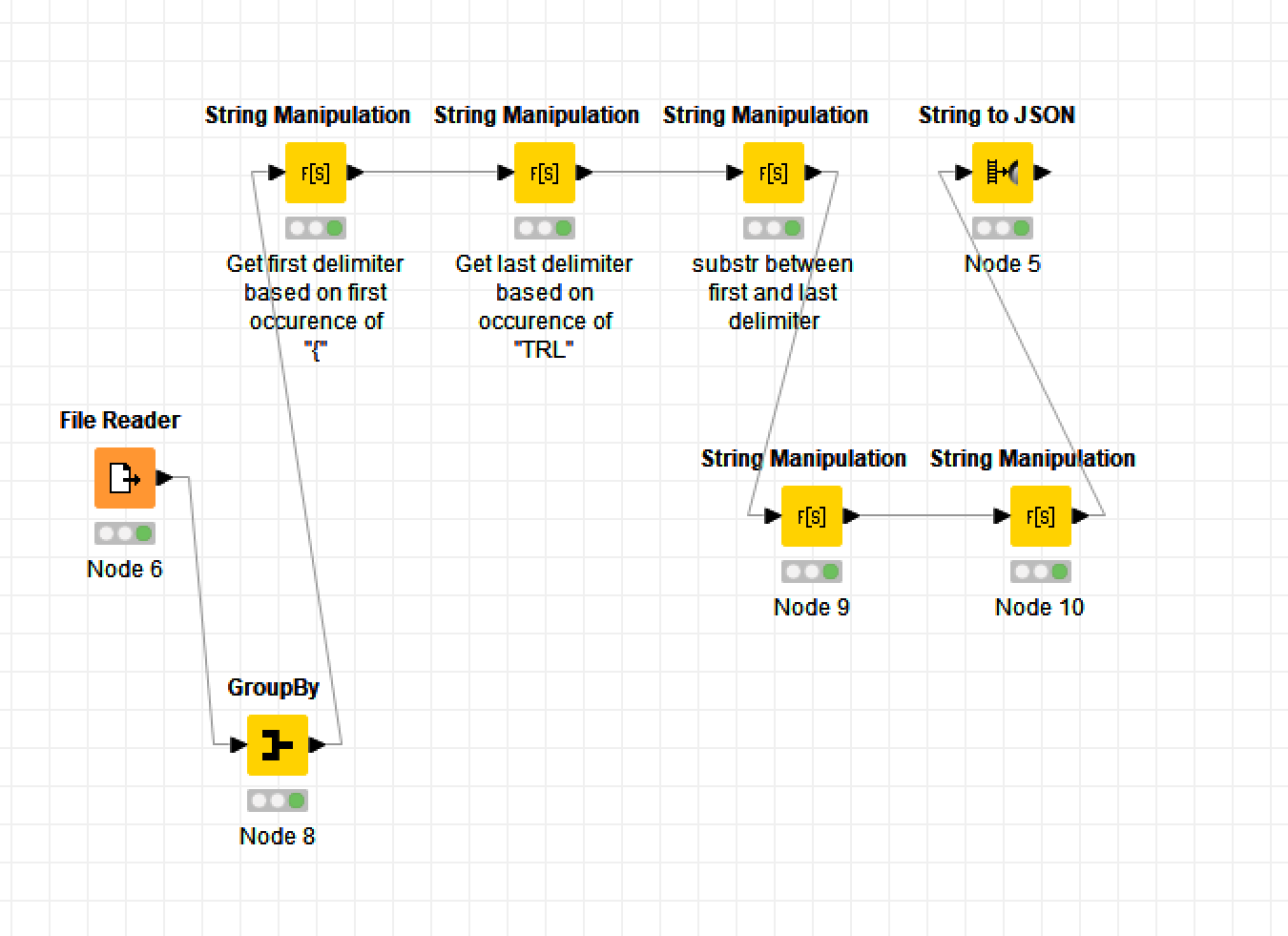

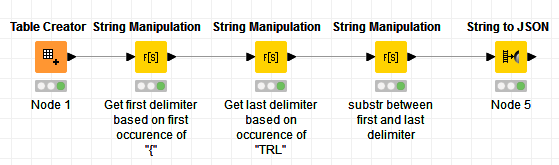

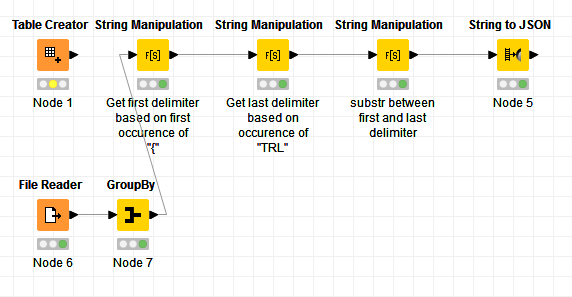

Hi @IrynaK , this workflow will do the trick for you. You can see the logic I used in the node comments:

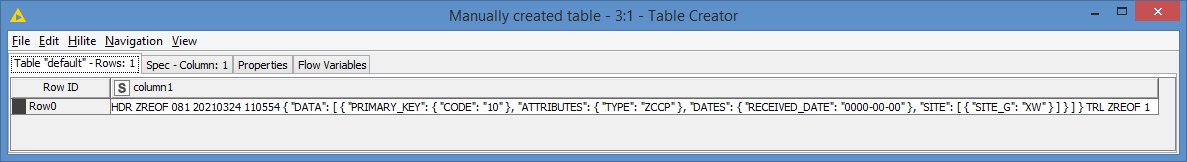

The table has the string you provided:

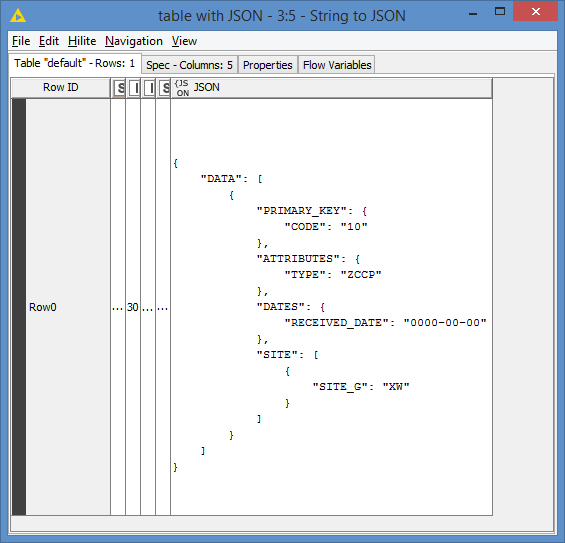

And the end result is the JSON string you expect to see:

Here is the workflow: JSON strip header and trailer.knwf (11.4 KB)

Thank you, let me try it.

One question: which node should I use to read the file? In your workflow the input is a table, but in mine I need to read from a file. Please suggest.

Hi @IrynaK, the choice of file reader depends on the format of your file. Can you provide a sample of the file?

It is a .dat file; I am not able to upload it here.

SAMPLE.txt (375 Bytes)

Try with this one

Hi @IrynaK , here you go: JSON strip header and trailer 2.knwf (15.2 KB)

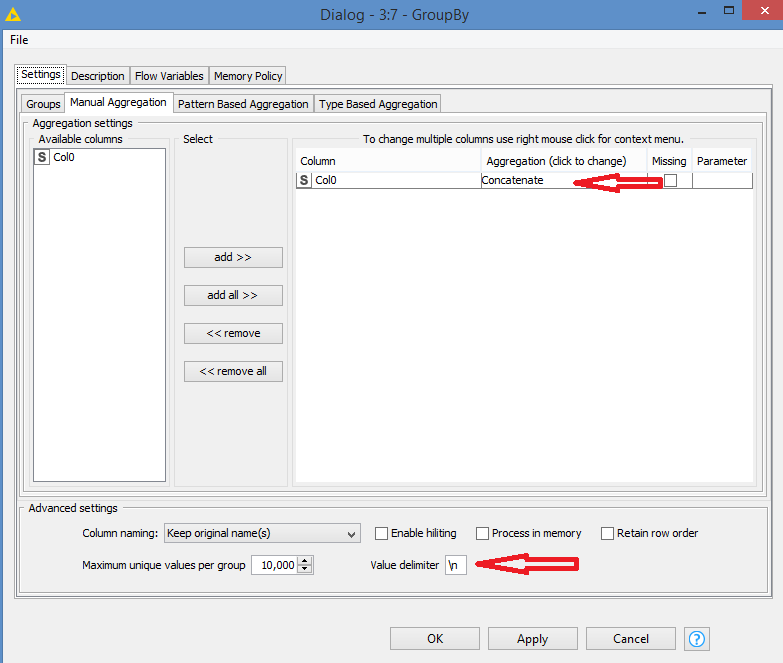

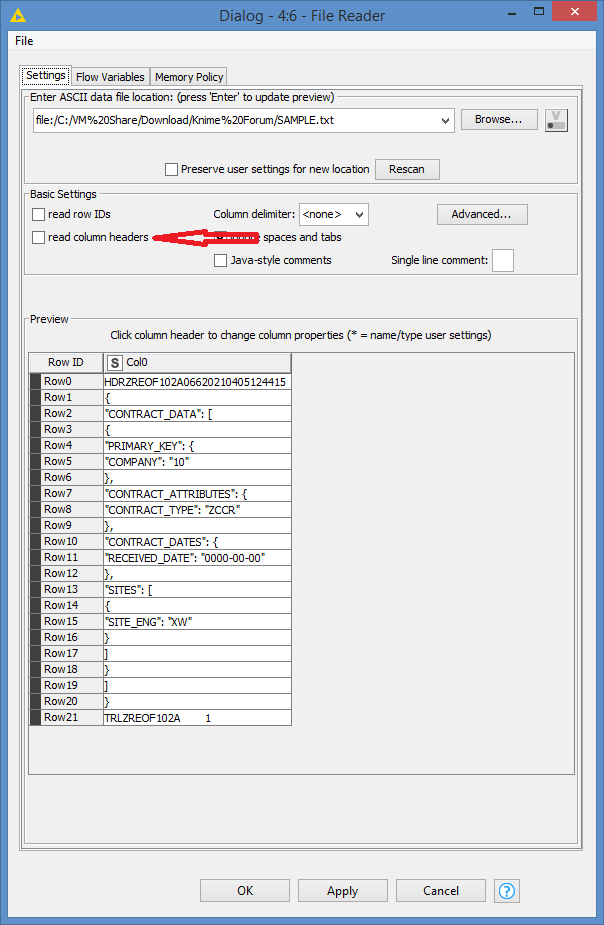

Basically, I used a File Reader. However, the File Reader treats each line terminated with \n as a row, so it reads your file as multiple rows, which I assume is NOT what we want in this case. So I concatenated all the lines via a GroupBy, which gave me the same data as the table I created.
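For anyone who wants to see the same idea outside of KNIME, here is a minimal Python sketch of the "read line by line, drop the header and trailer, concatenate the rest" logic. It is not the workflow itself: the function name `strip_header_trailer` and the `HDR`/`TRL` prefixes are assumptions taken from the sample posted above, and the sample uses straight quotes so that it parses.

```python
import json

def strip_header_trailer(lines, header_prefix="HDR", trailer_prefix="TRL"):
    """Drop blank lines and header/trailer lines, then join the rest.

    The prefixes are assumptions based on the sample in this thread.
    """
    kept = []
    for line in lines:
        text = line.strip()
        # Skip empty lines and the HDR/TRL marker lines.
        if not text or text.startswith((header_prefix, trailer_prefix)):
            continue
        kept.append(text)
    # Equivalent of concatenating all rows into a single string.
    return "\n".join(kept)

# Shortened version of the posted file, one list entry per line.
sample_lines = [
    "HDR ZREOF 081 20210324 110554",
    "{",
    '"DATA": [ {"PRIMARY_KEY": {"CODE": "10"}} ]',
    "}",
    "TRL ZREOF 1",
]

payload = strip_header_trailer(sample_lines)
data = json.loads(payload)
print(data["DATA"][0]["PRIMARY_KEY"]["CODE"])  # prints 10
```

With a real file, the list of lines could come from `open(path, encoding="utf-8").read().splitlines()`, where `path` is whatever your .dat file is called.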

From there, I plugged the rest of the workflow to it:

Just make sure that your GroupBy is configured as follows:

Thank you!

How did you get to Col0? When I do a file reader it has only one column HDRxxxxx and when I plug that field into the rest of the workflow instead of Col0 it fails.

Hello,

I got the flow to work, except that the String to JSON node is failing with this error:

ERROR String to JSON 3:5 Execute failed: Unexpected character (‘C’ (code 67)): was expecting double-quote to start field name

at [Source: (StringReader); line: 2, column: 2]

in row: Row0.

Did you get anything similar?

Hello,

I get the same error.

If you look at the source example, it uses left and right double quotation marks (“ and ”) instead of the standard boring quotation mark ("). [1] I’d noticed it originally when pasting your example into Notepad++, but didn’t think much of it at the time.

If you modify the workflow with String Manipulation nodes that change the left and right double quotation marks into regular quotation marks before the String to JSON node, then you get a nicely formatted JSON at the end.
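As a rough illustration of that replacement step outside of KNIME, the Python sketch below maps the left (U+201C) and right (U+201D) double quotation marks to the plain ASCII quote before parsing. The helper name `normalize_quotes` is made up for this example, and note the assumption that no string value legitimately contains a curly quote, since a blanket replacement would change those too.

```python
import json

# Map left/right double quotation marks to the plain ASCII double quote.
QUOTE_MAP = str.maketrans({"\u201c": '"', "\u201d": '"'})

def normalize_quotes(text):
    """Replace typographic double quotes with standard ones."""
    return text.translate(QUOTE_MAP)

# Fragment of the posted sample, written with the curly quotes it contained.
raw = "{ \u201cSITE\u201d: [ { \u201cSITE_G\u201d: \u201cXW\u201d } ] }"

clean = normalize_quotes(raw)
parsed = json.loads(clean)  # parsing `raw` directly raises json.JSONDecodeError
print(parsed["SITE"][0]["SITE_G"])  # prints XW
```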

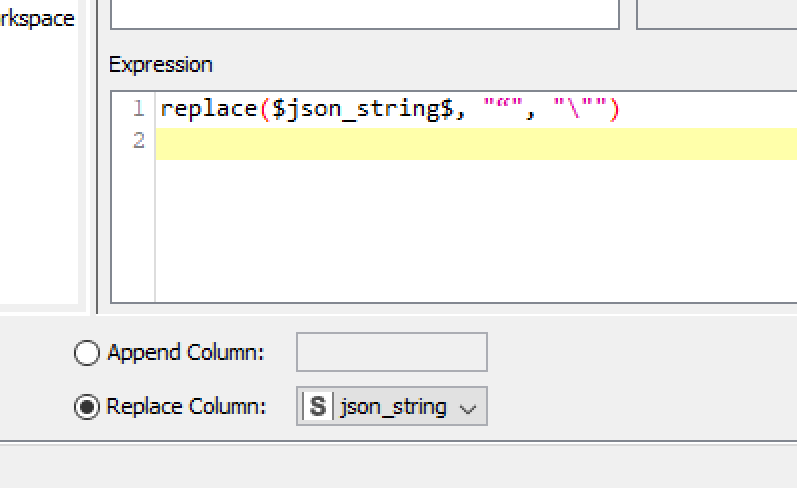

Node 9:

Node 10:

JSON strip header and trailer 2.knwf (15.2 KB)

Then, after all that ETL, you can finally use your JSON for whatever you’re doing with it.

Regards,

Nickolaus

[1] utf 8 - Are there different types of double quotes in utf-8 (PHP, str_replace)? - Stack Overflow

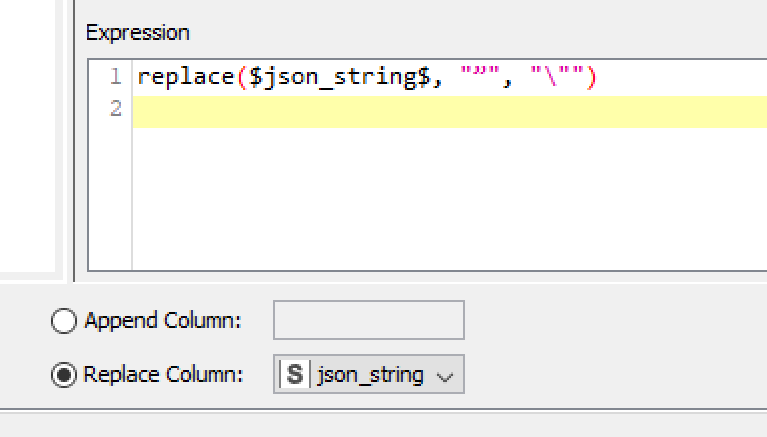

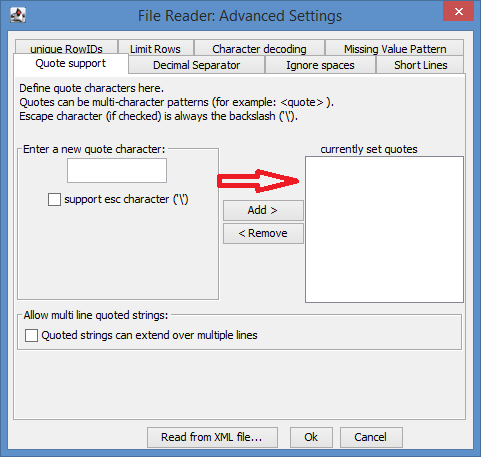

You don’t need to do so much manipulation. You just need to make sure that you configure the File Reader properly.

Since your file does not have a column header row, make sure that the File Reader is not using the first line as a header by unchecking this option (that’s how you’ll get Col0):

Furthermore, since your data has quotes in it, which you need to keep because they are part of the JSON string, make sure you remove the quote characters in Advanced settings:

You should have been able to run my workflow as is without any issues.

And yes, make sure that your input data is clean, that is, that it uses the proper quotes.
