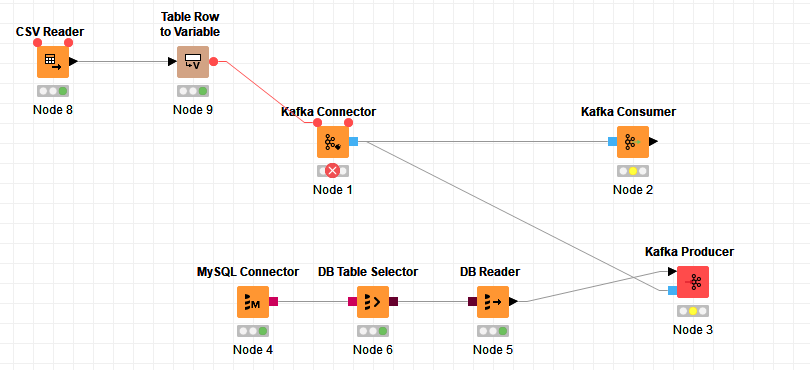

Dear all, I am trying to connect KNIME desktop to a Kafka server hosted by Confluent. However, I cannot find where to enter all the parameters required to connect.

These are the settings I need to provide; however, there is no place where I can enter some of them:

```properties
ssl.endpoint.identification.algorithm=https
sasl.mechanism=PLAIN
request.timeout.ms=20000
bootstrap.servers=pkc-43n10.us-central1.gcp.confluent.cloud:9092
retry.backoff.ms=500
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="<CLUSTER_API_KEY>" password="<CLUSTER_API_SECRET>";
security.protocol=SASL_SSL

# Enable Avro serializer with Schema Registry (optional). Note that KSQL only supports string keys.
# key.serializer=io.confluent.kafka.serializers.KafkaAvroSerializer
value.serializer=io.confluent.kafka.serializers.KafkaAvroSerializer
```
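
If these settings have to be entered one by one (as key/value pairs in a dialog, or fed from a file via flow variables), it can help to split the list into pairs first and check what you actually get. Below is a minimal Python sketch, not part of KNIME; the helper name `parse_properties` is hypothetical, and it only handles `key=value` lines (real `.properties` files also allow `:` or whitespace as separators). The key detail is splitting on the first `=` only, because the `sasl.jaas.config` value itself contains `=` signs.

```python
# Settings as pasted in the post (comment lines included).
SETTINGS = """\
ssl.endpoint.identification.algorithm=https
sasl.mechanism=PLAIN
request.timeout.ms=20000
bootstrap.servers=pkc-43n10.us-central1.gcp.confluent.cloud:9092
retry.backoff.ms=500
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="<CLUSTER_API_KEY>" password="<CLUSTER_API_SECRET>";
security.protocol=SASL_SSL
// Enable Avro serializer with Schema Registry (optional). Note that KSQL only supports string keys
// key.serializer=io.confluent.kafka.serializers.KafkaAvroSerializer
value.serializer=io.confluent.kafka.serializers.KafkaAvroSerializer
"""


def parse_properties(text: str) -> dict:
    """Hypothetical helper: return key/value pairs, skipping blanks and comments."""
    pairs = {}
    for raw in text.splitlines():
        line = raw.strip()
        # '#' and '!' are the standard .properties comment markers; '//' is
        # also skipped here (an assumption), because it appears in the post.
        if not line or line.startswith(("#", "!", "//")):
            continue
        # Split on the FIRST '=' only: the JAAS value contains
        # username="..." and password="..." with their own '=' signs.
        key, _, value = line.partition("=")
        pairs[key.strip()] = value.strip()
    return pairs


if __name__ == "__main__":
    for key, value in parse_properties(SETTINGS).items():
        print(f"{key} -> {value}")
```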

Some client examples are here:

Would anyone be able to guide me on this? Thank you all.

ScottF

February 25, 2020, 9:28pm

Hello @paideepak and welcome to the forum.

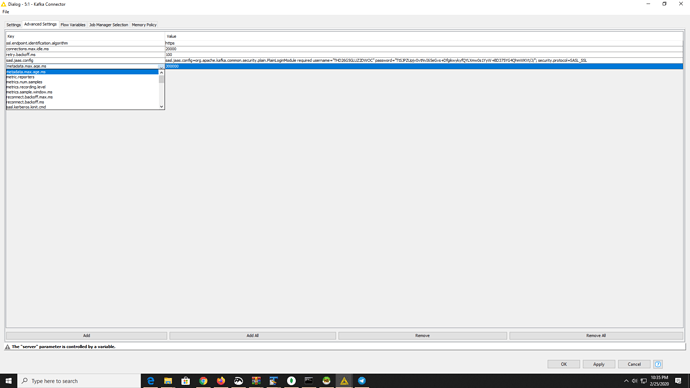

Have you tried the Kafka Connector node available in the KNIME Extension for Apache Kafka (Preview)? On the Advanced Settings tab of its configuration dialog, you can set key/value pairs.

Yes, sir. I have used the Kafka Connector. The problem is that some of the settings I need are not available in the advanced settings; I can only choose from a drop-down list.

ScottF

February 25, 2020, 9:38pm

Ah, understood. Let me check with the dev team about the best way to approach this and I’ll get back to you.

Thank you, @ScottF. I highly appreciate it.

I have also tried to pass the settings via flow variables (hence the CSV Reader in my workflow). However, I am not 100% sure whether the node is actually picking up any of them. Thanks again.
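
One thing worth checking when the values come from a CSV file: the `sasl.jaas.config` value contains double quotes, spaces and a trailing semicolon, so it has to be quoted correctly in the file. Below is a minimal Python sketch using the standard `csv` module; the `key`/`value` column names are hypothetical, and whether KNIME's CSV Reader unquotes the value the same way depends on its own settings, which this sketch does not test.

```python
import csv
import io

# A few of the settings from the post as (key, value) pairs.
PAIRS = [
    ("security.protocol", "SASL_SSL"),
    ("sasl.mechanism", "PLAIN"),
    ("sasl.jaas.config",
     "org.apache.kafka.common.security.plain.PlainLoginModule required "
     'username="<CLUSTER_API_KEY>" password="<CLUSTER_API_SECRET>";'),
]


def to_csv(pairs) -> str:
    """Write a two-column CSV with a hypothetical 'key,value' header."""
    buf = io.StringIO()
    # Default QUOTE_MINIMAL: fields containing '"' get quoted, inner quotes doubled.
    writer = csv.writer(buf)
    writer.writerow(["key", "value"])
    writer.writerows(pairs)
    return buf.getvalue()


def from_csv(text: str) -> dict:
    """Read the CSV back to check that every value survives unchanged."""
    return {row["key"]: row["value"] for row in csv.DictReader(io.StringIO(text))}


if __name__ == "__main__":
    text = to_csv(PAIRS)
    print(text)
    print(from_csv(text) == dict(PAIRS))
```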

Hey @paideepak,

except for value.serializer, I think we support everything.

Connector:

The “Kafka Cluster” field corresponds to bootstrap.servers=pkc-43n10.us-central1.gcp.confluent.cloud:9092

Advanced tab:

```properties
ssl.endpoint.identification.algorithm=https
sasl.mechanism=PLAIN
retry.backoff.ms=500
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="<CLUSTER_API_KEY>" password="<CLUSTER_API_SECRET>";
security.protocol=SASL_SSL
```

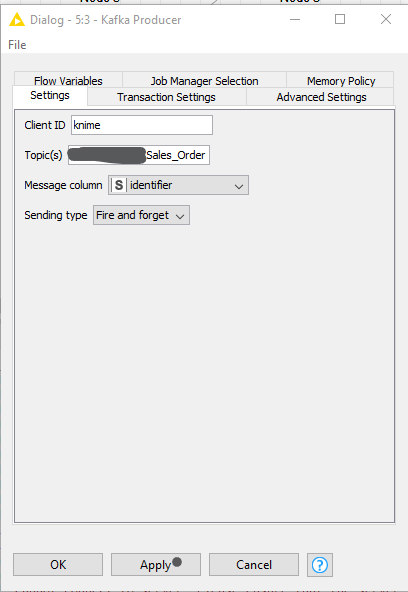

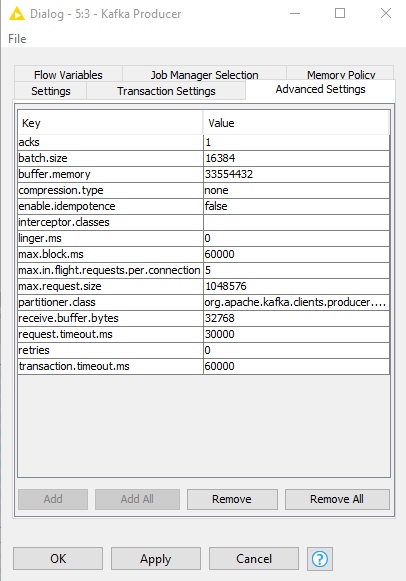
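
The mapping described above can be sketched in Python as a plain lookup. The helper `split_for_connector` is hypothetical and only restates this reply; it does not call KNIME. `request.timeout.ms` from the original list is not mentioned in the reply, so the sketch reports it as "not covered" instead of guessing where it belongs.

```python
# Settings from the original post (the commented-out key.serializer is omitted).
SETTINGS = {
    "ssl.endpoint.identification.algorithm": "https",
    "sasl.mechanism": "PLAIN",
    "request.timeout.ms": "20000",
    "bootstrap.servers": "pkc-43n10.us-central1.gcp.confluent.cloud:9092",
    "retry.backoff.ms": "500",
    "sasl.jaas.config": (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        'username="<CLUSTER_API_KEY>" password="<CLUSTER_API_SECRET>";'
    ),
    "security.protocol": "SASL_SSL",
    "value.serializer": "io.confluent.kafka.serializers.KafkaAvroSerializer",
}

# Keys the reply lists for the Advanced tab.
ADVANCED_TAB_KEYS = {
    "ssl.endpoint.identification.algorithm",
    "sasl.mechanism",
    "retry.backoff.ms",
    "sasl.jaas.config",
    "security.protocol",
}
# Per the reply, value.serializer is the one setting not supported.
UNSUPPORTED_KEYS = {"value.serializer"}


def split_for_connector(settings):
    """Return (kafka_cluster, advanced_pairs, unsupported, not_covered)."""
    kafka_cluster = settings.get("bootstrap.servers")  # "Kafka Cluster" field
    advanced = {k: v for k, v in settings.items() if k in ADVANCED_TAB_KEYS}
    unsupported = sorted(k for k in settings if k in UNSUPPORTED_KEYS)
    handled = {"bootstrap.servers"} | ADVANCED_TAB_KEYS | UNSUPPORTED_KEYS
    not_covered = sorted(k for k in settings if k not in handled)
    return kafka_cluster, advanced, unsupported, not_covered


if __name__ == "__main__":
    cluster, advanced, unsupported, rest = split_for_connector(SETTINGS)
    print("Kafka Cluster:", cluster)
    for key, value in advanced.items():
        print("Advanced tab:", key, "=", value)
    print("Not supported:", unsupported)
    print("Not covered by the reply:", rest)
```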

Kafka Producer:

If you still have trouble connecting to Kafka, please let us know.

FYI, related topic: Kafka Node and Configuration of Cloud Kafka Provider (Cloud Karafka) (a lot of timeout probs)

Thank you! That helped me connect.

Now I am having trouble configuring the producer. The consumer seems to be doing alright (returning an empty table).

Not sure what I am doing wrong with the producer.

@paideepak,

happy to hear that you can connect to Kafka now.

Regarding your producer problem: just for testing, I’d change the sending type from “Fire and forget” to “Asynchronous” (this will throw an exception if something goes wrong).
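
The difference between the two sending types can be illustrated with Python's `concurrent.futures`, used here only as a stand-in for a Kafka producer (no Kafka library involved). With fire-and-forget, the result of the send is never inspected, so a failure goes unnoticed; with an asynchronous send, a completion callback sees the exception. This mirrors the Kafka Java client, where `send(record)` returns a `Future` and `send(record, callback)` reports errors to the callback; we assume KNIME's options follow the same pattern. `flaky_send` is a hypothetical send that always fails.

```python
from concurrent.futures import Future, ThreadPoolExecutor


def flaky_send(record: str) -> str:
    """Hypothetical send that always fails (e.g. an authentication error)."""
    raise RuntimeError(f"could not deliver {record!r}")


def fire_and_forget(pool: ThreadPoolExecutor, record: str) -> None:
    # The Future is discarded, so nobody ever looks at the failure.
    pool.submit(flaky_send, record)


def send_async(pool: ThreadPoolExecutor, record: str, errors: list) -> Future:
    # A completion callback inspects the outcome and records any exception.
    future = pool.submit(flaky_send, record)

    def on_done(f: Future) -> None:
        exc = f.exception()
        if exc is not None:
            errors.append(exc)

    future.add_done_callback(on_done)
    return future


if __name__ == "__main__":
    errors: list = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        fire_and_forget(pool, "row-1")     # failure is silently lost
        send_async(pool, "row-2", errors)  # failure is captured
    print(errors)
```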

Maybe the topic “Knime Kafka nodes - kafka version” helps you further with the consumer.

If you still cannot see the entries you produced via the consumer, please let me know.

Best

system

March 5, 2020, 9:47am

This topic was automatically closed 7 days after the last reply. New replies are no longer allowed.