Hi There

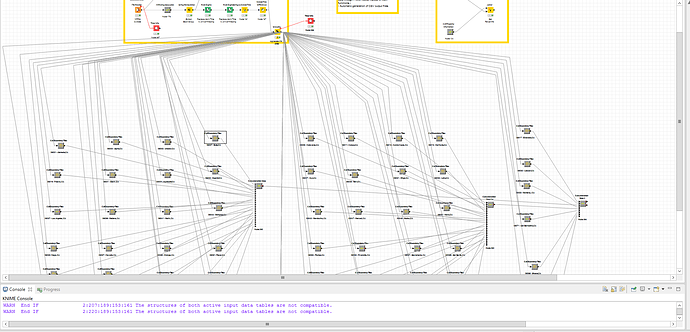

I’m trying to build a workflow where I want to run the model for an entire state. Taking a naive approach, I have distributed it so that each meta-node represents a different FIPS code in the state, which makes debugging and parallel execution easier. Even though I could copy and paste the meta-node logic some 60 times, it was very painful to design the layout, wire up the connections to each meta-node, and then add any successive logic to this workflow. (Workflow attached for reference.)

I’m guessing expert users have figured out an easier way to design such workflows.

Is there functionality in either the open source version or the Server version that lets us do some sort of templating while still running the workflow in a distributed manner?

I’m open to any suggestions.

Main Workflow:

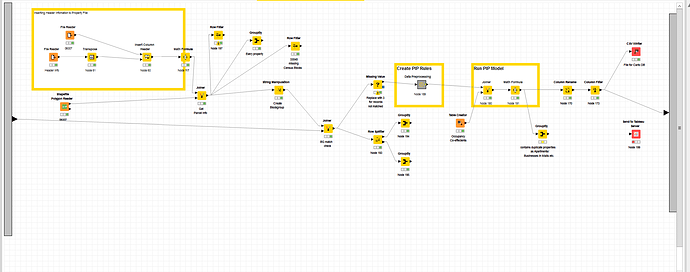

Logic inside each meta-node:

Thanks!

Could loop nodes be what you need here? That is, set up a Group Loop Start based on the FIPS code, loop over the logic in your meta-node for each FIPS code, and use a Loop End node to collect the results?
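Outside of KNIME, the group-loop idea can be sketched in a few lines of Python. This is only an illustration of the pattern (split by FIPS, run the same logic per group, collect the results), not of how the KNIME nodes are implemented. The sample rows and the `run_model` function are hypothetical stand-ins for the input table and the meta-node logic.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical rows: (fips_code, value). Real data would come from the input table.
rows = [("01001", 2.0), ("01003", 5.0), ("01001", 4.0), ("01003", 1.0)]


def run_model(fips, group_rows):
    """Placeholder for the meta-node logic; here it just averages the values."""
    values = [value for _, value in group_rows]
    return {"fips": fips, "result": sum(values) / len(values)}


def group_loop(rows):
    """Process one FIPS group at a time and collect the per-group results."""
    results = []
    # groupby needs the rows sorted by the grouping key.
    ordered = sorted(rows, key=itemgetter(0))
    for fips, group in groupby(ordered, key=itemgetter(0)):
        results.append(run_model(fips, list(group)))
    return results


results = group_loop(rows)
```

The logic is written once and reused for every FIPS code, which is the templating benefit. The groups are still processed one after another.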

There are several workflows dealing with loops on the Examples server, but here’s a simple one you might take a look at:

https://www.knime.com/nodeguide/control-structures/loops/looping-over-groups-of-the-data

My first thought was to use loops. The downside, though, is that loops are ‘sequential’, and for some reason the Loop End nodes take forever to execute (I’m not sure how well optimized the collection strategy is for each iteration). Some FIPS codes are small, while others are huge and take hours to execute, so it is important that no FIPS code has to wait on another.

Also, the data set is about 20-25 million rows.

If only there were a way inside a loop to say: make as many virtual chunks as there are FIPS codes, run them in parallel, and collect the results with an End node.
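The wish above, one chunk per FIPS code with the chunks run independently and gathered at the end, looks roughly like this in plain Python. It is a minimal sketch under stated assumptions: the rows and `run_model` are hypothetical, and a thread pool is used only to keep the example self-contained.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def split_by_fips(rows):
    """Build one chunk per FIPS code from (fips_code, value) rows."""
    chunks = defaultdict(list)
    for fips, value in rows:
        chunks[fips].append(value)
    return dict(chunks)


def run_model(fips, values):
    """Hypothetical stand-in for the meta-node logic; here it sums the values."""
    return fips, sum(values)


def run_parallel(rows, max_workers=4):
    """Submit every FIPS chunk independently, then collect all results."""
    chunks = split_by_fips(rows)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run_model, fips, values)
                   for fips, values in chunks.items()]
        # result() blocks until that chunk is done; no chunk waits on another to start.
        return dict(future.result() for future in futures)


# Hypothetical sample rows.
rows = [("01001", 2.0), ("01003", 5.0), ("01001", 4.0), ("01005", 7.0)]
totals = run_parallel(rows)
```

For CPU-bound work in CPython, swapping in `ProcessPoolExecutor` (same `submit` interface) is the usual choice, since threads share one interpreter lock.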

Have you tried the Parallel Chunk nodes in KNIME Labs? Perhaps you might be able to see some performance improvement with those - although I suspect you will still be bottlenecked by your larger FIPS groupings.
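To make the bottleneck concern concrete, here is a toy scheduling calculation. The per-FIPS runtimes are made up for illustration, and the greedy longest-first schedule is an assumption of this sketch, not a description of how KNIME assigns work.

```python
import heapq


def makespan(durations, workers):
    """Greedy schedule: longest tasks first, each to the worker that frees up first."""
    finish_times = [0.0] * workers
    heapq.heapify(finish_times)
    for duration in sorted(durations, reverse=True):
        earliest = heapq.heappop(finish_times)
        heapq.heappush(finish_times, earliest + duration)
    return max(finish_times)


# Made-up runtimes in hours: one huge FIPS group and several small ones.
hours = [6.0, 1.0, 1.0, 0.5, 0.5, 0.5, 0.5]

sequential = sum(hours)               # total time when run one after another
parallel = makespan(hours, workers=4)  # total time with four parallel workers
```

With these numbers the parallel run can never finish faster than the single largest group, however many workers are added. Any further gain would have to come from splitting or speeding up the large groups themselves.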
