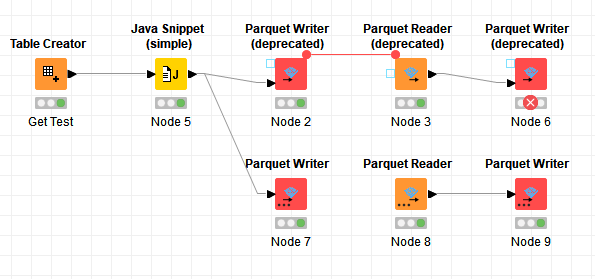

Little frustration I just ran into: I wrote a Parquet file with a List (Collection of String) column whose first several rows had a missing value, read that file back in, and tried to write it again, all using the standard v4.7.2 Parquet Reader/Writer nodes. The issue is that after writing the Parquet file and reading it back in, the first row has an empty array as the value for the list column instead of a missing cell. Trying to write that table to Parquet again fails with this stack trace:

2021-06-02 16:38:16,481 : ERROR : KNIME-Worker-25-Parquet Writer 0:86:124 : : Node : Parquet Writer : 0:86:124 : Execute failed: Data type mapping exception

org.knime.bigdata.fileformats.utility.BigDataFileFormatException: Data type mapping exception

at org.knime.bigdata.fileformats.parquet.writer.DataRowWriteSupport.write(DataRowWriteSupport.java:153)

at org.knime.bigdata.fileformats.parquet.writer.DataRowWriteSupport.write(DataRowWriteSupport.java:1)

at org.apache.parquet.hadoop.InternalParquetRecordWriter.write(InternalParquetRecordWriter.java:123)

at org.apache.parquet.hadoop.ParquetWriter.write(ParquetWriter.java:292)

at org.knime.bigdata.fileformats.parquet.writer.ParquetKNIMEWriter.writeRow(ParquetKNIMEWriter.java:172)

at org.knime.bigdata.fileformats.node.writer.FileFormatWriterNodeModel.writeToFile(FileFormatWriterNodeModel.java:404)

at org.knime.bigdata.fileformats.node.writer.FileFormatWriterNodeModel.writeRowInput(FileFormatWriterNodeModel.java:356)

at org.knime.bigdata.fileformats.node.writer.FileFormatWriterNodeModel.execute(FileFormatWriterNodeModel.java:275)

at org.knime.bigdata.fileformats.node.writer.FileFormatWriterNodeModel.execute(FileFormatWriterNodeModel.java:1)

at org.knime.core.node.NodeModel.executeModel(NodeModel.java:576)

at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1236)

at org.knime.core.node.Node.execute(Node.java:1016)

at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:558)

at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:95)

at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:201)

at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:117)

at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:334)

at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:210)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)

at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)

The write keeps failing until I remove the empty array value from the list column and replace it with null (a missing value).
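For anyone hitting the same thing, here is a minimal sketch of that workaround in plain Python. It is only an illustration of the logic, not KNIME code: it assumes the list column has been pulled out as a Python list whose cells are either `None` (missing) or a list of strings, and the function name `empty_lists_to_missing` is made up for this example.

```python
from typing import List, Optional


def empty_lists_to_missing(
    column: List[Optional[List[str]]],
) -> List[Optional[List[str]]]:
    """Return a copy of the column where every empty list becomes None."""
    return [
        None if cell is not None and len(cell) == 0 else cell
        for cell in column
    ]


# Hypothetical column as it might look after the read-back: cells that were
# originally missing have come back as empty lists.
column = [[], None, ["a", "b"], []]
cleaned = empty_lists_to_missing(column)
print(cleaned)  # [None, None, ['a', 'b'], None]
```

Note that this also turns lists that were genuinely empty before the first write into missing values, so it only fits if you don't need to tell the two apart.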

Separately, it sure would be nice if the Parquet nodes supported Set columns in addition to List. That doesn’t seem like a big stretch. Perhaps they could even allow struct mappings to and from JSON?