Yes.

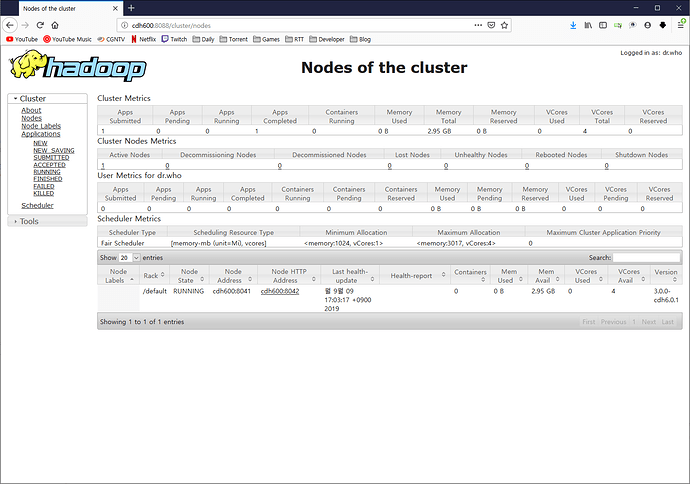

The screenshot shows how the nodes are linked.

Different versions of CDH are installed, so the host names differ, but the resources are the same.

The following log is from /var/log/livy/livy.log and covers a single request.

2019-09-09 17:20:30,405 WARN org.apache.livy.server.interactive.InteractiveSession$: sparkr.zip not found; cannot start R interpreter.

2019-09-09 17:20:30,411 INFO org.apache.livy.server.interactive.InteractiveSession$: Creating Interactive session 2: [owner: null, request: [kind: shared, proxyUser: None, conf: spark.driver.memory → 1g,spark.executor.instances → 1,spark.driver.cores → 1,livy.uri → http://192.168.200.99:8998/,spark.executor.memory → 1g,spark.dynamicAllocation.enabled → false,spark.executor.cores → 1, heartbeatTimeoutInSecond: 0]]

2019-09-09 17:20:30,430 INFO org.apache.livy.rsc.rpc.RpcServer: Connected to the port 10001

2019-09-09 17:20:30,431 WARN org.apache.livy.rsc.RSCConf: Your hostname, cdh600, resolves to a loopback address, but we couldn’t find any external IP address!

2019-09-09 17:20:30,431 WARN org.apache.livy.rsc.RSCConf: Set livy.rsc.rpc.server.address if you need to bind to another address.

2019-09-09 17:20:30,453 INFO org.apache.livy.sessions.InteractiveSessionManager: Registering new session 2

2019-09-09 17:20:34,045 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO client.RMProxy: Connecting to ResourceManager at cdh600/192.168.200.99:8032

2019-09-09 17:20:34,234 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Requesting a new application from cluster with 1 NodeManagers

2019-09-09 17:20:34,285 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO conf.Configuration: resource-types.xml not found

2019-09-09 17:20:34,286 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO resource.ResourceUtils: Unable to find ‘resource-types.xml’.

2019-09-09 17:20:34,293 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (3017 MB per container)

2019-09-09 17:20:34,294 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead

2019-09-09 17:20:34,295 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Setting up container launch context for our AM

2019-09-09 17:20:34,298 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Setting up the launch environment for our AM container

2019-09-09 17:20:34,322 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Preparing resources for our AM container

2019-09-09 17:20:34,373 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/LIVY-0.5.0.knime3-cdh6/rsc-jars/livy-api-0.5.0.knime3.jar → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/livy-api-0.5.0.knime3.jar

2019-09-09 17:20:34,588 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/LIVY-0.5.0.knime3-cdh6/rsc-jars/livy-rsc-0.5.0.knime3.jar → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/livy-rsc-0.5.0.knime3.jar

2019-09-09 17:20:34,631 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/LIVY-0.5.0.knime3-cdh6/rsc-jars/netty-all-4.0.37.Final.jar → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/netty-all-4.0.37.Final.jar

2019-09-09 17:20:34,677 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:34 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/LIVY-0.5.0.knime3-cdh6/repl_2.11-jars/livy-repl_2.11-0.5.0.knime3.jar → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/livy-repl_2.11-0.5.0.knime3.jar

2019-09-09 17:20:35,148 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/LIVY-0.5.0.knime3-cdh6/repl_2.11-jars/commons-codec-1.9.jar → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/commons-codec-1.9.jar

2019-09-09 17:20:35,194 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/LIVY-0.5.0.knime3-cdh6/repl_2.11-jars/livy-core_2.11-0.5.0.knime3.jar → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/livy-core_2.11-0.5.0.knime3.jar

2019-09-09 17:20:35,233 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/CDH-6.0.1-1.cdh6.0.1.p0.590678/lib/spark/jars/datanucleus-core-4.1.6.jar → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/datanucleus-core-4.1.6.jar

2019-09-09 17:20:35,265 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client: Uploading resource file:/run/cloudera-scm-agent/process/89-livy-LIVY_SERVER/hive-conf/hive-site.xml → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/hive-site.xml

2019-09-09 17:20:35,356 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client: Uploading resource file:/tmp/spark-7224ae72-bbd5-444b-8841-645d13c051ff/__spark_conf__5594470883316094049.zip → hdfs://cdh600:8020/user/livy/.sparkStaging/application_1568015477136_0003/spark_conf.zip

2019-09-09 17:20:35,422 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO spark.SecurityManager: Changing view acls to: livy

2019-09-09 17:20:35,424 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO spark.SecurityManager: Changing modify acls to: livy

2019-09-09 17:20:35,425 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO spark.SecurityManager: Changing view acls groups to:

2019-09-09 17:20:35,426 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO spark.SecurityManager: Changing modify acls groups to:

2019-09-09 17:20:35,427 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(livy); groups with view permissions: Set(); users with modify permissions: Set(livy); groups with modify permissions: Set()

2019-09-09 17:20:35,448 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client: Submitting application application_1568015477136_0003 to ResourceManager

2019-09-09 17:20:35,493 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO impl.YarnClientImpl: Submitted application application_1568015477136_0003

2019-09-09 17:20:35,498 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client: Application report for application_1568015477136_0003 (state: ACCEPTED)

2019-09-09 17:20:35,504 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO yarn.Client:

2019-09-09 17:20:35,504 INFO org.apache.livy.utils.LineBufferedStream: stdout: client token: N/A

2019-09-09 17:20:35,505 INFO org.apache.livy.utils.LineBufferedStream: stdout: diagnostics: N/A

2019-09-09 17:20:35,505 INFO org.apache.livy.utils.LineBufferedStream: stdout: ApplicationMaster host: N/A

2019-09-09 17:20:35,505 INFO org.apache.livy.utils.LineBufferedStream: stdout: ApplicationMaster RPC port: -1

2019-09-09 17:20:35,505 INFO org.apache.livy.utils.LineBufferedStream: stdout: queue: root.users.livy

2019-09-09 17:20:35,505 INFO org.apache.livy.utils.LineBufferedStream: stdout: start time: 1568017235465

2019-09-09 17:20:35,505 INFO org.apache.livy.utils.LineBufferedStream: stdout: final status: UNDEFINED

2019-09-09 17:20:35,505 INFO org.apache.livy.utils.LineBufferedStream: stdout: tracking URL: http://cdh600:8088/proxy/application_1568015477136_0003/

2019-09-09 17:20:35,506 INFO org.apache.livy.utils.LineBufferedStream: stdout: user: livy

2019-09-09 17:20:35,521 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO util.ShutdownHookManager: Shutdown hook called

2019-09-09 17:20:35,523 INFO org.apache.livy.utils.LineBufferedStream: stdout: 19/09/09 17:20:35 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-7224ae72-bbd5-444b-8841-645d13c051ff

2019-09-09 17:22:00,537 ERROR org.apache.livy.rsc.RSCClient: Failed to connect to context.

java.util.concurrent.TimeoutException: Timed out waiting for context to start.

at org.apache.livy.rsc.ContextLauncher.connectTimeout(ContextLauncher.java:134)

at org.apache.livy.rsc.ContextLauncher.access$300(ContextLauncher.java:63)

at org.apache.livy.rsc.ContextLauncher$2.run(ContextLauncher.java:122)

at io.netty.util.concurrent.PromiseTask$RunnableAdapter.call(PromiseTask.java:38)

at io.netty.util.concurrent.ScheduledFutureTask.run(ScheduledFutureTask.java:120)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:358)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:394)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:112)

at java.lang.Thread.run(Thread.java:748)

2019-09-09 17:22:00,542 INFO org.apache.livy.rsc.RSCClient: Failing pending job 1ac0d720-4930-4274-b57b-300065003b52 due to shutdown.

2019-09-09 17:22:00,549 INFO org.apache.livy.server.interactive.InteractiveSession: Failed to ping RSC driver for session 2. Killing application.

2019-09-09 17:22:00,549 INFO org.apache.livy.server.interactive.InteractiveSession: Stopping InteractiveSession 2…

2019-09-09 17:22:00,759 INFO org.apache.hadoop.yarn.client.api.impl.YarnClientImpl: Killed application application_1568015477136_0003

2019-09-09 17:22:00,771 INFO org.apache.livy.server.interactive.InteractiveSession: Stopped InteractiveSession 2.

2019-09-09 17:22:00,771 WARN org.apache.livy.server.interactive.InteractiveSession: Fail to get rsc uri

java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Timed out waiting for context to start.

at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41)

at org.apache.livy.server.interactive.InteractiveSession$$anonfun$22.apply(InteractiveSession.scala:406)

at org.apache.livy.server.interactive.InteractiveSession$$anonfun$22.apply(InteractiveSession.scala:406)

at scala.concurrent.impl.Future$PromiseCompletingRunnable.liftedTree1$1(Future.scala:24)

at scala.concurrent.impl.Future$PromiseCompletingRunnable.run(Future.scala:24)

at scala.concurrent.impl.ExecutionContextImpl$AdaptedForkJoinTask.exec(ExecutionContextImpl.scala:121)

at scala.concurrent.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260)

at scala.concurrent.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339)

at scala.concurrent.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979)

at scala.concurrent.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107)

Caused by: java.util.concurrent.TimeoutException: Timed out waiting for context to start.

at org.apache.livy.rsc.ContextLauncher.connectTimeout(ContextLauncher.java:134)

at org.apache.livy.rsc.ContextLauncher.access$300(ContextLauncher.java:63)

at org.apache.livy.rsc.ContextLauncher$2.run(ContextLauncher.java:122)

at io.netty.util.concurrent.PromiseTask$RunnableAdapter.call(PromiseTask.java:38)

at io.netty.util.concurrent.ScheduledFutureTask.run(ScheduledFutureTask.java:120)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:358)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:394)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:112)

at java.lang.Thread.run(Thread.java:748)
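
The only warning in the log besides the missing sparkr.zip is the one about the hostname cdh600 resolving to a loopback address. I am not sure it is the cause of the timeout, but it can be checked on the Livy host with a small Python sketch like the one below. The function name `resolves_to_loopback` is my own and not part of Livy or Spark; it only uses the standard `socket` and `ipaddress` modules.

```python
import ipaddress
import socket


def resolves_to_loopback(hostname: str) -> bool:
    """Return True if every address the name resolves to is a loopback address.

    Hypothetical helper for illustration; not part of Livy.
    """
    infos = socket.getaddrinfo(hostname, None)
    # Each entry's sockaddr starts with the address string; drop any IPv6 zone id.
    addresses = {info[4][0].split("%")[0] for info in infos}
    return all(ipaddress.ip_address(addr).is_loopback for addr in addresses)
```

Calling `resolves_to_loopback(socket.gethostname())` on the Livy server should return `True` if the situation from the warning applies. In that case the log itself suggests setting livy.rsc.rpc.server.address to an externally reachable address.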