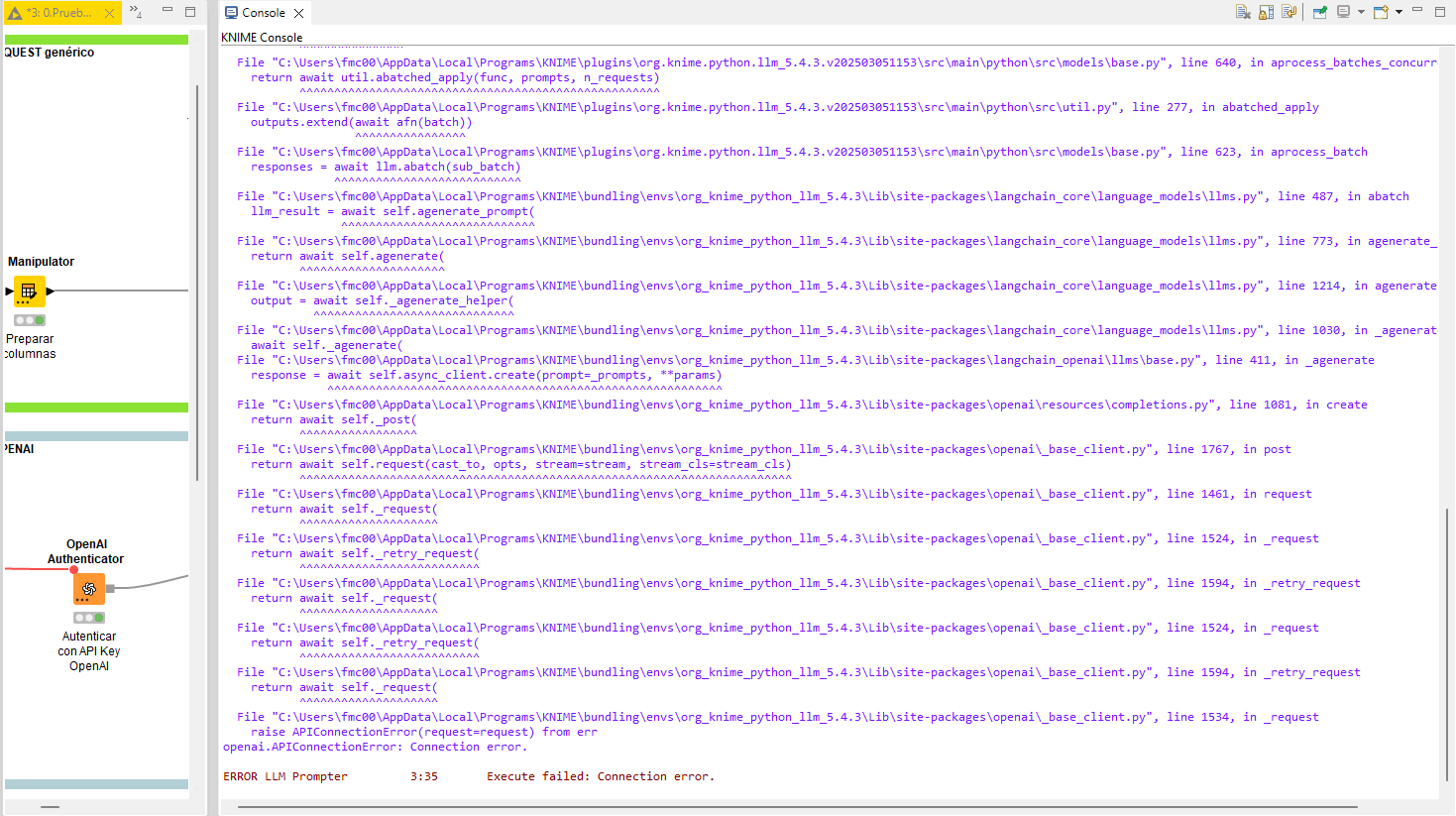

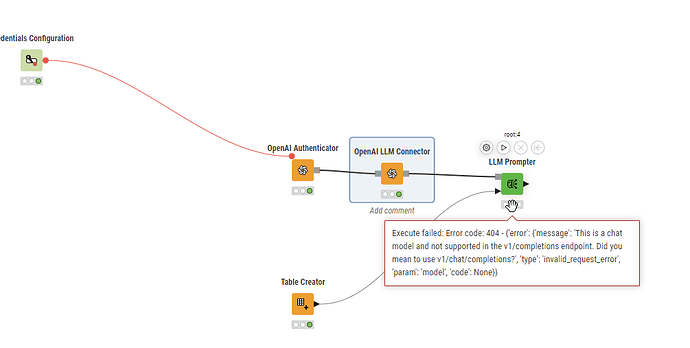

Just chiming in as I experience the exact same issue while attempting to apply newly acquired knowledge from the recent AI courses. I tried several models and verified that my API key has no restrictions.

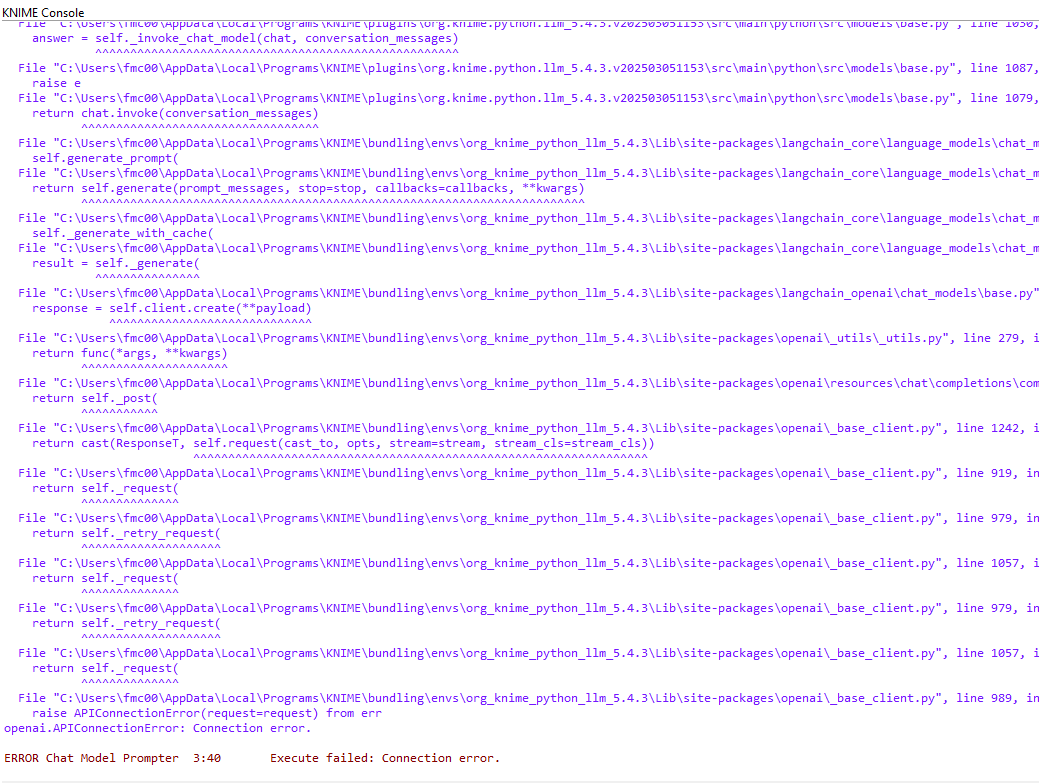

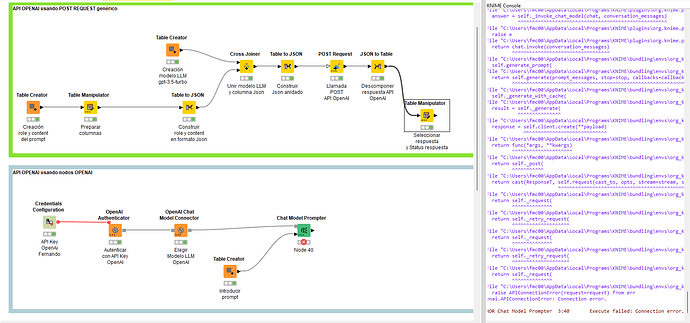

Console output with stack trace:

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python3.nodes_5.4.1.v202501291500\src\main\python\_node_backend_launcher.py", line 1055, in execute

outputs = self._node.execute(exec_context, *inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python3.nodes_5.4.1.v202501291500\src\main\python\knime\extension\nodes.py", line 1237, in wrapper

results = func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python.llm_5.4.3.v202503051153\src\main\python\src\models\base.py", line 748, in execute

responses = _call_model_with_output_format_fallback(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python.llm_5.4.3.v202503051153\src\main\python\src\models\base.py", line 1179, in _call_model_with_output_format_fallback

return response_func(model)

^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python.llm_5.4.3.v202503051153\src\main\python\src\models\base.py", line 742, in get_responses

return asyncio.run(

^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\asyncio\runners.py", line 190, in run

return runner.run(main)

^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\asyncio\runners.py", line 118, in run

return self._loop.run_until_complete(task)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\asyncio\base_events.py", line 654, in run_until_complete

return future.result()

^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python.llm_5.4.3.v202503051153\src\main\python\src\models\base.py", line 640, in aprocess_batches_concurrently

return await util.abatched_apply(func, prompts, n_requests)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python.llm_5.4.3.v202503051153\src\main\python\src\util.py", line 277, in abatched_apply

outputs.extend(await afn(batch))

^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\plugins\org.knime.python.llm_5.4.3.v202503051153\src\main\python\src\models\base.py", line 623, in aprocess_batch

responses = await llm.abatch(sub_batch)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_core\runnables\base.py", line 905, in abatch

return await gather_with_concurrency(configs[0].get("max_concurrency"), *coros)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_core\runnables\utils.py", line 71, in gather_with_concurrency

return await asyncio.gather(*coros)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_core\runnables\base.py", line 902, in ainvoke

return await self.ainvoke(input, config, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_core\language_models\chat_models.py", line 306, in ainvoke

llm_result = await self.agenerate_prompt(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_core\language_models\chat_models.py", line 871, in agenerate_prompt

return await self.agenerate(

^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_core\language_models\chat_models.py", line 831, in agenerate

raise exceptions[0]

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_core\language_models\chat_models.py", line 999, in _agenerate_with_cache

result = await self._agenerate(

^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\langchain_openai\chat_models\base.py", line 951, in _agenerate

response = await self.async_client.create(**payload)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\openai\resources\chat\completions\completions.py", line 1927, in create

return await self._post(

^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\openai\_base_client.py", line 1767, in post

return await self.request(cast_to, opts, stream=stream, stream_cls=stream_cls)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\openai\_base_client.py", line 1461, in request

return await self._request(

^^^^^^^^^^^^^^^^^^^^

File "C:\Program Files\knime_5.3.0\bundling\envs\org_knime_python_llm_5.4.3\Lib\site-packages\openai\_base_client.py", line 1562, in _request

raise self._make_status_error_from_response(err.response) from None

openai.NotFoundError: Error code: 404 - {'error': {'message': 'The model `gpt-4` does not exist or you do not have access to it.', 'type': 'invalid_request_error', 'param': None, 'code': 'model_not_found'}}

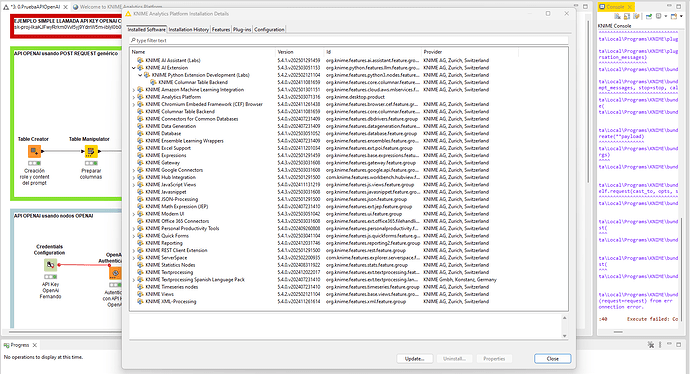

2025-04-08 13:11:03,526 : ERROR : KNIME-Worker-1088-LLM Prompter 8:1462 : : Node : LLM Prompter : 8:1462 : Execute failed: Error code: 404 - {'error': {'message': 'The model `gpt-4` does not exist or you do not have access to it.', 'type': 'invalid_request_error', 'param': None, 'code': 'model_not_found'}}

org.knime.python3.nodes.PythonNodeRuntimeException: Error code: 404 - {'error': {'message': 'The model `gpt-4` does not exist or you do not have access to it.', 'type': 'invalid_request_error', 'param': None, 'code': 'model_not_found'}}

at org.knime.python3.nodes.CloseablePythonNodeProxy$FailureState.throwIfFailure(CloseablePythonNodeProxy.java:805)

at org.knime.python3.nodes.CloseablePythonNodeProxy.execute(CloseablePythonNodeProxy.java:568)

at org.knime.python3.nodes.DelegatingNodeModel.lambda$4(DelegatingNodeModel.java:180)

at org.knime.python3.nodes.DelegatingNodeModel.runWithProxy(DelegatingNodeModel.java:237)

at org.knime.python3.nodes.DelegatingNodeModel.execute(DelegatingNodeModel.java:178)

at org.knime.core.node.NodeModel.executeModel(NodeModel.java:596)

at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1284)

at org.knime.core.node.Node.execute(Node.java:1049)

at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:603)

at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:98)

at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:198)

at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:117)

at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:369)

at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:223)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)

at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)
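Since the 404 says `model_not_found`, one way to rule KNIME out would be to ask the OpenAI API directly which models the key can see. Below is a minimal sketch using only the Python standard library. It assumes the public `GET /v1/models` endpoint and the key being passed in by the caller; the helper names `fetch_model_ids` and `extract_model_ids` are made up for illustration and are not part of KNIME or the `openai` package.

```python
import json
import urllib.request

# Public OpenAI endpoint that lists the models visible to an API key.
MODELS_URL = "https://api.openai.com/v1/models"


def extract_model_ids(payload: dict) -> list[str]:
    """Return the sorted model IDs from a /v1/models response body."""
    return sorted(entry["id"] for entry in payload.get("data", []))


def fetch_model_ids(api_key: str, url: str = MODELS_URL) -> list[str]:
    """Query the endpoint with the key as a bearer token (hypothetical helper)."""
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {api_key}"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return extract_model_ids(payload)


# Example usage (needs network access and a real key, so it is left commented out):
# ids = fetch_model_ids("sk-...")
# print("gpt-4 visible:", "gpt-4" in ids)
```

If `gpt-4` is missing from the returned list, the error probably comes from the account or project behind the key rather than from the LLM Prompter node. Note that the request only carries the secret key as a bearer token; no user name is sent.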

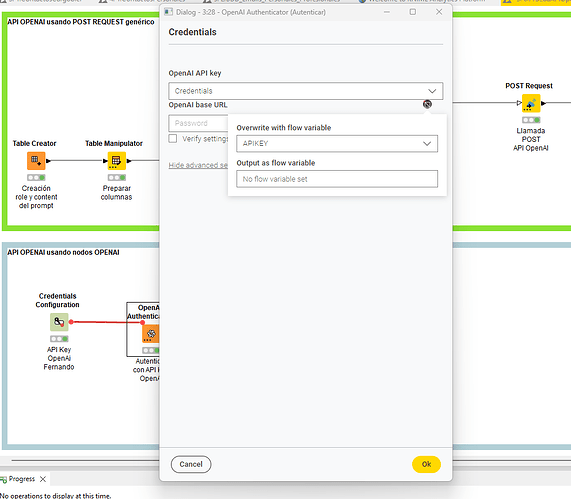

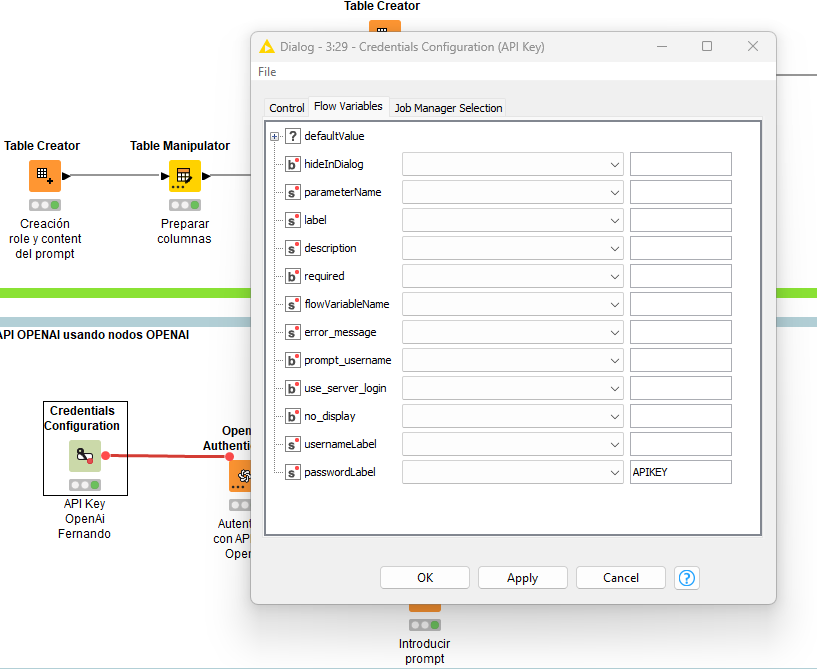

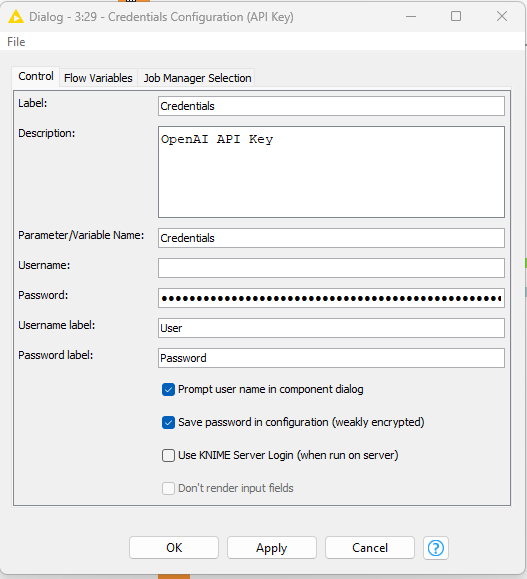

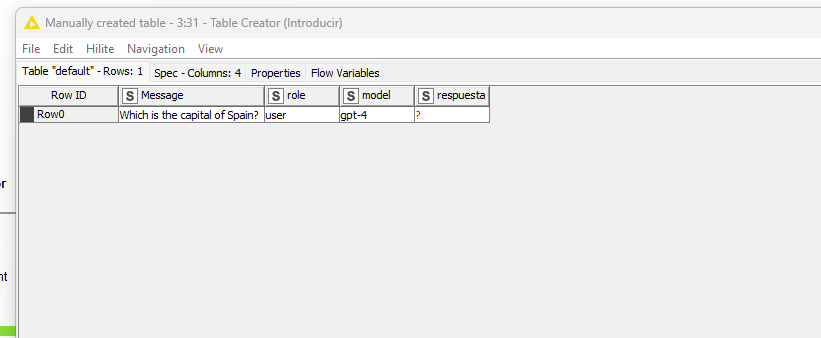

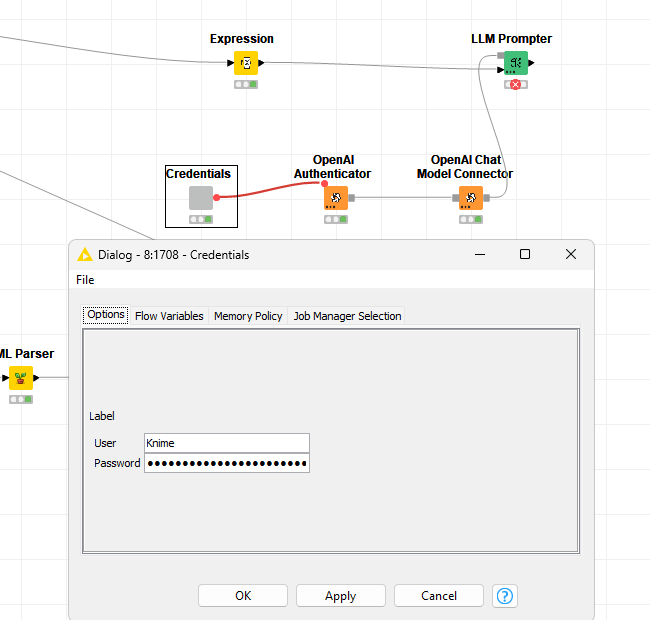

What bugs me a bit is that the credentials consist of a user/secret pair, whereas the OpenAI API only provides a secret key with an optional name.